
“There is nothing so practical as a good theory”: a pragmatic guide for selecting theoretical approaches for implementation projects

Elizabeth A Lynch, Alison Mudge, Sarah Knowles, Alison L Kitson, Sarah C Hunter, Gill Harvey.


Received 2018 Jun 12; Accepted 2018 Oct 31; Collection date 2018.

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License ( http://creativecommons.org/licenses/by/4.0/ ), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated.

A multitude of theories, models and frameworks relating to implementing evidence-based practice in health care exist, which can be overwhelming for clinicians and clinical researchers new to the field of implementation science. Clinicians often bear responsibility for implementation, but may be unfamiliar with theoretical approaches designed to inform or understand implementation.

In this article, a multidisciplinary group of clinicians and health service researchers present a pragmatic guide to help clinicians and clinical researchers understand what implementation theories, models and frameworks are; how a theoretical approach to implementation might be used; and some prompts to consider when selecting a theoretical approach for an implementation project. Ten commonly used and highly cited theoretical approaches are presented, none of which have been utilised to their full potential in the literature to date. Specifically, theoretical approaches tend to be applied retrospectively to evaluate or interpret findings from a completed implementation project, rather than being used to plan and design theory-informed implementation strategies which would intuitively have a greater likelihood of success. We emphasise that there is no right or wrong way of selecting a theoretical approach, but encourage clinicians to carefully consider the project’s purpose, scope and available data and resources to allow them to select an approach that is most likely to “value-add” to the implementation project.

By assisting clinicians and clinical researchers to become confident in selecting and applying theoretical approaches to implementation, we anticipate an increase in theory-informed implementation projects. This in turn will contribute to more nuanced advice on how to address evidence-practice gaps and, ultimately, to better health outcomes.

Electronic supplementary material

The online version of this article (10.1186/s12913-018-3671-z) contains supplementary material, which is available to authorized users.

Keywords: Evidence-based practice, Implementation, Knowledge translation, Theory-informed

Clinicians and clinical researchers usually have expert knowledge about evidence-based interventions for different clinical conditions. While some health professionals may have experience of implementing evidence-based interventions in their own practice, or of overseeing a change in practice by health professionals directly under their supervision, many are not familiar with, or confident about, the current evidence on how to implement evidence-based interventions effectively, efficiently and sustainably in routine clinical practice.

Implementation science has been defined as “the scientific study of methods to promote the systematic uptake of research findings and other evidence-based practices into routine practice, and, hence, to improve the quality and effectiveness of health services” [ 1 ], and recognises that strong evidence alone is not sufficient to change practice. Researchers from a multitude of backgrounds have proposed different approaches to predicting, guiding and explaining how evidence is implemented, drawing on practical experience, observation, empirical study and the development and synthesis of an eclectic range of theories about individual, group and organisational change. This has resulted in a plethora of implementation frameworks, models, and theories; recent data suggest more than 100 theoretical approaches are being used by implementation researchers [ 2 ].

Use of established implementation theories, models or frameworks can help implementation researchers by contributing to new and more nuanced knowledge about how and why implementation succeeds or fails [ 3 ]. For clinicians, implementation theories, models and frameworks can be applied so that new initiatives are planned, implemented and evaluated more systematically, which may enhance the success, sustainability and scalability of the project. When we consciously move from implicit assumptions about how implementation works to explicit, structured thinking through the application of an established theoretical approach, we may be able to be more objective and more creative in how we plan, guide and evaluate implementation.

Despite the growing recognition of the need to use theory to inform implementation programs [ 3 ], many clinicians and clinical researchers are unfamiliar with theories of implementation and behaviour change. For instance, in Australia in 2012, the majority of medical, nursing and allied health professionals who had successfully applied for National Health and Medical Research Council (NHMRC) Translating Research Into Practice (TRIP) fellowships had no previous experience using implementation theories or frameworks [ 4 ].

While previous manuscripts about implementation theories, models and frameworks are helpful for researchers with good background knowledge in implementation science to make sense of the different theoretical approaches available (for example, by providing a taxonomy to distinguish between different categories of implementation theories, models and frameworks) [ 3 ], it is important that information about how and why to select and apply theoretical approaches is made accessible to frontline health professionals who strive to provide the best quality, evidence-based care to their patients.

Throughout this manuscript, we will caution the reader that this article cannot provide a simple “paint by numbers” approach to implementation. It would be counter-productive to try to create an algorithm that would capture planning and implementing behaviour change across multiple innovations in the complex adaptive systems of modern healthcare. Rather, we encourage thoughtful assessment and responsiveness to the particular circumstances of each implementation—the change to be introduced, the proposed way to make the change, the people who need to be involved, and the setting in which the change happens. Our intention is to provide accessible guidance based on our collective clinical and research experience, to assist clinicians and clinical researchers in their endeavours to design and conduct more effective implementation projects to improve clinical care, and to design more robust evaluations to advance the empirical evidence for this emerging science.

Therefore the aims of this paper are to:

Demystify some of the jargon through defining the nature and role of frameworks, models and theories

Describe and compare commonly used theoretical approaches, and how they are applied in practice

Suggest how to select a theoretical approach based on purpose, scope and context of the individual project

The suggestions made in this debate paper are derived from our experiential knowledge as a multidisciplinary group of clinicians and health service researchers with interest and experience in implementation science in different international settings. EAL was a hospital-based physiotherapist for 14 years and is now an early-career researcher; AM is a practising general physician and mid-career researcher; SK has a psychology background and is a researcher in a National Institute for Health Research Collaboration for Leadership in Applied Health Research and Care; SCH (psychology background) is an early-career researcher; and GH and ALK have backgrounds in nursing and are senior researchers with extensive experience in improvement and implementation research. We work collaboratively with front-line clinicians and run workshops to help clinicians and clinical researchers apply theory to improve the success and sustainability of implementation projects. Through this work we recognise the need to help clinicians and clinical researchers navigate this complex landscape.

We encourage the reader to consider our recommendations as prompts or guides rather than definitive prescriptions. We have found that a more systematic understanding of implementation processes tends to be generated when different people (with different backgrounds and different approaches to implementation) share their experiences. Therefore, we have framed this paper using the experiences of author AM to illustrate how clinicians can engage with implementation theories, models and frameworks.

Case study part 1 (experience of author AM): I am a physician and I plan to implement a delirium prevention program. I have some implementation experience and know that it won’t be easy. I have heard about implementation science, so I hope there may be tools to help me. I understand a bit about Knowledge to Action (KTA), which I can use to guide my planning. I have strong evidence of effectiveness and cost-effectiveness [knowledge creation & synthesis] , and there are established clinical practice guidelines [knowledge tools/products] . There is an effective model to implement delirium prevention developed in the USA ( http://www.hospitalelderlifeprogram.org ), but it used skilled geriatric nurses and large numbers of trained volunteers, which is not feasible in my hospital. None of the strategies in the guidelines are “hard”, but they just don’t seem to get done consistently. I need to find out from staff and patients why this is the case, and then try to find ways to support them. Perhaps they need more education or reminders, or maybe we can reallocate the tasks to make it easier? Or are there strategies I am not familiar with? Whatever I do, I want to measure better care in some way to keep my boss happy and the staff interested. And my previous projects have tended to fizzle out over time… KTA gives me part of a plan but I need some more tools to know how to take the next steps.

Defining frameworks, models and theories

Some researchers have delineated between frameworks, models and theories, whereas other researchers use these terms interchangeably. In general, implementation frameworks, models and theories are cognitive tools that can assist a researcher or implementer to plan, predict, guide or evaluate the process of implementing evidence into practice.

Generally (for more detail refer to cited reference) [ 5 ]:

A framework lists the basic structure and components underlying a system or concept. Examples of typical frameworks are the Consolidated Framework for Implementation Research (CFIR) [ 6 ], the Theoretical Domains Framework (TDF) [ 7 , 8 ], RE-AIM [ 8 – 10 ] and Promoting Action on Research Implementation in Health Services (PARIHS) [ 9 , 10 ].

A model is a simplified representation of a system or concept with specified assumptions. An example of a model is the Knowledge to Action (KTA) cycle [ 11 ].

A theory may be explanatory or predictive, and underpins hypotheses and assumptions about how implementation activities should occur. An example of a theory is the Normalization Process Theory (NPT) [ 12 ].

In our experience, clinicians and clinical researchers want to know what implementation approach will help them and their project best; for many clinicians and clinical researchers that we talk to, navigating the rapidly expanding number of implementation theories, frameworks and models is completely daunting, and is made worse by unfamiliarity with the language used and inconsistencies in nomenclature. To avoid compounding this problem, we will refer to frameworks, models and theories collectively as “theoretical approaches”, and support our readers to focus on which theoretical approach can best suit the purpose, scope and context of the implementation project.

Theoretical approaches help to shape how we think, which is why they are important. However, most of the time we are not aware of the underlying theories or frameworks we use. In implementation science, theoretical approaches have been developed for different purposes and intended users, and are often underpinned by different philosophical perspectives (see Table 1 ). For instance, some have been designed to assist implementation researchers and enhance the quality of implementation research [ 6 ]; to support improvement and practice development in clinical settings [ 9 ]; to understand factors influencing the implementation of evidence in particular health service settings [ 13 ]; or to ensure comprehensive evaluation or reporting of an implementation program [ 14 ]. Some are based on the underlying assumption that implementation is rational and predictable when relevant factors are accounted for [ 7 , 8 ]; in contrast, others are built on the assumption that implementation is unpredictable, so that ongoing monitoring, flexibility and adaptation are required [ 9 , 10 ]. Some have been designed iteratively, based on the developers’ experience in implementing evidence in real-world settings [ 9 , 15 , 16 ], whereas others have been developed systematically through reviewing and synthesising published literature [ 6 , 7 , 11 ]. Finally, some, but not all, theoretical approaches have been tested, validated and/or adapted over time [ 8 , 10 , 17 ].

Summary of ten commonly applied theoretical approaches to implementation

Commonly used theoretical approaches

Two articles were published in 2017 which presented the most commonly used dissemination and implementation research frameworks cited in academic publications [ 18 ] and the theories most commonly used by implementation scientists [ 2 ]. For pragmatic reasons (acknowledging the systematic approach taken by authors of both manuscripts), we used these two articles to guide the selection of theoretical approaches for discussion in this paper. We included the ten theoretical approaches that were within the top 15 on both lists (i.e. both highly cited in the literature and commonly used in implementation practice) [ 6 – 9 , 11 – 16 , 19 ]. These are presented in Table 1 . We do not imply that these are the best or only theoretical approaches that should be used in implementation projects; simply that they are the most commonly used.

Of note, there are similarities across the theoretical approaches. All consider the ‘new evidence’ to be implemented; the ‘context’ where the evidence will be introduced; the ‘agents or actors’ who will use or apply the new evidence; and the ‘mechanisms’ or processes that actually make the changes happen. Mechanisms can either be people such as change champions, knowledge brokers, opinion leaders, project managers or facilitators or they can be processes such as new protocols, education sessions or audit and feedback cycles, or a combination of both.

It is important to acknowledge that there is no universally agreed-upon theory of successful implementation, nor empirical evidence about the relative advantages of one theoretical approach over another. While this may be frustrating to people new to the area of implementation science, the number of viable theoretical approaches offers clinicians an opportunity to “think outside the box”, and highlights the importance of clarifying what they are seeking to know or change through their project, and then being strategic in selecting a suitable theoretical approach.

Case study part 2 (experience of author AM): So it is clear that I will need to adapt principles and protocols from successful programs in the USA to my local context. But how do I know what the context is? Top picks on Google Scholar for “context assessment implementation science” seem to be the Consolidated Framework for Implementation Research (CFIR) and Promoting Action on Research Implementation in Health Services (PARIHS). Both have nice guides that suggest useful questions to ask. There seems to be quite a lot of overlap, although I am drawn to PARIHS because from my experience I know that someone will need to spend time on the ward and build trust before we start to ask questions and introduce change. I suspect this ‘facilitator’ will be a critical role in this complex intervention because there are several behaviours to change. When I look up “behaviour change implementation science”, the Theoretical Domains Framework (TDF) dominates. Like CFIR and PARIHS, it has a lot of elements, but I can see that they would be helpful for planning or analysing surveys and interviews with patients and staff to clarify what motivates, helps and hinders them. I do feel worried about how I will collect and analyse so much data across the several different groups involved in my project. And once we have a thorough understanding of the context, staff and patients, how will I select strategies? And if it does work, how long will it take until the “new” becomes “normal” so that I can move on to the next problem? My colleague tells me that Normalization Process Theory (NPT) is a useful way to think about this, and I am impressed with the Normalisation of Complex Interventions-Measure Development (NoMAD) tool I find on their website; I can see how I could adapt it to find out whether staff feel the changes are embedded. So where do I go from here?
Do I frame the whole project with KTA, assess context with CFIR, assess the patient and staff views with TDF, adopt facilitation as the central element from PARIHS, and then look at how well it has gone using NPT? Am I being thorough or theoretically sloppy? They all look sensible, but I am not sure how to use any of them and I am worried it is going to add a whole lot of work to a complicated project.

How the theoretical approaches have been used

Recent work investigating how theoretical approaches are selected suggests that theories are not selected to suit the purpose of the implementation research; rather theoretical approaches that are familiar to researchers tend to be used, regardless of the aim of the project [ 2 ]. To explore how theoretical approaches have been applied in the literature to date, we searched for and identified review papers for 6 of our 10 theoretical approaches: KTA [ 20 ], the Reach, Effectiveness, Adoption, Implementation, and Maintenance Framework (RE-AIM) [ 21 ], CFIR [ 22 ], NPT [ 23 ], PARIHS [ 24 ] and TDF [ 25 ]. (For details of these review papers, see Additional file 1 : Table S1).

The overall message from these reviews is that theoretical approaches are not being utilised to their full potential. Although many approaches were developed to prospectively design and plan implementation strategies, they are overwhelmingly applied retrospectively to evaluate or interpret findings from a completed implementation project [ 22 – 24 ]. Further, the components of the theoretical approaches (such as coding systems or reporting dimensions) tend not to be applied consistently, with some users choosing to apply only particular theoretical components [ 21 , 22 ], or applying components in different ways than the developers intended [ 21 ].

These findings again suggest that there is no agreed “best”, or even “easiest”, theory to apply, and that even implementation researchers may need to take a pragmatic approach to the use of theory in complex real-world projects. This reflects the relative immaturity of the field, but it also provides opportunities for clinical and research partners to contribute to advancing our knowledge of how theory is selected and adapted for practical use. To support thoughtful use of theory, and drawing on our experience and interactions with clinical and academic staff new to implementation, we offer some practical guidance on how to choose a theory and how to use it effectively to support an implementation project.

How do you select the theoretical approach for your implementation project?

Research reporting guidelines for implementation studies, including Standards for Reporting Implementation Studies (STaRI) [ 26 ] and Template for Intervention Description and Replication (TIDieR) [ 27 ] specify that the rationale and theory underpinning implementation strategies should be reported. Some researchers also recommend that the reason for selecting a particular theoretical approach should be justified [ 2 , 24 ].

We acknowledge that there will always be multiple questions that could be posed for each implementation project, each of which could be approached from different theoretical perspectives. Below we provide some prompts to assist clinicians and clinical researchers to select a theoretical approach that can value-add to different implementation projects, rather than simply citing a theoretical approach in order to meet a reporting guideline. Clinicians tend to be pragmatic; they generally are not motivated by concepts like theoretical purity, they just want things to work. We emphasise that there is an “art” to selecting and applying theoretical approaches – these prompts need to be applied and considered alongside a clinician or researcher’s experience and skill and the nuances of the implementation project.

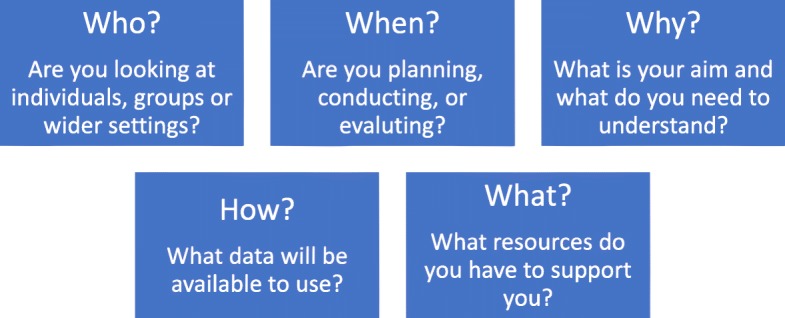

In our experience, clinicians are often anxious that they will select the ‘wrong’ theory. We reiterate that there is no precise formula for choosing a theoretical approach. One important thing to consider in theory selection is the goodness-of-fit, which is determined by each study’s needs and aims, rather than there being a ‘wrong’ choice. The following are suggested questions that could be considered to identify which theoretical approach is particularly appropriate or useful for different implementation projects (see Fig. 1 ).

Five questions to help select a theoretical approach

Who are you working with?

Are you working with individuals who have complete autonomy, are you working with a team, or are you working with an entire health service? Almost all implementation studies will inevitably touch on different organisational levels (micro-, meso- and macro-level implementation), so consider the fit of the theoretical approaches to the organisational level where your project is positioned, and whether more than one approach is required to guide implementation at different levels. Some approaches are particularly concerned with individual experiences or behaviours (for example, TDF), others with group interaction or collective working (for example, NPT; Klein) and others encompass the broader contextual factors impacting across a wider setting or service (for example, PARIHS).

When in the process are you going to use theory?

The point in time of the implementation project may be another factor guiding theoretical approach selection. Some approaches lend themselves particularly to the design and planning of an implementation strategy (for example, Exploration, Preparation, Implementation and Sustainment (EPIS); Proctor; Interconnected Systems Framework (ISF); TDF in conjunction with Behaviour Change Wheel), others to tracking the development of a project (for example, KTA), and others to planning an evaluation and defining outcome measures to assess implementation success (for example, RE-AIM).

Why are you applying a theory?

The aims and intended outcomes of each study should be considered, as different theoretical approaches provide different ‘pay-offs’ in terms of the understanding gained. Different theoretical approaches can be used to measure achievement of a specific change (for example, TDF; ISF), to generate a better understanding of barriers and facilitators to inform implementation approaches (for example, PARIHS; CFIR), to develop knowledge about an ongoing implementation process (for example, KTA; Proctor), or to provide a framework of relevant implementation outcomes (for example, CFIR; RE-AIM).

How will you collect data?

Choice of theoretical approach may also be informed by what data will be available for analysis. Although ideally data collection would be designed with a particular approach in mind so that data are collected to answer the questions of interest, we are aware that in practice clinicians need to work with the resources that are available to them. For example, clinicians may have access to routinely collected outcome data which could be evaluated using the constructs in RE-AIM, but these same data might provide limited insight into the underlying mechanisms of action that NPT explores. Similarly, many services routinely collect qualitative data about professional or patient experiences which could be explored using an approach such as NPT, but these data would be unlikely to achieve a satisfactorily robust evaluation using the questions posed by RE-AIM. Again, we reiterate that this paper is not written as a prescriptive piece (i.e. we are not advocating that RE-AIM should be used to guide analysis of all projects with outcome data and NPT used to analyse projects with available patient and professional experience data) but these examples are given to illustrate the importance of careful selection of theoretical approach.

What resources are available?

The experience of the people who will be involved in coordinating the implementation project should be considered. People who have less experience in implementation projects might require structured tools to collect and analyse data, such as those developed for use with some approaches (for example, CFIR [ 28 ]; NPT [ 29 ]; TDF [ 30 , 31 ]; PARIHS [ 32 ]; RE-AIM [ 33 ]). The number of staff, and the time available to them to participate in the implementation project, should also be considered. For example, facilitation (a core component of PARIHS) requires a substantial time investment from one or more people acting as facilitators, whereas approaches that are more aligned to strategies such as training and audit and feedback sessions (for instance, EPIS) might be easier to implement with fewer staff or less resource support.

Other questions to ask to help in choosing

Does the theoretical approach have particular ‘face validity’ for the implementation project? For example, people interested in facilitation may recognise PARIHS as particularly relevant, or a project aiming to address motivations for behaviour change may lend itself to TDF.

Does the theoretical approach draw your attention to aspects of implementation that you might otherwise have neglected? For example, a large-scale public health intervention may have varied success because of challenges in reaching the most vulnerable populations, or in delivering the intervention as intended across a range of sites; these are features that RE-AIM can capture effectively.

What theoretical approach(es) have studies in your topic area used? This can be helpful in providing worked examples of how particular theoretical approaches have been applied to add understanding to a project.

How can theoretical approaches be used during your implementation project?

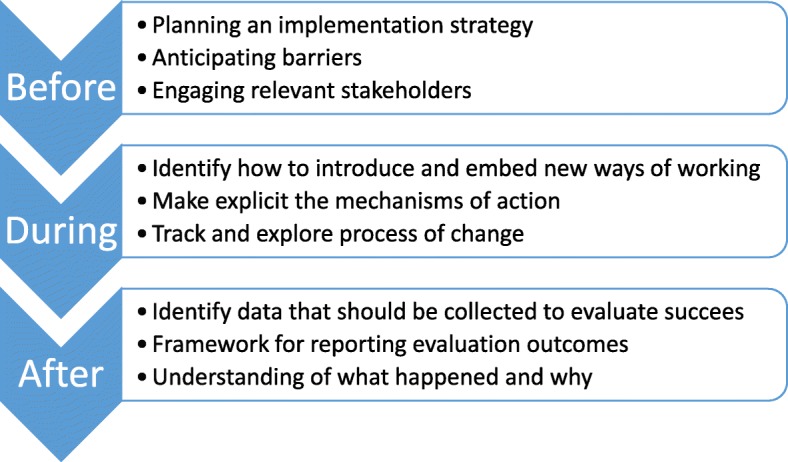

Given that implementation science is designed to have the end-point of improved health processes and outcomes, clinicians and clinical researchers should be pro-active in applying theoretical approaches to guide planning, doing and evaluating implementation projects (see Fig. 2 ).

How a theoretical approach can support a project at different stages

When developing an implementation plan, theory can be used to guide identification of key stakeholders (for example who should ideally participate in the implementation project; who needs to know about the program to ensure organisational support) and to guide the collection of pre-implementation data (for example details about the target practice or evidence to be implemented; details about the key stakeholders; features of the context in which implementation is to occur). Through identifying key stakeholders and collecting pre-implementation information, theoretical approaches can then guide the development of project-specific implementation strategies.

As well as guiding these practical steps of an implementation program, theoretical approaches can be used to predict or explore the process of change, through the development of logic models or program theories. In this way, the intended mechanisms of action can be made explicit, which can be useful when communicating with the key stakeholders, as well as exposing any hidden assumptions which may influence the success of the project.

Theoretical approaches can be used during an implementation program to guide how to introduce, embed and sustain new practices, with varying levels of guidance offered by the different approaches.

And lastly, theoretical approaches can be used to evaluate the success (or otherwise) of an implementation program through guiding what data should be collected, how results should be reported, and providing structure to guide analysis. Depending on the theoretical approach chosen, analysis may focus on the evidence being implemented, the people involved, the context in which implementation occurred, the process of implementation itself or interactions between these different factors.

Case study part 3 (experience of author AM): I choose PARIHS because I need to work with a whole range of staff, with different views and roles within this complex intervention, and I can see the need for facilitation. I am mostly interested in something to guide the “doing”, and as my colleague and I begin to work with the first ward, we find that using a lens of implementation science helps us to understand more about what is and isn’t working, so that instead of getting frustrated we can reflect more objectively and search for flexible solutions. On the first ward we also use the framework for reflection and for guiding a simple evaluation [ 34 ], and this provides useful structure for planning and adapting our approach on the next ward, where the people and context differ in important ways. This then gives us confidence to start training other facilitators to “read” the local teams and context as we conduct a large funded trial across several hospitals [ 35 ], providing evidence of transferability that will be critical for spread to improve outcomes at scale. We are able to capture more consistent data about the different wards we are working on, which helps us understand inconsistencies in our results and identify the most important factors that predict which wards can implement this program successfully, so that we can target scarce resources. We are also able to collect data about the facilitation process, and how facilitators learn this role. We find that as we become more familiar with PARIHS, it becomes more useful for planning, doing and evaluating our improvements. But we are also involved in projects for which this framework is too complicated. Our experience makes us more confident to look for and try out other theoretical approaches that we think will suit those projects better, and help us achieve, and importantly understand, our outcomes.

Conclusions

We have written this paper, based on our collective clinical and research experience, to assist clinicians and clinical researchers to plan, conduct and evaluate implementation projects. Ours is a pragmatic guide based on our experiences; we look forward to work by others in the field who are using empirical research methods to investigate how to select and apply theoretical approaches. We anticipate ongoing developments and refinements in implementation theory, so it is important to recognise the great opportunities that currently exist for the implementation research and clinical communities to come together and more explicitly co-design interventions based on sets of theoretical assumptions and predictions that can inform implementation projects. Imagine conversations around theory selection that connect with clinicians’ sense of their context and the challenges they face, their history and resources, and their understanding of the cultural barriers to and drivers of change. These are all important factors that need to be integrated into any ‘proposed theory of implementation’ generated by clinicians and their research partners.

We planned this paper to help clinicians and clinical researchers become more familiar with the different theoretical approaches to implementation, understand their rationale and existing usage, and have the confidence to apply theory to implementation projects. We encourage clinicians and novice researchers to be open-minded and, at the same time, to trust their instincts when selecting a theoretical approach for an implementation project, while considering how well the different approaches fit the aims, scope and resources available. In this way, the most useful approach will be applied, rather than a familiar or previously applied approach that does not add value to the project at hand. We urge clinicians to consider and select the theoretical approach(es) prospectively, so that they are of most benefit to the project, and we encourage everyone involved in implementation projects to reflect on and share their experiences of whether (and how) the theoretical approach contributed to the conduct and success of the project. In doing so, clinicians, clinical researchers and implementation scientists can collectively close the gap between espoused theory and theory in use, which can help our community of health professionals and researchers refine our thinking. This in turn will contribute to more nuanced advice on how to address evidence-practice gaps and, ultimately, to better health outcomes.

Additional file

Table S1. Review papers about use of theoretical approaches. This table provides summaries of published manuscripts which review use of 6 theoretical approaches. (DOCX 15 kb)

Acknowledgements

We thank Heilok Cheng for assistance in preparing the manuscript for submission.

EAL received salary support from an NHMRC Early Career Fellowship (1138515). The funding body had no input into the design of the study, the collection, analysis and interpretation of data, or the writing of the manuscript.

Availability of data and materials

Not applicable.

Abbreviations

CFIR: Consolidated framework for implementation research

EPIS: Exploration, preparation, implementation and sustainment

i-PARIHS: Integrated promoting action on research implementation in health services

ISF: Interactive systems framework

KTA: Knowledge to action

NHMRC: National Health and Medical Research Council

NoMAD: Normalisation of complex interventions-measure development

NPT: Normalization process theory

PARIHS: Promoting action on research implementation in health services

RE-AIM: Reach, effectiveness, adoption, implementation, and maintenance

StaRI: Standards for reporting implementation studies

TDF: Theoretical domains framework

TIDieR: Template for intervention description and replication

TRIP: Translating research into practice

Authors’ contributions

EAL, AM, ALK and GH conceptualised the idea and prepared the preliminary draft outline; all authors wrote sections of the manuscript, and all authors read and approved the final draft.

Ethics approval and consent to participate

Consent for publication

Competing interests

ALK and GH are developers of the PARIHS framework and produced its refined version, the i-PARIHS framework.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Elizabeth A. Lynch

Alison Mudge

Sarah Knowles

Alison L. Kitson

Sarah C. Hunter

Gill Harvey

- 1. Eccles MP, Mittman BS. Welcome to implementation science. Implement Sci. 2006;1(1):1. doi: 10.1186/1748-5908-1-1.

- 2. Birken SA, Powell BJ, Shea CM, Haines ER, Alexis Kirk M, Leeman J, Rohweder C, Damschroder L, Presseau J. Criteria for selecting implementation science theories and frameworks: results from an international survey. Implement Sci. 2017;12(1):124. doi: 10.1186/s13012-017-0656-y.

- 3. Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10:53. doi: 10.1186/s13012-015-0242-0.

- 4. Phillips CJ, Marshall AP, Chaves NJ, Jankelowitz SK, Lin IB, Loy CT, Rees G, Sakzewski L, Thomas S, To TP, et al. Experiences of using the theoretical domains framework across diverse clinical environments: a qualitative study. J Multidiscip Healthc. 2015;8:139–146. doi: 10.2147/JMDH.S78458.

- 5. Implementation Guide. Department of Veterans Health Administration, Health Services Research & Development, Quality Enhancement Research Initiative; updated 2013.

- 6. Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50. doi: 10.1186/1748-5908-4-50.

- 7. Michie S, Johnston M, Abraham C, Lawton R, Parker D, Walker A. Making psychological theory useful for implementing evidence based practice: a consensus approach. Qual Saf Health Care. 2005;14(1):26–33. doi: 10.1136/qshc.2004.011155.

- 8. Cane J, O’Connor D, Michie S. Validation of the theoretical domains framework for use in behaviour change and implementation research. Implement Sci. 2012;7(1):37. doi: 10.1186/1748-5908-7-37.

- 9. Kitson A, Harvey G, McCormack B. Enabling the implementation of evidence based practice: a conceptual framework. Qual Health Care. 1998;7:149–158. doi: 10.1136/qshc.7.3.149.

- 10. Harvey G, Kitson A. PARIHS revisited: from heuristic to integrated framework for the successful implementation of knowledge into practice. Implement Sci. 2016;11:33. doi: 10.1186/s13012-016-0398-2.

- 11. Graham ID, Logan J, Harrison MB, Straus SE, Tetroe J, Caswell W, Robinson N. Lost in knowledge translation: time for a map? J Contin Educ Heal Prof. 2006;26(1):13–24. doi: 10.1002/chp.47.

- 12. May C, Finch T. Implementing, embedding, and integrating practices: an outline of normalization process theory. Sociology. 2009;43(3):535–554. doi: 10.1177/0038038509103208.

- 13. Wandersman A, Duffy J, Flaspohler P, Noonan R, Lubell K, Stillman L, et al. Bridging the gap between prevention research and practice: the interactive systems framework for dissemination and implementation. Am J Community Psychol. 2008;41:171–181. doi: 10.1007/s10464-008-9174-z.

- 14. Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89:1322–1327. doi: 10.2105/AJPH.89.9.1322.

- 15. Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Admin Pol Ment Health. 2011;38:4–23. doi: 10.1007/s10488-010-0327-7.

- 16. Klein KJ, Sorra JS. The challenge of innovation implementation. Acad Manag Rev. 1996;21(4):1055–1080.

- 17. Glasgow RE, Klesges LM, Dzewaltowski DA, Estabrooks PA, Vogt TM. Evaluating the impact of health promotion programs: using the RE-AIM framework to form summary measures for decision making involving complex issues. Health Educ Res. 2006;21:688–694. doi: 10.1093/her/cyl081.

- 18. Skolarus TA, Lehmann T, Tabak RG, Harris J, Lecy J, Sales AE. Assessing citation networks for dissemination and implementation research frameworks. Implement Sci. 2017;12(1):97. doi: 10.1186/s13012-017-0628-2.

- 19. Proctor EK, Landsverk J, Aarons GA, Chambers D, Glisson C, Mittman B. Implementation research in mental health services: an emerging science with conceptual, methodological, and training challenges. Admin Pol Ment Health. 2009;36:24–34. doi: 10.1007/s10488-008-0197-4.

- 20. Field B, Booth A, Ilott I, Gerrish K. Using the knowledge to action framework in practice: a citation analysis and systematic review. Implement Sci. 2014;9:172. doi: 10.1186/s13012-014-0172-2.

- 21. Gaglio B, Shoup JA, Glasgow RE. The RE-AIM framework: a systematic review of use over time. Am J Public Health. 2013;103(6):e38–e46. doi: 10.2105/AJPH.2013.301299.

- 22. Kirk MA, Kelley C, Yankey N, Birken SA, Abadie B, Damschroder L. A systematic review of the use of the consolidated framework for implementation research. Implement Sci. 2016;11(1):72. doi: 10.1186/s13012-016-0437-z.

- 23. McEvoy R, Ballini L, Maltoni S, O’Donnell CA, Mair FS, MacFarlane A. A qualitative systematic review of studies using the normalization process theory to research implementation processes. Implement Sci. 2014;9:2. doi: 10.1186/1748-5908-9-2.

- 24. Helfrich CD, Damschroder LJ, Hagedorn HJ, Daggett GS, Sahay A, Ritchie M, Damush T, Guihan M, Ullrich PM, Stetler CB. A critical synthesis of literature on the promoting action on research implementation in health services (PARIHS) framework. Implement Sci. 2010;5(1):82. doi: 10.1186/1748-5908-5-82.

- 25. Francis JJ, O’Connor D, Curran J. Theories of behaviour change synthesised into a set of theoretical groupings: introducing a thematic series on the theoretical domains framework. Implement Sci. 2012;7:35. doi: 10.1186/1748-5908-7-35.

- 26. Pinnock H, Barwick M, Carpenter CR, Eldridge S, Grandes G, Griffiths CJ, Rycroft-Malone J, Meissner P, Murray E, Patel A, et al. Standards for reporting implementation studies (StaRI) statement. BMJ. 2017;356:i6795.

- 27. Hoffmann TC, Glasziou PP, Boutron I, Milne R, Perera R, Moher D, Altman DG, Barbour V, Macdonald H, Johnston M, Lamb SE, Dixon-Woods M, McCulloch P, Wyatt JC, Chan A-W, Michie S. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ. 2014;348:g1687. doi: 10.1136/bmj.g1687.

- 28. Consolidated Framework for Implementation Research [http://www.cfirguide.org].

- 29. Normalization Process Theory On-line Users’ Manual, Toolkit and NoMAD instrument [http://www.normalizationprocess.org].

- 30. Michie S, Atkins L, West R. The behaviour change wheel: a guide to designing interventions. Great Britain: Silverback Publishing; 2014.

- 31. Atkins L, Francis J, Islam R, O’Connor D, Patey A, Ivers N, et al. A guide to using the theoretical domains framework of behaviour change to investigate implementation problems. Implement Sci. 2017;12:77. doi: 10.1186/s13012-017-0605-9.

- 32. Harvey G, Kitson A. Implementing evidence-based practice in healthcare: a facilitation guide. Abingdon, Oxon: Routledge; 2015.

- 33. RE-AIM.org [http://www.re-aim.org].

- 34. Mudge AM, McRae P, Cruickshank M. Eat, walk, engage: an interdisciplinary collaborative model to improve care of hospitalized elders. Am J Med Qual. 2015;30:5–13. doi: 10.1177/1062860613510965.

- 35. Mudge AM, Banks MD, Barnett AG, Blackberry I, Graves N, Green T, et al. CHERISH (collaboration for hospitalised elders reducing the impact of stays in hospital): protocol for a multi-site improvement program to reduce geriatric syndromes in older inpatients. BMC Geriatr. 2017;17:11. doi: 10.1186/s12877-016-0399-7.
