
Design of Experiments (DOE): Applications and Benefits in Quality Control and Assurance

Submitted: 09 June 2023 Reviewed: 24 November 2023 Published: 23 February 2024

DOI: 10.5772/intechopen.113987


From the Edited Volume

Quality Control and Quality Assurance - Techniques and Applications

Edited by Sayyad Zahid Qamar and Nasr Al-Hinai



This chapter explores the applications and benefits of Design of Experiments (DOE) in the context of quality control and quality assurance. DOE is a statistical methodology that enables researchers and practitioners to systematically investigate and optimize processes, identify critical factors affecting quality, and reduce variability and waste. The chapter begins with an overview and definitions of DOE, covering its history, its types, the steps involved in conducting a DOE study, and its key components. It then examines the specific applications of DOE in quality control and assurance and their importance across various industries and sectors, demonstrating how DOE can be applied to optimize products and processes, reduce defects and variation, improve quality, implement Six Sigma, and validate and verify processes. Furthermore, the chapter addresses challenges and considerations in implementing DOE in real-world scenarios, such as resource constraints, experimental constraints, and data analysis complexities. Finally, it provides basic information on software tools commonly used in DOE.

- design of experiments (DOE)

- quality control

- process variability

- optimization

Author Information

Sheriff Lamidi*

- Lagos State University of Science and Technology (Formerly Lagos State Polytechnic), Lagos, Nigeria

Rafiu Olalere

Adekunle Yekinni, Khairat Adesina

*Address all correspondence to: [email protected]

1. Introduction

Quality control and assurance are crucial aspects of any manufacturing or industrial process. Ensuring high-quality products and services is essential for customer satisfaction, brand reputation, and overall business success. Quality is a measure of how closely a product conforms to its design specifications, or of the ability of a product or service to satisfy user requirements. Together, quality assurance and quality control help deliver a defect-free product or service. Quality assurance focuses on preventing defects by ensuring that the approaches, techniques, methods, and processes designed for a project are implemented correctly. Quality control, on the other hand, focuses on identifying defects by checking that the approaches, techniques, methods, and processes designed for the project are followed correctly [ 1 ]. According to [ 1 ], quality assurance is process-oriented and a managerial tool, whereas quality control is product-oriented and a remedial tool. One powerful tool used in quality control and assurance is the design of experiments (DOE). Engineers and technologists often use DOE methodologies for applications ranging from the design of new products, design improvement, and maintenance to the control and improvement of manufacturing processes and the maintenance and repair of products [ 2 , 3 , 4 ]. DOE is a statistical method for planning and conducting experiments. It is used to identify the factors that affect a process and to determine the optimal levels of those factors, as shown in Figure 1. DOE can be used to improve the quality of products and processes, reduce costs, and increase efficiency [ 5 ]. This chapter explores the applications and benefits of DOE in quality control and assurance.
Businesses and manufacturing companies can use DOE in a variety of ways to differentiate themselves from the competition, whether by continually redesigning their products or by creating new products to establish a presence in other markets. First, DOE can be used to identify the factors that most affect the quality of a product. By understanding which factors are most important, businesses can focus their improvement efforts on those factors, which can lead to a product that is more reliable, durable, and user-friendly than competing products. Second, DOE can be used to reduce the cost of manufacturing a product. By using DOE methodologies to optimize manufacturing processes and find the most efficient way to produce a product, businesses can improve product quality while saving on labor, materials, and other costs [ 6 ]. This can allow a lower price, which makes the product more competitive. Third, DOE can be used to develop new products that meet the needs of a specific market. By understanding the needs of the target market, businesses can develop products that are more likely to succeed, helping them gain a foothold in new markets and increase their market share.

Figure 1. Design of experiments (DOE).

1.1 Objective of the chapter

The objective of this chapter is to provide an in-depth understanding of DOE and its applications in quality control and assurance. We explore various experimental designs, statistical techniques, and methodologies commonly used in DOE. Additionally, we discuss its practical applications across various fields and the benefits DOE offers for ensuring and improving quality standards.

1.2 An overview and definitions of design of experiments (DOE)

Definitions of Design of Experiments (DOE): DOE is a statistical methodology used to systematically plan, conduct, analyze, and interpret experiments to obtain valid and reliable results. It allows researchers to efficiently explore and identify the significant factors influencing the performance of a process or product. In DOE, input factors (variables) are deliberately controlled in order to determine how strongly they are related to the outputs (responses), as illustrated in Figure 1, so as to ensure product or process quality. DOE studies are usually carried out in five stages [ 7 , 8 ], as shown in Figure 2.

Figure 2. Five stages of DOE.

1.3 History of DOE

DOE has its roots in the work of Sir Ronald Fisher, who developed the basic principles of DOE in the early twentieth century. Fisher’s work was initially applied to agricultural research, but it was soon adapted for use in other fields, including manufacturing, engineering, and medicine [ 9 ]. Since then, many scientists and statisticians have contributed to DOE development and its application in different fields [ 9 , 10 , 11 , 12 ].

1.4 Types of DOE

There are many different types of DOE, each with its own strengths and weaknesses [ 8 , 13 ]. The best type to use depends on the specific situation. Considerations include the number of factors, the number of levels for each factor, the desired level of confidence, and time and budget constraints ( Figure 3 ) [ 10 , 14 , 15 , 16 ].

Figure 3. Types of DOE.

The most common types of DOE are:

1.4.1 Full factorial designs

These designs involve testing all possible combinations of factors. For example, if there are two factors with two levels each, there would be four possible combinations (2 × 2 = 4). Full factorial designs are the most comprehensive, but they can also be the most time-consuming and expensive.
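The run count of a full factorial is the product of the numbers of levels of all factors. The short Python sketch below enumerates every treatment combination with `itertools.product`; the factor names and levels are hypothetical placeholders, not values from a particular study.

```python
from itertools import product


def full_factorial(levels_per_factor):
    """Return every combination of factor levels, one dict per run.

    levels_per_factor maps each factor name to the levels to be tested.
    """
    names = list(levels_per_factor)
    level_lists = [levels_per_factor[name] for name in names]
    return [dict(zip(names, combo)) for combo in product(*level_lists)]


# Hypothetical example: two factors with two levels each -> 2 x 2 = 4 runs.
design = full_factorial({"temperature": ["low", "high"],
                         "pressure": ["low", "high"]})
for run in design:
    print(run)

# Run counts multiply: three factors at two levels need 2**3 = 8 runs,
# and a 3-level x 2-level design needs 3 x 2 = 6 runs.
print(len(full_factorial({"A": [-1, 1], "B": [-1, 1], "C": [-1, 1]})))
print(len(full_factorial({"x": [1, 2, 3], "y": ["a", "b"]})))
```

With k two-level factors the design needs 2^k runs, which is why full factorials become expensive as more factors are added.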

1.4.2 Screening/fractional factorial designs

Fractional factorial experiments are a type of factorial experiment that uses fewer experimental runs than a full factorial design. These designs involve testing a subset of the possible combinations of factors. Fractional factorial designs are less comprehensive than full factorial designs, but they can save time and money.
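As an illustration, the sketch below builds the smallest common fractional factorial, a 2^(3-1) half fraction, in Python. It assumes coded levels -1/+1 and the generator C = AB (defining relation I = ABC), a standard textbook choice rather than one prescribed in this chapter: factors A and B form a full 2^2 design and the third column is computed from them, so three factors are studied in 4 runs instead of 8.

```python
from itertools import product


def half_fraction_2_3():
    """2^(3-1) fractional factorial in coded units (-1 = low, +1 = high).

    A and B form a full 2^2 factorial; C is set by the generator C = A*B.
    """
    return [(a, b, a * b) for a, b in product((-1, 1), repeat=2)]


design = half_fraction_2_3()
print(" A  B  C")
for a, b, c in design:
    print(f"{a:+d} {b:+d} {c:+d}")

# The saving has a price: the C column is identical to the A*B interaction
# column, so the C main effect and the AB interaction cannot be separated.
print(all(c == a * b for a, b, c in design))  # True
```

Each column is still balanced (two runs at each level), so main effects can be estimated, but only under the assumption that the aliased interactions are negligible.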

1.4.3 Response surface methodology (RSM) designs

These designs are used to study the relationship between a response variable and multiple factors. RSM fits a mathematical model, typically a first- or second-order polynomial, to the response variable; the fitted model can then be used to identify the optimal levels of the factors and to predict the response for new combinations of factor levels.
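To make the RSM workflow concrete, the sketch below (Python with NumPy) builds a rotatable central composite design for two coded factors, fits a full second-order polynomial by least squares, and solves for the stationary point of the fitted surface. The response function is hypothetical and noise-free, used only so the fit can be checked; in practice the responses come from running the experiment.

```python
from itertools import product

import numpy as np


def central_composite(k=2, n_center=3):
    """Rotatable central composite design (CCD) in coded units."""
    alpha = (2 ** k) ** 0.25  # axial distance that makes the design rotatable
    factorial = [list(p) for p in product((-1.0, 1.0), repeat=k)]
    axial = []
    for i in range(k):
        for sign in (-1.0, 1.0):
            point = [0.0] * k
            point[i] = sign * alpha
            axial.append(point)
    center = [[0.0] * k for _ in range(n_center)]
    return np.array(factorial + axial + center)


def quadratic_model_matrix(X):
    """Columns: 1, x1, x2, x1*x2, x1^2, x2^2 (full second-order model)."""
    x1, x2 = X[:, 0], X[:, 1]
    return np.column_stack([np.ones(len(X)), x1, x2, x1 * x2, x1 ** 2, x2 ** 2])


def hypothetical_response(X):
    """Illustrative process with its maximum at x1 = 0.5, x2 = -0.25."""
    x1, x2 = X[:, 0], X[:, 1]
    return 80.0 - 2.0 * (x1 - 0.5) ** 2 - 4.0 * (x2 + 0.25) ** 2


X = central_composite()
y = hypothetical_response(X)
beta, *_ = np.linalg.lstsq(quadratic_model_matrix(X), y, rcond=None)
b0, b1, b2, b12, b11, b22 = beta

# Stationary point of y = b0 + b'x + x'Bx: set the gradient b + 2Bx to zero.
B = np.array([[b11, b12 / 2], [b12 / 2, b22]])
x_stationary = -0.5 * np.linalg.solve(B, np.array([b1, b2]))

# The signs of B's eigenvalues classify the stationary point.
eig = np.linalg.eigvalsh(B)
if np.all(eig < 0):
    kind = "maximum"
elif np.all(eig > 0):
    kind = "minimum"
else:
    kind = "saddle point"

print("fitted coefficients:", np.round(beta, 3))
print("stationary point (coded):", np.round(x_stationary, 3), kind)
```

The fitted stationary point is in coded units; converting it back to natural units uses the same center and half-range that were used to code the factors.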

1.4.4 Mixture designs

These designs are used to study the relationship between a response variable and multiple components that are mixed together, where the component proportions must sum to a fixed total (usually 1, or 100%). Mixture designs are often used in the food and beverage industry to understand how the different ingredients in a product affect its taste, texture, and other properties.
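A common way to lay out a mixture experiment is the {q, m} simplex-lattice design, in which each of q component proportions takes the values 0, 1/m, ..., 1 and only blends summing to 1 are kept. The Python sketch below is one straightforward way to enumerate it; it uses exact fractions so the sum-to-one check is not affected by floating-point rounding. The three-component, m = 2 example is hypothetical.

```python
from fractions import Fraction
from itertools import product


def simplex_lattice(q, m):
    """{q, m} simplex-lattice mixture design.

    Each of the q component proportions takes a value in 0, 1/m, ..., 1,
    and only blends whose proportions sum to exactly 1 are kept.
    """
    steps = [Fraction(i, m) for i in range(m + 1)]
    return [blend for blend in product(steps, repeat=q) if sum(blend) == 1]


# Hypothetical three-ingredient product studied on a {3, 2} lattice:
# three pure blends plus three 50:50 binary blends.
design = simplex_lattice(q=3, m=2)
for blend in design:
    print("  ".join(f"{float(x):.2f}" for x in blend))
print(len(design))  # 6
```

Because the proportions are constrained to sum to 1, the components cannot be varied independently as the factors of a factorial design can, which is why mixture experiments use designs of their own.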

1.4.5 Taguchi designs

Taguchi designs are a type of fractional factorial design, laid out as orthogonal arrays, that was developed specifically for quality improvement. They are often used in manufacturing, where it is important to produce products that consistently meet the required quality standards.
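Taguchi designs are laid out as orthogonal arrays. The Python sketch below writes out the standard L4(2^3) array (4 runs, up to three two-level factors, levels labelled 1 and 2) and checks the property that makes it orthogonal: in every pair of columns, each combination of levels appears equally often. The `is_orthogonal` checker is a hypothetical helper written for this illustration.

```python
from collections import Counter
from itertools import combinations, product

# Standard Taguchi L4(2^3) orthogonal array: rows are runs, columns are factors.
L4 = [
    (1, 1, 1),
    (1, 2, 2),
    (2, 1, 2),
    (2, 2, 1),
]


def is_orthogonal(array):
    """True if every pair of columns contains each level pair equally often."""
    n_cols = len(array[0])
    for i, j in combinations(range(n_cols), 2):
        counts = Counter((row[i], row[j]) for row in array)
        levels_i = {row[i] for row in array}
        levels_j = {row[j] for row in array}
        expected_pairs = set(product(levels_i, levels_j))
        # Every level pair must occur, and all must occur the same number of times.
        if set(counts) != expected_pairs or len(set(counts.values())) != 1:
            return False
    return True


print(is_orthogonal(L4))  # True
# Counter-example: columns 1 and 2 only ever show (1, 1) and (2, 2).
print(is_orthogonal([(1, 1, 1), (1, 1, 2), (2, 2, 1), (2, 2, 2)]))  # False
```

Three two-level factors are studied in 4 runs instead of the 2^3 = 8 runs of a full factorial, which is the fractional-factorial saving that Taguchi designs exploit.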

1.5 Steps involved in conducting DOE

Define clear objectives.

Select the process variables.

Select a feasible experimental design.

Execute the selected design.

Check that the data are consistent with the experimental assumptions.

Analyze and interpret the results.

Present the results and apply them to decision making.

Draw conclusions.

Figure 4. Steps required in DOE.

2. Key concepts/components of DOE

The following terms, also known as the components of DOE, are commonly used when applying DOE methodology to quality control and assurance:

Factors: Variables that may influence the outcome of an experiment.

Levels: The values at which factors are set during an experiment.

Response Variable: The outcome or output variable that is measured or observed.

Experimental Units: The entities or subjects on which the experiments are conducted.

Treatment: The combination of factor levels applied to an experimental unit.

Replication: Repeating the experiment, or individual runs of it, so that experimental error can be estimated and the precision and reliability of the results are improved.
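The terms above map naturally onto a small data structure. The Python sketch below uses a hypothetical two-factor experiment (factors and levels in coded units, two replicates per treatment, response values made up for illustration) to show how treatments are formed from factor levels, how replication gives an estimate of experimental error, and how a main effect is computed as the difference between the average responses at the high and low levels.

```python
from itertools import product
from statistics import mean, variance

# Factors and their levels (hypothetical; coded -1 = low, +1 = high).
factors = {"speed": (-1, 1), "feed": (-1, 1)}

# Treatments: every combination of factor levels applied to an experimental unit.
treatments = list(product(*factors.values()))

# Response variable measured on two replicate units per treatment.
# These numbers are invented purely for illustration.
responses = {
    (-1, -1): [9.8, 10.2],
    (-1, 1): [7.9, 8.1],
    (1, -1): [13.7, 14.3],
    (1, 1): [12.2, 11.8],
}

treatment_means = {t: mean(responses[t]) for t in treatments}

# With equal replication, averaging the within-treatment variances estimates
# the pure experimental error that replication makes visible.
pooled_error_variance = mean(variance(responses[t]) for t in treatments)


def main_effect(index):
    """Mean response at the high level minus mean response at the low level."""
    high = [m for t, m in treatment_means.items() if t[index] == 1]
    low = [m for t, m in treatment_means.items() if t[index] == -1]
    return mean(high) - mean(low)


for i, name in enumerate(factors):
    print(f"main effect of {name}: {main_effect(i):+.2f}")
print(f"pooled error variance: {pooled_error_variance:.3f}")
```

Comparing each effect with the error variance estimated from the replicates is what allows a real effect to be distinguished from run-to-run noise.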

3. Applications of DOE in quality control and assurance

3.1 Product and process optimization

DOE enables the systematic exploration of various factors and their interactions to optimize product and process performance. By identifying the key factors and their optimal levels, manufacturers can improve quality, reduce costs, and enhance efficiency.

3.2 Defect and variation reduction

DOE helps identify the root causes of defects and variations in a manufacturing process. By conducting experiments and analyzing the results, quality engineers can pinpoint the factors that contribute to defects and develop strategies to reduce or eliminate them.

3.3 Quality improvement and Six Sigma

DOE is an integral part of Six Sigma methodologies, which aim to achieve process excellence and reduce variation. By using DOE, organizations can identify critical process parameters, set optimal levels, and implement strategies to minimize defects and variations, thus improving overall quality.

3.4 Process validation and verification

DOE plays a crucial role in the validation and verification of manufacturing processes. By conducting designed experiments, organizations can gather data on process performance, determine critical process parameters, and establish the robustness and reliability of their processes.

4. Key factors affecting quality optimization processes and waste reduction

Process Design and Standardization: Well-designed processes with clear specifications and standard operating procedures (SOPs) play a vital role in optimizing quality and minimizing waste. Factors such as process layout, equipment selection, workflow efficiency, and error-proofing mechanisms can significantly impact the quality of output and waste generation [ 17 , 18 ].

Quality Control and Monitoring: Effective quality control measures, including robust inspection protocols, real-time monitoring systems, and statistical process control (SPC) techniques, help identify and rectify quality issues promptly. Monitoring critical process parameters and implementing quality control checks at various stages can minimize defects and waste [ 12 , 19 ].

Training and Skill Development: Well-trained and skilled personnel are essential for maintaining quality standards and reducing waste. Adequate training programs that emphasize quality awareness, technical skills, and problem-solving capabilities contribute to consistent quality optimization and waste reduction [ 20 ].

Continuous Improvement and Lean Practices: Embracing continuous improvement methodologies, such as Lean Six Sigma, can drive quality optimization and waste reduction. Tools like value stream mapping, root cause analysis, and Kaizen events enable organizations to identify and eliminate process inefficiencies, defects, and non-value-added activities [ 21 ].
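The statistical process control (SPC) techniques mentioned above under quality control and monitoring can be illustrated with the simplest Shewhart chart. The Python sketch below computes individuals-chart limits from a hypothetical baseline run, estimating the process standard deviation as the average moving range divided by the constant d2 = 1.128 (the standard value for moving ranges of two consecutive points), and then flags new observations outside the ±3σ limits. The data are made up.

```python
from statistics import mean


def individuals_chart_limits(baseline):
    """Return (LCL, center line, UCL) for a Shewhart individuals chart.

    Sigma is estimated as the average moving range divided by d2 = 1.128,
    the bias-correction constant for moving ranges of two observations.
    """
    if len(baseline) < 2:
        raise ValueError("need at least two baseline observations")
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    sigma_hat = mean(moving_ranges) / 1.128
    center = mean(baseline)
    return center - 3 * sigma_hat, center, center + 3 * sigma_hat


def out_of_control(points, limits):
    """Indices of points that fall outside the control limits."""
    lcl, _, ucl = limits
    return [i for i, x in enumerate(points) if x < lcl or x > ucl]


# Hypothetical baseline measurements of a critical dimension (mm).
baseline = [10.1, 9.9, 10.0, 10.2, 9.8, 10.1, 9.9, 10.0]
limits = individuals_chart_limits(baseline)
print("LCL = {:.3f}, CL = {:.3f}, UCL = {:.3f}".format(*limits))

new_points = [10.05, 11.0, 9.95]
print(out_of_control(new_points, limits))  # [1]
```

Where SPC signals that a process has shifted, a designed experiment is one way to find out which process parameters are responsible.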

5. Software tools commonly used in DOE

Minitab: Minitab is a popular statistical software package widely used for DOE. It offers a comprehensive set of DOE tools, including factorial designs, response surface methods, and mixture designs. Minitab provides easy-to-use graphical and statistical analysis features, making it suitable for both beginners and experienced users.

JMP: JMP is a powerful statistical software package developed by SAS. It offers a range of DOE techniques, such as factorial designs, response surface methods, and mixture designs. JMP provides an interactive interface with drag-and-drop capabilities for designing experiments, analyzing data, and visualizing results.

Design-Expert: Design-Expert is a software tool dedicated to DOE. It offers a wide range of experimental design options, including factorial designs, response surface methods, mixture designs, and Taguchi designs. Design-Expert provides advanced graphical and statistical analysis features to facilitate the optimization of processes and product formulations.

R: R is a popular open-source programming language for statistical computing and graphics. It has a rich collection of packages that support DOE, such as the ‘DoE.base’ and ‘rsm’ packages. R provides extensive flexibility and customization options for designing experiments, analyzing data, and performing advanced statistical modeling.

Excel: Microsoft Excel, though not specifically designed for DOE, can be used for simple experimental designs and analysis. It offers basic statistical functions, charts, and data analysis tools that can be utilized for conducting DOE experiments and analyzing results.

6. Examples of fields where DOE has found practical application

6.1 Manufacturing industry

DOE can be used to optimize the process of manufacturing a part, identify the root cause of a quality problem, or reduce process variability, which is itself a measure of quality. It can also be used to identify the causes of defects in a product or to find ways to reduce the time it takes to manufacture a product. For example, consider a manufacturer that wants to produce a machine part from an Al-Si alloy with minimum surface roughness by choosing a combination of three controllable variables: cutting speed, feed rate, and depth of cut. Because these variables can be combined in many ways, DOE can be used to study systematically the effect of the three machining variables on the surface roughness (Ra) of the Al-Si alloy [ 22 , 23 ].
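For the Al-Si turning example, a two-level full factorial in the three machining variables needs 2^3 = 8 runs. The Python sketch below turns that design into a run sheet: coded levels (-1/+1) are converted to machine settings, and the run order is randomized with a fixed seed so the sheet is reproducible. The low/high settings and the column names are hypothetical placeholders, not the values used in [ 22 , 23 ], and the surface roughness column is left empty to be filled in after measurement.

```python
import random
from itertools import product

# Hypothetical low/high settings for each machining variable.
factors = {
    "cutting_speed_m_min": (100.0, 200.0),
    "feed_rate_mm_rev": (0.10, 0.30),
    "depth_of_cut_mm": (0.5, 1.5),
}


def decode(coded, low, high):
    """Convert a coded level (-1 = low, +1 = high) to natural units."""
    center = (low + high) / 2
    half_range = (high - low) / 2
    return center + coded * half_range


def run_sheet(factors, seed=1):
    """2^3 full factorial run sheet in randomized run order."""
    names = list(factors)
    coded_runs = list(product((-1, 1), repeat=len(names)))  # standard order
    order = list(range(len(coded_runs)))
    random.Random(seed).shuffle(order)  # randomize to guard against time trends
    sheet = []
    for run_no, std_index in enumerate(order, start=1):
        settings = {name: decode(level, *factors[name])
                    for name, level in zip(names, coded_runs[std_index])}
        sheet.append({"run": run_no, "std_order": std_index + 1,
                      **settings, "Ra_um": None})  # Ra is measured after the run
    return sheet


for row in run_sheet(factors):
    print(row)
```

Once the measured Ra values are entered, they can be analyzed like any factorial experiment, for example by estimating the main effects and interactions of the three variables.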

In the automotive industry, DOE is used to improve the fuel efficiency of cars and trucks. By identifying the factors that most affect fuel efficiency, businesses can design cars and trucks that use less fuel. This can lead to lower emissions and a reduced cost of ownership for the customer.

In the food industry, DOE is used to improve the taste and texture of food products. By understanding how different factors affect the taste and texture of food, businesses can develop products that are more appealing to consumers. This can lead to increased sales and a stronger brand reputation.

In the pharmaceutical industry, DOE is used to develop new drugs that are more effective and less harmful than existing ones. By understanding how different factors affect the effectiveness and toxicity of drugs, businesses can develop drugs that are more likely to be approved by the relevant regulatory agency. This can lead to increased profits and a better quality of life for patients.

6.2 Engineering

DOE can be used to optimize the design of a car engine or to improve the yield of a chemical reaction [ 10 ]. More generally, it can improve the design of products and processes, reduce costs, and increase efficiency. It can also be used to optimize the design of a bridge, identify the root cause of a failure, or reduce the weight of a product.

DOE can be used to optimize the design of composite materials for specific structural applications. Factors such as fiber type, fiber volume fraction, resin content, and curing parameters can be varied systematically to achieve desired mechanical properties such as strength, stiffness, and impact resistance [ 24 ].

In semiconductor engineering, DOE is used to optimize process parameters to improve yield and quality. Factors such as temperature, pressure, etching time, and gas flow rates can be varied systematically to identify the optimal settings that result in minimal defects and enhanced performance [ 25 ].

6.3 Medicine

DOE can be used to optimize drug formulations to improve the bioavailability of pharmaceutical products. Factors such as excipient composition, drug concentration, and manufacturing parameters can be systematically varied to identify the optimal combination that maximizes drug absorption and efficacy [ 26 ].

DOE can be used to optimize the dosage of a drug, to identify the side effects of a treatment, or to reduce the risk of a disease. In medicine, it helps improve the effectiveness of treatments and reduce the side effects of drugs; for example, it can be used to identify the best dose of a drug for a particular condition or to find ways to reduce a drug's toxicity.

DOE can be utilized to optimize treatment protocols in radiation therapy for cancer patients. Factors such as radiation dose, treatment duration, and beam angles can be systematically varied to identify the optimal combination that maximizes tumor control while minimizing side effects on healthy tissues [ 24 , 27 ].

6.4 Agriculture

DOE can be used to evaluate the effects of fertilizer formulations on crop yield. In the work of [ 17 ], DOE was used to investigate the impact of different fertilizer formulations by varying factors such as the nitrogen, phosphorus, and potassium concentrations in the fertilizer mix. By systematically designing and conducting experiments, the researchers were able to determine the optimal combination of nutrients that maximized crop yield while minimizing the amount of fertilizer required.

DOE can be used to assess the impact of irrigation techniques on water use efficiency. In [ 28 ], the effect of different irrigation techniques on water use efficiency in crop production was evaluated by manipulating factors such as irrigation frequency, irrigation duration, and water application rate. The experiments allowed the researchers to identify the optimal combination of irrigation practices, one that improved water use efficiency without compromising crop yield.

DOE was used in [ 29 ] to optimize plant growth conditions in controlled environments such as greenhouses and growth chambers. Factors such as light intensity, temperature, humidity, and CO₂ levels were systematically varied to determine the optimal combination for plant growth, development, and yield.

6.5 Marketing

DOE can be used to optimize product packaging design elements such as color, shape, size, and labeling to understand their impact on consumer perception and purchase behavior. By systematically varying these factors, marketers can identify the optimal packaging design that maximizes consumer appeal and product sales [ 30 ].

DOE can be employed to evaluate different pricing strategies and their impact on consumer behavior, purchase intent, and profitability. Factors such as price levels, discount offers, and promotional strategies can be systematically varied to determine the optimal pricing strategy that maximizes sales and profitability [ 31 , 32 ].

DOE can be utilized to test and optimize various elements of advertisements, such as visual design, headline, copywriting, and call-to-action. By systematically varying these factors and measuring consumer responses, marketers can identify the optimal combination that maximizes advertisement effectiveness and consumer engagement [ 33 ].

7. Benefits of DOE in quality control and assurance

Efficient Resource Utilization: DOE allows organizations to allocate their resources efficiently by identifying the most influential factors. By focusing on these factors, companies can optimize their processes and achieve significant improvements in quality without unnecessary expenditure.

Cost Reduction: By systematically exploring process factors and their interactions, DOE helps identify cost-effective solutions, such as the most efficient way to produce a product. By reducing defects, eliminating waste, and optimizing process parameters, organizations can save costs associated with rework, scrap, and material consumption.

Improved Quality: DOE can be used to improve the quality of products and processes by identifying the sources of process variability and reducing them.

Increased Efficiency: DOE can be used to increase efficiency by identifying the root causes of problems and by improving the design of products and processes.

Enhanced Decision Making: DOE provides a structured approach to experimentation, resulting in reliable and statistically valid data. This enables informed decision making based on evidence rather than intuition or guesswork. By using DOE, organizations can make data-driven decisions to improve quality and minimize risks.

Faster Time to Market: DOE facilitates the identification of critical process parameters and optimal levels, leading to faster process optimization. By reducing the time required for experimentation and process development, organizations can accelerate product development cycles and bring products to market more quickly.

8. Challenges and considerations in implementing DOE in real-world scenarios

Resource Constraints: Limited resources such as time, budget, and availability of equipment or materials can pose challenges in implementing DOE. Conducting experiments may require significant time and financial investments. It is essential to carefully plan and allocate resources to ensure the feasibility and success of DOE studies [ 19 , 34 ].

Experimental Constraints: Some experiments may face practical constraints due to factors such as safety regulations, ethical considerations, or limitations in the process or system under investigation. Researchers must identify and address these constraints to design experiments that are feasible and align with regulatory requirements [ 21 , 35 ].

Data Analysis Complexities: Analyzing experimental data and interpreting the results can be challenging, particularly when dealing with complex designs or large datasets. Specialized statistical knowledge may be required to properly analyze and draw meaningful conclusions from the data obtained through DOE. Consideration should be given to the appropriate statistical methods and software tools for analyzing the experimental results [ 35 , 36 ].

Planning for Interactions and Confounding: Identifying and addressing potential interactions among factors and confounding effects can be complex in DOE. Interactions and confounding can affect the interpretation of experimental results and lead to incorrect conclusions. Careful planning and appropriate design strategies can help mitigate these challenges, for example using a full factorial design when interactions must be estimated, or choosing a fractional factorial design whose resolution is high enough that the effects of interest are not confounded with one another [ 16 , 37 , 38 ].
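Confounding in a two-level fractional factorial can be worked out before any runs are made: the effects aliased with a given effect are obtained by multiplying it by each word of the defining relation, with any squared letter cancelling (A × A = I). The Python sketch below implements that rule on effect "words" such as "AB". It assumes factors are named with single capital letters other than I, and the generators in the example are illustrative choices.

```python
from itertools import combinations


def multiply(word1, word2):
    """Multiply two effect words; repeated letters cancel (A*A = I)."""
    letters = (set(word1) ^ set(word2)) - {"I"}
    return "".join(sorted(letters)) or "I"


def defining_relation(generators):
    """All words of the defining relation: the generators and their products."""
    words = set()
    for r in range(1, len(generators) + 1):
        for subset in combinations(generators, r):
            word = "I"
            for g in subset:
                word = multiply(word, g)
            words.add(word)
    words.discard("I")
    return sorted(words, key=lambda w: (len(w), w))


def alias_of(effect, words):
    """Effects that cannot be separated from `effect` in this fraction."""
    return [multiply(effect, w) for w in words]


def resolution(words):
    """Design resolution: length of the shortest word in the defining relation."""
    return min(len(w) for w in words)


# 2^(4-1) with D = ABC, i.e. I = ABCD (resolution IV).
half = defining_relation(["ABCD"])
for effect in ["A", "B", "AB", "AC", "AD"]:
    print(effect, "=", " = ".join(alias_of(effect, half)))
print("resolution:", resolution(half))

# 2^(5-2) with D = AB and E = AC, i.e. I = ABD = ACE = BCDE (resolution III).
quarter = defining_relation(["ABD", "ACE"])
print("A =", " = ".join(alias_of("A", quarter)))
print("resolution:", resolution(quarter))
```

In the resolution IV half fraction, main effects are clear of two-factor interactions but pairs of two-factor interactions are aliased (AB = CD); in the resolution III quarter fraction, main effect A is aliased with the two-factor interactions BD and CE. Seeing this at the planning stage helps decide whether a larger fraction or a full factorial is needed.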

9. Conclusion

In this chapter, the authors have focused on the applications and benefits of DOE in quality control and assurance. DOE is an important statistical methodology that enables scientists, engineers, researchers, and other professionals to design, develop, and optimize high-quality products and services, and it has applications in many fields. Applying DOE helps ensure high-quality products and services, increases customer satisfaction, makes resource use more efficient, reduces costs, supports better decision making, improves brand reputation, and contributes to overall business success. Given the numerous applications of DOE presented in this chapter, it can be concluded that DOE is a powerful research tool and methodology for quality control and assurance.

Acknowledgments

The authors greatly appreciate the opportunity to have this review chapter included in the book Quality Control and Quality Assurance - Techniques and Applications. We also commend the rigorous peer review process.

- 1. Panigrahi KK. 2023. Available from: https://www.tutorialspoint.com/differences-between-quality-assurance-and-quality-control

- 2. Tanco M, Viles E, Ilzarbe L, Álvarez MJ. Manufacturing industries need Design of Experiments (DoE). In: Proceedings of the World Congress on Engineering. London, UK: Newswood Limited; 2007

- 3. Smith J, Johnson A. Application of design of experiments (DOE) methodologies in engineering and technology. Journal of Engineering and Technology Applications. 2020;15(2):45-62

- 4. Taguchi G. System of Experimental Design. New York: Wiley; 1987

- 5. Dahlgaard JJ, Dahlgaard-Park SM, Boesgaard KM. Enablers of waste management in Danish companies: Training, commitment and motivation. Journal of Cleaner Production. 2007;15(18):1765-1775

- 6. Gutiérres PH, De la Vara SR. Análisis y diseño de experimentos. Journal of Chemical Information and Modeling. 2012. DOI: 10.1017/CBO9781107415324.004

- 7. Wu CFJ. Design of Experiments: Theory and Practice. Hoboken, NJ: Wiley; 2006

- 8. McCool J. Using the Weibull Distribution: Reliability, Modeling, and Inference. New Jersey: John Wiley and Sons; 2012

- 9. Madadian Bozorg N, Leclercq M, Lescot T, Bazin M, Gaudreault N, Dikpati A, et al. Design of experiment and machine learning inform on the 3D printing of hydrogels for biomedical applications. Biomaterials Advances. 2023;153:213533. DOI: 10.1016/j.bioadv.2023.213533

- 10. Ilzarbe L, Álvarez MJ, Viles E, Tanco M. Practical applications of design of experiments in the field of engineering: A bibliographical review. Quality and Reliability Engineering International. 2008;24(4):417-428. DOI: 10.1002/qre.909

- 11. Assia C. Designer’s Guide to Lab Practice. 1st ed. London: Routledge; 2023

- 12. Ebadi M, Mozdianfard MR, Aliabadi M. Employing full factorial design and response surface methodology for optimizing direct contact membrane distillation operational conditions in desalinating the rejected stream of a reverse osmosis unit at Esfahan refinery. Water Supply Journal. 2018;19(2):492-501. DOI: 10.2166/ws.2018.094

- 13. Myers RH, Montgomery DC. Response Surface Methodology: Process and Product Optimization Using Designed Experiments. United Kingdom: John Wiley & Sons; 2016

- 14. Sadowski M. 2022. Available from: https://www.synthace.com/blog/types-of-doe-design-a-users-guide

- 15. Terry WA. Introduction to Experimental Methods. 1st ed. Boca Raton: Taylor and Francis Group; 2023

- 16. Khavekar RS, Hari VS. Analyzing the need for a comparative study of Shainin DoE and traditional DoE tools for deploying six sigma in Indian manufacturing companies. IOP Conference Series: Materials Science and Engineering. 2018;376:012121

- 17. Zhang Y, Yu Z, Wang J, Li Y, He X. Optimization of fertilizer formulations for sustainable agriculture using design of experiments and response surface methodology. Journal of Plant Nutrition. 2018;41(6):713-725

- 18. Yang CH, Huang JT. Process optimization and layout design for electronics manufacturing service industry. Mathematical Problems in Engineering. 2020;2020:1-13

- 19. Montgomery DC. Design and Analysis of Experiments. 8th ed. Hoboken, NJ: Wiley; 2013

- 20. Antony J, Banuelas R. Key ingredients for the effective implementation of six sigma program. Measuring Business Excellence. 2002;6(4):20-27

- 21. Anderson MJ, Whitcomb PJ, Montgomery DC. Design of experiments in quality engineering. In: Encyclopedia of Statistics in Quality and Reliability. 2nd ed. New York City, USA: John Wiley & Sons; 2017. pp. 1-15

- 22. Kasali Aderinmoye A, Sheriff BL. Effect of dry cutting system on surface finish in turning operation of Al-Si alloy. Journal of Multidisciplinary Engineering Science and Technology. 2021;8:10

- 23. Lamidi S, Olaleye N, Bankole Y, Obalola A, Aribike E, Adigun I. Applications of response surface methodology (RSM) in product design, development, and process optimization. In: Response Surface Methodology - Research Advances and Applications. London, UK: IntechOpen; 2023. DOI: 10.5772/intechopen.106763

- 24. Wang Y, Lu H, Zhu Q, Tang Y. Design optimization of composite materials using DOE for stiffness. Polymer Testing. 2019;80:106119

- 25. Lee Y, Kim C, Park S. Optimization of the etching process parameters in plasma etching of Al₂O₃ using a design of experiment. Journal of the Korean Physical Society. 2020;77(3):219-224

- 26. El-Helaly SN, Mostafa SA, Hussein AK. Optimization of theophylline oral disintegrating tablets formulation using design of experiments for enhanced bioavailability. Drug Development and Industrial Pharmacy. 2020;46(1):33-43

- 27. Vicente V, Zerón H, Martínez Á, Palomo A, Garayoa J. Optimization of treatment protocols in radiation therapy using design of experiments: A review. International Journal of Radiation Oncology*Biology*Physics. 2017;99(4):839-846

- 28. Rashid A, Aslam M, Azizullah A, Shah M. Enhancing water use efficiency in wheat crop using different irrigation techniques. Environmental Monitoring and Assessment. 2019;191(1):9

- 29. Körner O, Challa H. Plant phenotyping using DOE and machine learning algorithms in controlled environments. In: Proceedings of the 10th International Conference on Computer Vision Theory and Applications (VISAPP). Vol. 1. Lisbon, Portugal: SciTePress; 2017. pp. 547-554

- 30. Hoegg J, Hofmann J, Meckel K. Packaging aesthetics and consumer preferences: A cross-cultural study using experimental auctions and choice tasks. Journal of Marketing. 2017;81(1):86-101

- 31. Ariely D, Wertenbroch K. Procrastination, deadlines, and performance: Self-control by precommitment. Psychological Science. 2002;13(3):219-224

- 32. Box GEP, Hunter WG, Hunter JS. Statistics for Experimenters: Design, Innovation, and Discovery. New York, USA: Wiley; 2005

- 33. Liu X, Dong Y. Designing optimal advertising messages for different cultures: An experimental study. International Journal of Advertising. 2018;37(4):588-610

- 34. Montgomery DC. Design and Analysis of Experiments. Arizona, USA: John Wiley & Sons; 2017

- 35. Hicks CR, Turner KV. Fundamental Concepts in the Design of Experiments. United Kingdom: Oxford University Press; 1999

- 36. Roberto F, Alberto M, Luca P, Luigi S. Design of experiments and machine learning with application to industrial experiments. Stat Papers. 2023;64:1251-1274. DOI: 10.1007/s00362-023-01437-w

- 37. Box GEP, Hunter WG, Hunter JS. Statistics for Experimenters: Design, Innovation, and Discovery. 2nd ed. Hoboken, NJ: Wiley; 2005

- 38. Grant EL, Leavenworth RS. Statistical Quality Control. New York City, USA: McGraw-Hill Education; 2013

© 2023 The Author(s). Licensee IntechOpen. This chapter is distributed under the terms of the Creative Commons Attribution 3.0 License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Continue reading from the same book

Quality control and quality assurance.

Edited by Prof Dr Sayyad Zahid Qamar

Published: 29 May 2024



- Open access

- Published: 06 August 2019

A Design of Experiments (DoE) Approach Accelerates the Optimization of Copper-Mediated ¹⁸F-Fluorination Reactions of Arylstannanes

- Gregory D. Bowden ORCID: orcid.org/0000-0003-2274-6738 1 ,

- Bernd J. Pichler 1 , 2 &

- Andreas Maurer ORCID: orcid.org/0000-0003-2412-5361 1 , 2

Scientific Reports volume 9, Article number: 11370 (2019)

25k Accesses

102 Citations

4 Altmetric


- Drug development

- Nuclear chemistry

Recent advancements in ¹⁸F radiochemistry, such as the advent of copper-mediated radiofluorination (CMRF) chemistry, have provided unprecedented access to novel, chemically diverse PET probes; however, these multicomponent reactions have brought a new set of complex optimization problems. Design of experiments (DoE) is a statistical approach to process optimization that is used across a variety of industries. It has a number of advantages over the traditionally employed “one variable at a time” (OVAT) approach, such as increased experimental efficiency and the ability to resolve factor interactions and provide detailed maps of a process’s behavior. Here we demonstrate the utility of DoE for the development and optimization of new radiochemical methodologies and novel PET tracer syntheses. Using DoE to construct experimentally efficient factor screening and optimization studies, we were able to identify critical factors and model their behavior with more than two-fold greater experimental efficiency than the traditional OVAT approach. Additionally, the use of DoE allowed us to glean new insights into the behavior of the CMRF of a number of arylstannane precursors. This information has guided our decision-making while developing efficient reaction conditions that suit the unique process requirements of ¹⁸F PET tracer synthesis.

Similar content being viewed by others

Bayesian reaction optimization as a tool for chemical synthesis

Anthropogenic biases in chemical reaction data hinder exploratory inorganic synthesis

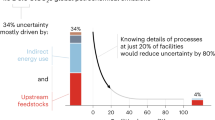

Reducing uncertainties in greenhouse gas emissions from chemical production

Introduction

Positron emission tomography (PET) has become an important imaging technique that is used routinely in clinical practice and as a powerful biomedical research tool 1. PET, as with other nuclear imaging modalities, relies on the appropriate use of well-designed radiotracers: molecules that are labelled with a positron-emitting radionuclide and are designed to target and accumulate in specific organs, cells, diseased tissues, and/or biochemical pathways, providing physiological and molecular information about the subject 2. The accessible and flexible design and radiosynthesis of novel tracers are a cornerstone of PET imaging as a preclinical research technique, and the efficient and scalable development and production of new PET tracers is vital to the advancement of PET imaging as a clinically relevant tool 3.

Of the many radioisotopes that can be readily produced with small medical cyclotrons, ¹⁸F has become particularly popular for medical imaging due to its almost ideal nuclear properties. Its decay mode (97% by positron emission), short positron range in tissue, high specific activity and practical 110-minute half-life have made it an attractive isotope for both clinical PET imaging and preclinical research and development 2, 4. However, in large part due to fluoride’s large hydration energy, basicity, and weak nucleophilicity, late-stage radiofluorinations are synthetically challenging 5. Until recently, these reactions were restricted to a relatively small subset of nucleophilic substitution reactions on aliphatic carbons (SN2) or electron-deficient aromatic rings (SNAr). The limited number of synthetic tools available to radiochemists has in turn restricted the diversity and accessibility of new ¹⁸F radiotracers and has hence hindered their development 3. Additionally, most clinical and preclinical radiosyntheses need to be carefully designed so that they can be performed in automated synthesis modules, which adds a further layer of complexity when developing scalable and clinically relevant ¹⁸F tracer syntheses 2.
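The practical cost of the 110-minute half-life can be made concrete with the exponential decay law, A(t)/A₀ = 2^(−t/t½). The minimal sketch below uses the 110-minute figure quoted above (the precise value is slightly shorter), ignores every source of loss other than physical decay, and uses a function name of our own choosing.

```python
HALF_LIFE_MIN = 110.0  # approximate 18F half-life (minutes), as quoted in the text


def fraction_remaining(t_min: float, half_life_min: float = HALF_LIFE_MIN) -> float:
    """Fraction of the starting activity left after t_min minutes of radioactive decay."""
    return 2.0 ** (-t_min / half_life_min)


if __name__ == "__main__":
    for t in (15, 30, 60, 110):
        print(f"{t:>4} min: {fraction_remaining(t):.1%} of the starting activity remains")
```

Under these assumptions a 30-minute step alone costs roughly 17% of the activity, which is why short reaction times are favored in the studies below.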

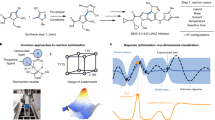

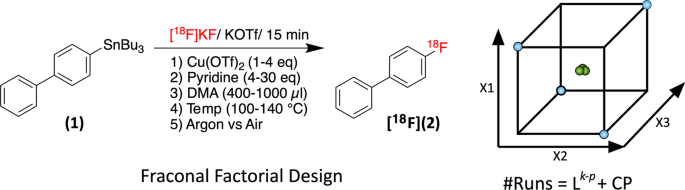

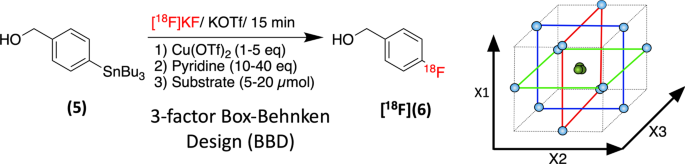

Recently, however, new ¹⁸F-labeling methodologies have been published that have begun to push the field forward, opening new avenues for radiotracer design and synthesis 2, 5, 6, 7, 8. Seminal works published by the groups of Sanford, Gouverneur and Scott have provided unprecedented new synthetic tools for the late-stage radiolabeling of electron-rich and electron-neutral aromatic rings through the copper-mediated radiofluorination (CMRF) of aryl boronic acids, aryl boronic esters and arylstannanes (Fig. 1) 9, 10, 11, 12, 13. These reactions have been demonstrated through the synthesis of a number of clinically relevant tracers, and a number of groups, including our own, have begun to adopt these methodologies for the development of novel PET tracers 14, 15.

Recent copper-mediated nucleophilic radiofluorinations of electron-rich and electron-neutral (a) arylboronic esters by Tredwell et al., (b) arylboronic acids by Mossine et al., and (c) arylstannane precursors by Makaravage et al. 9, 10, 11.

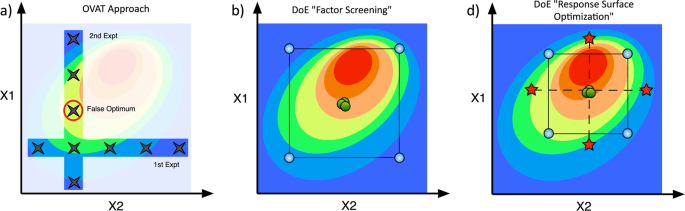

(a) The OVAT approach resolves reaction space one dimension at a time. The DoE approach builds a matrix of experimental runs to model a response surface across the whole reaction space. Different design types allow for (b) efficient factor screening studies or (c) more focused and detailed response surface optimization studies. The color grading represents the value of the true response (blue low, red high). This figure has been recreated and modified with permission from the catalysisconsulting.co.uk website 35.

However, many of these reactions have suffered from poor reproducibility and poor synthesis performance at larger scales. Work by a number of groups has identified the processing of the ¹⁸F (through QMA cartridge elution and azeotropic drying) as a critical step, as the copper mediator is particularly sensitive to the strong bases present in standard QMA eluents 14, 15. A number of efficient protocols to improve ¹⁸F QMA recovery rates and reaction conversions have thus been developed 16, 17, 18, 19, 20. These general “unified” conditions, which include the popular “minimalist” ¹⁸F processing approach, have allowed CMRF chemistry to become a more frequently used tool for novel tracer development. However, beyond the ¹⁸F processing method, CMRF reactions are themselves complex, multicomponent processes and thus require the optimization of multiple nuanced, non-linear, and (as we will show) precursor-specific experimental factors. The synthesis of almost every novel tracer (with the goal of automation) must undergo an extensive optimization of the reaction conditions, especially where new methodologies are used. This remains a crucial yet difficult, expensive, and often rate-limiting step in the tracer/synthesis development pipeline.

The uptake of new radiochemical methodologies into routine use depends heavily on the optimized reaction’s operational simplicity, scalability, reliability, and efficiency in terms of both radiochemical conversion (%RCC) and byproduct formation (radiochemical purity and specific activity) 3. Traditionally, these methodologies are optimized through the “one variable at a time” (OVAT) approach, which holds all reaction variables (Xᵢ) constant while one is adjusted until a maximum %RCC or isolated radiochemical yield (%RCY) (the response, Yᵢ) is observed. This process is repeated until all factors suspected of affecting the response of interest have been optimized one by one (Fig. 2a) 21. This procedure is simple but laborious and time-consuming, requiring many individual runs across an often large number of parameters, many of which may make no significant contribution to the response. Because this approach looks at only one factor at a time, it cannot detect factor interactions, where the setting of one factor affects the influence of another, and it therefore often provides results that are difficult to interpret 22. Additionally, the results of an OVAT study depend on the starting settings of the optimization process; OVAT is therefore prone to finding only local optima and may miss the true set of optimal conditions 23.
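The start-point dependence of OVAT can be illustrated with a toy model. The sketch below assumes a hypothetical two-factor response in coded units (−1, 0, +1) with a strong interaction term; it is not fitted to any radiochemical data. OVAT started from the high/high setting settles on a local optimum, whereas evaluating every level combination (a 3×3 full factorial, standing in here for a designed experiment) finds the better corner.

```python
from itertools import product

LEVELS = (-1.0, 0.0, 1.0)  # coded settings: low, centre, high


def response(x1: float, x2: float) -> float:
    """Hypothetical %RCC surface with a strong x1*x2 interaction (illustrative only)."""
    return 50.0 - 10.0 * x1 - 10.0 * x2 + 30.0 * x1 * x2


def ovat(start: tuple[float, float]) -> tuple[float, float]:
    """One variable at a time: tune x1 with x2 fixed at its start value, then tune x2 with x1 fixed."""
    _, x2_start = start
    best_x1 = max(LEVELS, key=lambda v: response(v, x2_start))
    best_x2 = max(LEVELS, key=lambda v: response(best_x1, v))
    return best_x1, best_x2


def full_factorial_best() -> tuple[float, float]:
    """Evaluate every level combination and return the best one."""
    return max(product(LEVELS, repeat=2), key=lambda p: response(*p))


if __name__ == "__main__":
    local = ovat(start=(1.0, 1.0))
    best = full_factorial_best()
    print("OVAT:", local, response(*local))
    print("Full factorial:", best, response(*best))
```

Starting OVAT from (−1, −1) instead does reach the better corner, which is exactly the dependence on starting settings described above.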

An alternative to the OVAT approach is factorial experimental design, or “Design of Experiments” (DoE), a systematic and statistical approach to process optimization that has been widely used by process engineers and chemists across a multitude of industries 24. Unlike OVAT, DoE aims to explore, map and model the behavior of the response (or multiple responses) within a given reaction space (the combined ranges of all factors involved) by varying all factors simultaneously according to a predefined experimental matrix (Fig. 2b,c). DoE can thus provide a more detailed picture of a process’s behavior more efficiently: it can determine the contribution of each factor to the system, model the effect of each factor on the response, and resolve factor interactions. Even with low-resolution factor screening designs, where multiple factors may be confounded, DoE aids decision making and the planning of further optimization studies 21, 25. Because DoE data are analyzed statistically across a whole study (using multiple linear regression (MLR)), the error of the regression model can be estimated without the many replicate experiments typically performed in OVAT studies; only replicate centerpoint experiments, used to calculate the pure error, are needed. This further increases the experimental efficiency of the approach. Furthermore, user-friendly software packages such as Modde and JMP have helped to lower the barrier to entry of DoE for researchers with basic experience in statistical analysis 26. In addition to those cited here, the numerous practical and scientific advantages of the DoE approach have been well outlined in a number of excellent reviews 21, 22, 23, 24, 25, 26, 27.
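In coded units (−1/+1) a two-level full factorial is orthogonal, so each MLR coefficient reduces to an average of signed responses, and replicate centre points give a model-independent estimate of pure error. The sketch below uses made-up responses for a 2³ design with three centre points; the factor names A, B and C are placeholders, not factors from this study.

```python
from itertools import product
from statistics import mean, stdev

# Coded 2^3 full factorial: every -1/+1 combination of placeholder factors A, B, C.
design = [dict(zip("ABC", levels)) for levels in product((-1, 1), repeat=3)]

# Hypothetical responses for the 8 factorial runs, in the same order as `design`.
y = [12.0, 18.0, 15.0, 33.0, 11.0, 19.0, 14.0, 34.0]

# Hypothetical replicate centre-point runs (all factors at 0), used only for pure error.
centre = [20.0, 22.0, 21.0]


def coefficient(term: str) -> float:
    """MLR coefficient of a main effect ("A") or interaction ("BC") in coded units.

    The +/-1 columns of a full factorial are mutually orthogonal, so least squares
    reduces to sum(x * y) / n. The coefficient is half the classical "effect".
    """
    total = 0.0
    for run, response in zip(design, y):
        x = 1
        for factor in term:
            x *= run[factor]
        total += x * response
    return total / len(design)


if __name__ == "__main__":
    print("intercept (factorial runs only):", mean(y))
    for term in ("A", "B", "C", "AB", "AC", "BC", "ABC"):
        print(f"{term:>3}: {coefficient(term):+.2f}")
    print("pure-error SD from centre points:", stdev(centre))
```

For non-orthogonal designs, such as those with quadratic terms, the coefficients must instead come from a general least-squares solve.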

DoE studies are usually conducted in sequential phases to answer specific scientific questions, and a large number of different DoE designs can be used in various situations to maximize the amount and quality of information obtained from the lowest number of experimental runs 25, 27. Typically, a DoE optimization begins with a low-resolution (highly confounded) fractional factorial screening design (Sup. Fig. 1a,b) to screen a large number of continuous (temperature, reagent stoichiometry, concentration, time, etc.) or discrete (atmosphere, solvent, reagent identity, etc.) variables that may affect the investigated response (%RCC, specific activity (SA), etc.). These “factor screening” (FS) experiments are designed to ascertain which factors have the largest influence on the response, give limited information on the presence of factor interactions, and eliminate non-significant factors in as few runs as possible. They are therefore usually not detailed enough to provide an accurate, predictive model of the system in question. Once the significant factors are identified, higher-resolution response surface optimization (RSO) studies with a reduced subset of experimental factors can be constructed and performed if necessary (Sup. Fig. 1c,d). These designs usually contain more experimental points per factor and are intended to produce a detailed mathematical model of the process’s behavior.

DoE has previously been demonstrated as a powerful tool for exploring and understanding new radiochemical methodologies 28, 29. In the context of copper-mediated radiosynthesis, DoE may provide a practical and efficient way to expedite the optimization process by increasing one’s understanding of the factors affecting the radiosynthesis of a new tracer at an early stage of its development. As DoE aims to maximize the information that can be obtained from a limited number of experimental runs, well-constructed DoE studies would save time, reduce the experimental resources (expensive cartridges, reagents and hot-cell/lead-castle time) devoted to developing new methods and optimizing synthesis protocols for new tracers, and lower researchers’ exposure to harmful ionizing radiation.

The aim of the present study was to assess the usefulness of a DoE approach for studying and optimizing the CMRFs of model arylstannanes as disclosed by Makaravage et al., and to glean insights into the most important experimental factors that must be considered when attempting to optimize a tracer synthesis using this methodology 11. This information was applied to an RSO DoE constructed to optimize the late-stage CMRF synthesis of 2-{(4-[¹⁸F]fluorophenyl)methoxy}pyrimidine-4-amine ([¹⁸F]pFBC), a novel tracer under development in our group that had previously suffered from poor synthesis performance and proved difficult to optimize through the conventional approach. Additionally, we used an RSO study to optimize the single-step production of 4-[¹⁸F]fluorobenzyl alcohol ([¹⁸F]pFBnOH), an ¹⁸F synthon of importance to a number of ongoing multistep radiosynthesis projects within our laboratory. In doing so, we highlight DoE as a powerful tool within radiochemistry to enhance radiochemical method development, expedite tracer synthesis optimization, and provide useful practical information about the process under investigation. This information could aid general decision making when translating a radiosynthesis to an automated synthesis module, ultimately bringing it in line with current Good Manufacturing Practices (cGMP) for clinical production.

Results and Discussion

OVAT vs DoE: the advantage of better optimization routines

To assess the potential benefit of the DoE approach for radiochemical process optimization, we studied the supplementary information (SI) of the original paper disclosing the CMRF of arylstannanes by Makaravage et al. 11. The authors investigated 8 non-discrete experimental factors, stating that each run was performed at least twice (n ≥ 2). Each factor was investigated across 3–6 different settings. Assuming n = 2 runs were performed for each setting, the authors therefore performed a minimum of 74 experimental runs (counted from the SI) to investigate the reaction’s behavior. Zarrad et al. later conducted a similar OVAT optimization study on a variation of this methodology that was based on an improved QMA ¹⁸F processing method suitable for large-scale automated syntheses 16. While their study successfully led to the development of a scalable and automatable procedure for the production of a number of PET tracers from aryltrialkylstannanes, it also required considerable experimental effort.

In contrast, a fractional factorial Resolution IV (RES IV) DoE study consisting of as few as 19 runs could be performed to identify which factors had the largest influence on the response (Sup. Table 1). If, for example, 3 factors were identified as significant, a high-resolution response surface optimization experiment (consisting of only 17 runs) could then be carried out to estimate a more detailed map of the experimental space. Thus, the DoE approach (across both FS and RSO studies) would, if valid, provide a more comprehensive model of the process in just 36 (vs 74) runs. This marked potential improvement in experimental efficiency prompted us to investigate DoE further as a tool for radiochemical optimization.
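The run counts in this comparison follow from simple arithmetic. The sketch below assumes that the 17-run RSO is a three-factor central composite design with three centre points (the configuration used later for [¹⁸F]pFBC); the screening and OVAT counts are taken directly from the text.

```python
def ccd_runs(k: int, centre_points: int) -> int:
    """Runs in a central composite design: 2^k cube points, 2k star points, plus centre points."""
    return 2 ** k + 2 * k + centre_points


OVAT_RUNS = 74        # minimum OVAT run count quoted in the text
SCREENING_RUNS = 19   # RES IV fractional factorial screening study (from the text)
RSO_RUNS = ccd_runs(k=3, centre_points=3)  # assumed CCD: 8 + 6 + 3 = 17 runs

doe_total = SCREENING_RUNS + RSO_RUNS
print(f"DoE: {doe_total} runs vs OVAT: {OVAT_RUNS} runs "
      f"(OVAT uses {OVAT_RUNS / doe_total:.2f}x as many)")
```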

Factor screening of the CMRF of arylstannanes

To identify the factors with the most significant effects on the reaction outcome, a factor screening Resolution V+ (RES V+) fractional factorial design (capable of resolving main effects and two-factor interactions and of revealing the presence of curvature in the model) was constructed using Modde Go 12 (Umetrics). 4-Tributylstannylbiphenyl (1) was chosen as a model substrate due to its availability, the low volatility of the product 4-[¹⁸F]fluorobiphenyl ([¹⁸F]2) on TLC plates, and its prevalence in the literature as a standard model compound for radiofluorination method development (Fig. 3) 11. The precursor amount was set at 2 mg (4.5 µmol) across all runs. Five factors were identified through pilot experiments and literature consultation as factors of interest: reaction solvent volume (DMA vol: 400–1000 µl DMA), temperature (Temp: 100–140 °C), copper triflate loading (Cu(OTf)₂: 1–4 eq relative to substrate), pyridine loading (Pyridine: 4–30 eq), and atmosphere (Atm: argon vs air). A number of previous studies, including that reported by Zarrad et al., have reported enhanced yields when these reactions are performed in air 9, 15, 16, 19. The effect of using argon or air on the %RCC was, however, difficult to compare and quantify during pilot experiments, and it was therefore included as a qualitative factor (argon or air) in the factor screening DoE. Time was not investigated as a factor because (i) time is related to temperature in most chemical processes and (ii) given the short half-life of ¹⁸F, a reaction duration of < 30 min is more desirable. The radiochemical conversion of the reaction (%RCC) was chosen as the response (Y%RCC), as it can be quickly and accurately measured by radioTLC.
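The factor levels are expressed in equivalents relative to the precursor, and a little stoichiometry translates them into weighable or pipettable amounts. In the sketch below, formula masses are computed from rounded standard atomic weights, the pyridine density (≈0.982 g/ml) is an approximate literature value, and the helper names are hypothetical. As a check, 2 mg of precursor 1 (C₂₄H₃₆Sn) works out to about 4.5 µmol, matching the text.

```python
# Standard atomic weights (g/mol), rounded.
ATOMIC_MASS = {"C": 12.011, "H": 1.008, "N": 14.007, "O": 15.999,
               "F": 18.998, "S": 32.06, "Cu": 63.546, "Sn": 118.71}

PRECURSOR = {"C": 24, "H": 36, "Sn": 1}              # 4-tributylstannylbiphenyl, C24H36Sn
CU_OTF2 = {"Cu": 1, "C": 2, "F": 6, "O": 6, "S": 2}  # copper(II) triflate, Cu(CF3SO3)2
PYRIDINE = {"C": 5, "H": 5, "N": 1}                  # C5H5N
PYRIDINE_DENSITY = 0.982                             # g/ml (= mg/µl), approximate, room temperature


def molar_mass(formula: dict[str, int]) -> float:
    """Molar mass in g/mol from an element -> count mapping."""
    return sum(ATOMIC_MASS[element] * count for element, count in formula.items())


def reagent_amounts(precursor_mg: float, cu_eq: float, py_eq: float) -> dict[str, float]:
    """Convert equivalents (relative to precursor) into amounts for a single run."""
    precursor_umol = precursor_mg / molar_mass(PRECURSOR) * 1000.0  # mg / (g/mol) = mmol -> µmol
    cu_mg = precursor_umol * cu_eq * molar_mass(CU_OTF2) / 1000.0     # µmol * g/mol = µg -> mg
    py_mg = precursor_umol * py_eq * molar_mass(PYRIDINE) / 1000.0
    return {"precursor_umol": precursor_umol, "cu_mg": cu_mg,
            "pyridine_ul": py_mg / PYRIDINE_DENSITY}


if __name__ == "__main__":
    for cu_eq, py_eq in ((1, 4), (4, 30)):  # low and high corners of the screening ranges
        amounts = reagent_amounts(2.0, cu_eq, py_eq)
        print(cu_eq, py_eq, {k: round(v, 2) for k, v in amounts.items()})
```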

The investigated factors and their ranges for the fractional factorial factor screening of the model synthesis of 4-[¹⁸F]fluorobiphenyl ([¹⁸F]2) from 4-tributyltinbiphenyl (1). In a fractional factorial design, experimental points are arranged at the corners of a K-dimensional hypercube. p is the total number of generators used to form the array; 1/2^p is the fraction of the total number of runs of the full factorial experiment (all 2^K vertices of the hypercube). Center points (CP, shown in green) are repeated experiments carried out at the center of the hypercube to estimate reproducibility and measure curvature in the response surface.
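A 2^(5−1) half fraction matches the 16 factorial points of the screening study: a full 2⁴ design in four factors, with the fifth generated as E = ABCD. The defining relation I = ABCDE has word length 5, which is what makes such a design Resolution V. The sketch below builds this design in coded units and decodes it to the ranges from the text. Which generator Modde actually used, and how it handles the qualitative atmosphere factor at the centre points, are not stated, so those choices (including alternating argon/air at the centre points) are assumptions.

```python
from itertools import product

# Factor ranges from the screening study; "Atm" is qualitative (two levels).
FACTORS = [
    ("DMA_vol_uL", 400, 1000),
    ("Temp_C", 100, 140),
    ("CuOTf2_eq", 1, 4),
    ("Pyridine_eq", 4, 30),
    ("Atm", "argon", "air"),
]


def coded_design(n_centre: int = 8) -> list[tuple[int, ...]]:
    """2^(5-1) half fraction with generator E = ABCD (defining relation I = ABCDE)."""
    runs = [(a, b, c, d, a * b * c * d) for a, b, c, d in product((-1, 1), repeat=4)]
    # Assumption: the qualitative factor has no centre value, so centre points
    # alternate between its two levels while the numeric factors sit at 0.
    runs += [(0, 0, 0, 0, -1 if i % 2 == 0 else 1) for i in range(n_centre)]
    return runs


def decode(run: tuple[int, ...]) -> dict[str, object]:
    """Map coded levels (-1, 0, +1) onto natural factor settings."""
    settings = {}
    for x, (name, low, high) in zip(run, FACTORS):
        if isinstance(low, str):
            settings[name] = low if x < 0 else high
        else:
            settings[name] = low + (x + 1) / 2 * (high - low)
    return settings


if __name__ == "__main__":
    design = coded_design()
    print(len(design), "runs")  # 16 factorial points + 8 centre points
    for run in design[:3]:
        print(run, decode(run))
```

Blocking these 24 runs into four days, as described next, is not modeled here.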

The fractional factorial design entailed a total of 24 experimental runs: 16 experimental points and 8 center-point experiments. Because of the practical constraints of processing and using radiofluoride, the factor screening DoE (and the later RSO DoE studies) needed to be run over multiple days. Given the time required to perform each reaction, 6 experiments per day was judged optimal. To account for uncontrollable factors arising from day-to-day variances in radiofluoride quality and quantity, QMA cartridge variations, and variations in QMA eluent, the experiments were arranged into 4 blocks of 6 runs. Each block contained two replicate center points to assess reproducibility, and the blocks were included in the model as blocking factors to account for day-to-day variation in these uncontrollable factors.

¹⁸F trapped on a QMA cartridge (preconditioned with NaHCO₃) was eluted with the QMA eluent published by Makaravage et al. and divided among the 6 reaction vials in 80 µl aliquots. The limitations of this “aliquot” method have been well documented in the literature 15. The lower base/salt content of smaller aliquot volumes of QMA eluent has less of a negative effect on %RCC than a full QMA eluent “batch”. As such, %RCC values obtained via the aliquot method are often not representative of the %RCC obtained from large-scale batch elutions of ¹⁸F with the same QMA eluent. Despite this limitation, we chose to aliquot the ¹⁸F into each reaction, as this allowed us to measure and account for variances in each QMA cartridge elution from day to day (between blocks) and ensured that the QMA eluent content present in each reaction vial after azeotropic drying would be reasonably stable within each block. Minimizing large sources of experimental error was of paramount importance for constructing an accurate DoE model.

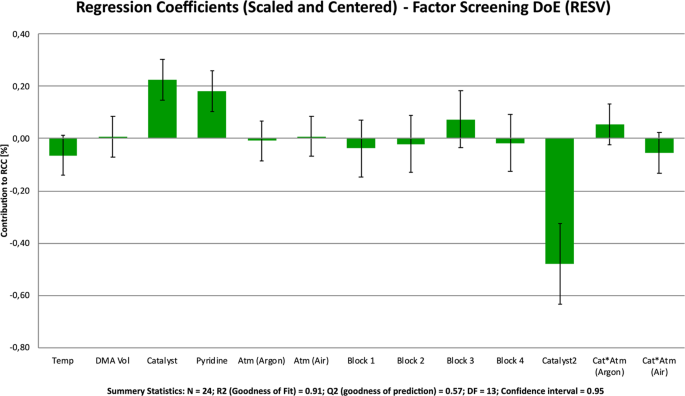

After all runs had been performed, the experimental results were analyzed using Modde Go 12. To obtain a normal distribution of the data, the %RCC data set was log10-transformed, fitted to a model using multiple linear regression (MLR), and checked for outliers and model quality. The output summary statistics suggested that the model was good enough for the purposes of factor screening (R² = 0.91, goodness of fit; Q² = 0.57, goodness of model prediction). The scaled and centered coefficients of each term in the model were used to gauge the significance of the contribution of the corresponding factors to the response (p = 0.05) (Fig. 4). The model suggested that both temperature (Temp) and total DMA volume (DMA Vol: reaction volume/concentration) were non-significant factors over the investigated ranges. Catalyst loading (Cu(OTf)₂) and ligand loading (Pyridine) were determined to be significant factors. The model also suggested the presence of curvature in the response surface, but due to the factor confounding inherent in the (RES V) experimental design, a more detailed RSO experiment would need to be conducted to determine which quadratic terms would be required to fit an accurate model. Missing quadratic terms in the linear factor screening model could explain the low Q² value in the model fit statistics. Additionally, no significant differences between the experimental blocks (Blocks 1–4) were observed, suggesting that the experimental protocol was stable from day to day.
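The two fit statistics quoted here can be reproduced in a few lines. R² measures how well the fitted model reproduces the data, while Q² measures how well it predicts data it has not seen. One common definition, assumed in the sketch below, is Q² = 1 − PRESS/SS_tot, with PRESS computed by leaving out one run at a time; Modde's exact cross-validation scheme may differ. The data and the small linear-algebra helpers are illustrative only.

```python
import math


def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve the square system a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit(X: list[list[float]], y: list[float]) -> list[float]:
    """Ordinary least squares via the normal equations (X^T X) b = X^T y."""
    p = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(p)]
    return solve(xtx, xty)


def predict(b: list[float], row: list[float]) -> float:
    return sum(bi * xi for bi, xi in zip(b, row))


def r2_q2(X: list[list[float]], y: list[float]) -> tuple[float, float]:
    """R^2 from the residuals of the full fit; Q^2 from leave-one-out PRESS."""
    mean_y = sum(y) / len(y)
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    b = fit(X, y)
    ss_res = sum((yi - predict(b, row)) ** 2 for row, yi in zip(X, y))
    press = 0.0
    for i in range(len(y)):
        b_i = fit(X[:i] + X[i + 1:], y[:i] + y[i + 1:])  # refit without run i
        press += (y[i] - predict(b_i, X[i])) ** 2          # predict the left-out run
    return 1.0 - ss_res / ss_tot, 1.0 - press / ss_tot


if __name__ == "__main__":
    # Hypothetical 2^2 factorial + 3 centre points; columns: intercept, x1, x2.
    X = [[1, -1, -1], [1, 1, -1], [1, -1, 1], [1, 1, 1], [1, 0, 0], [1, 0, 0], [1, 0, 0]]
    rcc = [5.0, 20.0, 8.0, 35.0, 14.0, 12.0, 15.0]  # made-up %RCC values
    log_rcc = [math.log10(v) for v in rcc]          # log10 transform, as in the study
    r2, q2 = r2_q2(X, log_rcc)
    print(f"R2 = {r2:.2f}, Q2 = {q2:.2f}")
```

Because leave-one-out residuals are never smaller than ordinary residuals for a least-squares fit, Q² can be at most R²; a large gap between the two is a common sign of missing model terms.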

The scaled and centered regression coefficients calculated from the results of the fractional factorial factor screening DoE. Large regression coefficients represent factors with large contributions to the response (%RCC). A positive coefficient denotes a positive influence on the response; a negative coefficient denotes a diminishing effect on the response. To fit an accurate model, non-significant terms would need to be eliminated, but for the purposes of factor screening these non-significant terms are shown here. If a factor’s regression coefficient is smaller than the associated error bars, that factor is probably not significant (at the 95% confidence level).

The factor screening DoE also suggested that, when using stoichiometric quantities of Cu(OTf)₂ (1–4 eq), the choice of atmosphere (Atm: argon/air) was not a significant factor, and the presence of atmospheric oxygen did not significantly enhance the reaction over the ranges investigated. Interestingly, however, a non-significant factor interaction between catalyst loading and atmosphere was detected: at high Cu(OTf)₂ loadings an argon atmosphere is slightly preferred, while at low Cu(OTf)₂ loadings an air atmosphere is beneficial. While its non-significance warrants excluding it from further experimental designs and models, the trend suggested by this interaction is in line with the current understanding of the oxidation cycle of the Chan-Lam coupling 30. When catalytic quantities of Cu(II)(OTf)₂ are used, an oxidative atmosphere (air) is required to activate the catalytic complex to a Cu(III) species and to regenerate the catalyst after it undergoes reductive elimination. When larger amounts of Cu(II)(OTf)₂ are used, the reaction can be performed under argon, as the oxidation of the inactive Cu(II) complex to the active Cu(III) complex is mediated by free Cu(II) through a single electron transfer 31. An important conclusion from this result is that this CMRF can be performed in automated synthesizers using inert carrier gases: operating these reactions under air, as originally suggested by Makaravage et al. 11, is not a requirement when stoichiometric loadings of Cu(OTf)₂ are used. Most routine radiosynthesis modules are set up and optimized to operate with an inert carrier gas such as nitrogen, argon or helium. While it is possible to set up and operate many synthesis modules using compressed air, it can be inconvenient to modify established routine (or GMP) syntheses and synthesis modules to operate with air.
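The crossover described above is what an interaction term produces. For a coded model y = b₀ + b_Cu·x_Cu + b_Atm·x_Atm + b_Cu×Atm·x_Cu·x_Atm, with argon coded −1 and air +1, switching from argon to air changes the prediction by 2(b_Atm + b_Cu×Atm·x_Cu), so the sign of the atmosphere effect can flip across the Cu(OTf)₂ range. The coefficients in the sketch are hypothetical, chosen only to reproduce the qualitative trend; they are not the fitted values from this study.

```python
def air_minus_argon(x_cu: float, b_atm: float, b_cu_atm: float) -> float:
    """Predicted change in response when switching argon (x_atm = -1) to air (x_atm = +1).

    For y = b0 + b_cu*x_cu + b_atm*x_atm + b_cu_atm*x_cu*x_atm the terms without x_atm
    cancel, leaving 2 * (b_atm + b_cu_atm * x_cu).
    """
    return 2.0 * (b_atm + b_cu_atm * x_cu)


B_ATM, B_CU_ATM = 0.01, -0.04  # hypothetical coded coefficients (log10 %RCC scale)

if __name__ == "__main__":
    for label, x_cu in (("low Cu(OTf)2, x = -1", -1.0), ("high Cu(OTf)2, x = +1", 1.0)):
        print(f"{label}: air - argon = {air_minus_argon(x_cu, B_ATM, B_CU_ATM):+.2f}")
```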

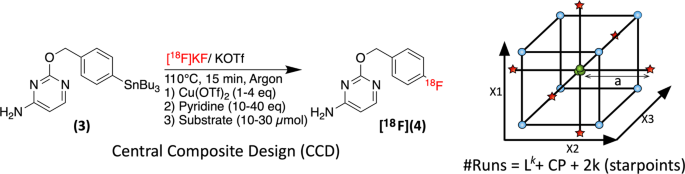

Response surface optimization of [¹⁸F]pFBC

[¹⁸F]pFBC ([¹⁸F]4), produced from precursor 3, is a novel tracer under development in our laboratory that had shown poor synthesis performance and reliability (Fig. 5). Our efforts to optimize its synthesis iteratively through the OVAT approach, in conjunction with previously published optimization data, had given inconsistent and confusing results 11, 16. Thus, having identified reaction solvent volume, temperature, atmosphere, and day-to-day uncontrollable factors as non-significant and eliminated them, we constructed a more detailed orthogonal central composite design (CCO) RSO study to optimize the radiosynthesis of this tracer. Cu(OTf)₂ loading (1–4 eq), pyridine loading (10–40 eq), and precursor loading (10–30 µmol) were chosen as factors for investigation. The reaction volume was kept constant across all runs at 700 µl, and each run was performed at 110 °C for 15 min.

The investigated factors and their ranges for the orthogonal central composite design (CCO) RSO of [¹⁸F]pFBC ([¹⁸F]4). The star-point distance α is scaled to ensure orthogonality throughout the experimental matrix.

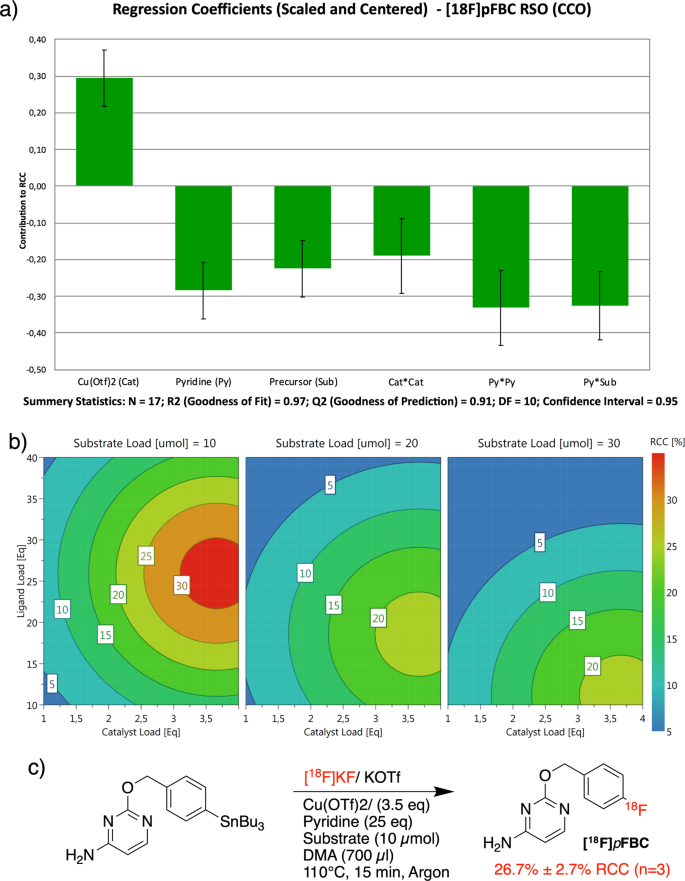

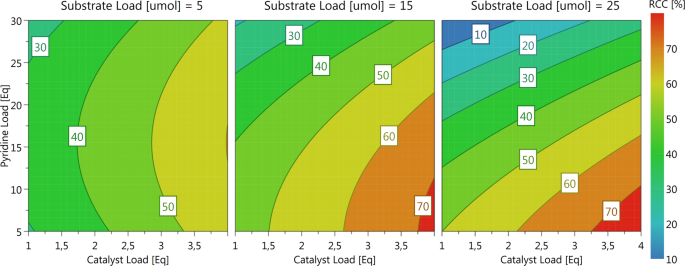
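The orthogonal star distance can be computed directly. In a CCD with F = 2^k cube points, 2k star points and n₀ centre points (N runs in total), the centred squared columns are mutually orthogonal when (F + 2α²)² = F·N. The sketch below applies this textbook condition; Modde may parameterize its CCO slightly differently, and the text does not say whether the stated factor ranges correspond to the cube or the star points, so no conversion to natural units is attempted.

```python
import math
from itertools import product


def orthogonal_alpha(k: int, n_centre: int) -> float:
    """Star distance making the centred quadratic columns of a CCD mutually orthogonal.

    With F = 2^k cube points and N total runs, orthogonality of x_i^2 and x_j^2
    (after centring) requires (F + 2*alpha^2)^2 = F * N.
    """
    f = 2 ** k
    n = f + 2 * k + n_centre
    return math.sqrt((math.sqrt(f * n) - f) / 2.0)


def cco_design(k: int = 3, n_centre: int = 3) -> list[tuple[float, ...]]:
    """Cube points, axial (star) points at +/-alpha, then centre points, in coded units."""
    alpha = orthogonal_alpha(k, n_centre)
    cube = [tuple(float(v) for v in p) for p in product((-1, 1), repeat=k)]
    stars = []
    for i in range(k):
        for sign in (-1.0, 1.0):
            point = [0.0] * k
            point[i] = sign * alpha
            stars.append(tuple(point))
    centre = [tuple([0.0] * k)] * n_centre
    return cube + stars + centre


if __name__ == "__main__":
    design = cco_design()
    print(len(design), "runs, alpha =", round(orthogonal_alpha(3, 3), 3))
```

For the three-factor, three-centre-point layout used here this gives 17 runs with α ≈ 1.353 in coded units.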

The CCO design, a type of central composite design (CCD), was chosen for its ability to estimate second-order response surfaces and resolve quadratic terms in the response surface model. The CCO design consisted of a total of 17 runs: 8 factorial points, 3 center points and 6 orthogonally scaled star points (Fig. 5). The 17 runs were again carried out using 80 µl aliquots of ¹⁸F in accordance with the general procedure described in the supplementary information. The data were modeled using MLR and analyzed in Modde Go 12. The summary of fit statistics gave R² and Q² values of 0.97 and 0.91, respectively, indicating a valid and predictive model. All three main factors had significant effects on the response (Fig. 6a). Strong quadratic behaviors were found for both the Cu(OTf)₂ loading and pyridine factors, and a strong negative factor interaction between the equivalents of pyridine and the amount of substrate was resolved and included in the model (higher amounts of pyridine are needed for lower amounts of precursor). Plotting the response surface across the investigated ranges suggested that the optimal set of conditions consisted of 3.5 equivalents of catalyst and 25 equivalents of pyridine (a ratio of ≈1:7) at a 10 µmol substrate load (Fig. 6b). Three validation runs were therefore performed using larger 180 µl aliquots of the QMA solution (400–500 MBq) under the optimized conditions (Fig. 6c). These three runs gave %RCCs of 24.9%, 25.3%, and 29.8% (26.7 ± 2.7% RCC, n = 3), demonstrating the robustness of these conditions and giving the highest %RCCs obtained for [¹⁸F]pFBC thus far using this reaction.
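Once a full quadratic model has been fitted, a standard complement to reading contour plots is to locate its stationary point by setting the gradient to zero and checking whether that point is a maximum, a minimum or a saddle. The sketch below does this for two factors in coded units with hypothetical coefficients; it is not the model fitted in this study. If the stationary point falls outside the investigated region (the optimum above lay at the 10 µmol edge of the substrate range), the best conditions lie on the boundary and have to be read from the surface instead.

```python
def stationary_point(b1: float, b2: float, b11: float, b22: float, b12: float):
    """Stationary point of y = b0 + b1*x1 + b2*x2 + b11*x1^2 + b22*x2^2 + b12*x1*x2.

    Setting the gradient to zero gives H @ x = -[b1, b2] with Hessian
    H = [[2*b11, b12], [b12, 2*b22]], solved here by Cramer's rule.
    """
    det = 4.0 * b11 * b22 - b12 ** 2
    if det == 0:
        raise ValueError("singular Hessian: no unique stationary point")
    x1 = (-2.0 * b1 * b22 + b2 * b12) / det
    x2 = (-2.0 * b2 * b11 + b1 * b12) / det
    if det > 0:
        kind = "maximum" if b11 < 0 else "minimum"
    else:
        kind = "saddle point"
    return x1, x2, kind


if __name__ == "__main__":
    # Hypothetical coded coefficients for x1 = Cu(OTf)2 loading, x2 = pyridine loading.
    x1, x2, kind = stationary_point(b1=2.0, b2=1.0, b11=-3.0, b22=-2.0, b12=1.0)
    inside = max(abs(x1), abs(x2)) <= 1.0
    print(kind, round(x1, 3), round(x2, 3), "inside cube" if inside else "outside cube")
```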

(a) The scaled and centered regression coefficients calculated from the results of the RSO (CCO). (b) 4D plot output from Modde Go 12. Pyridine (ligand) and catalyst loadings are plotted on the vertical and horizontal axes, respectively. The three windows, from right to left, represent an increasing amount of substrate (10–30 µmol). (c) Reaction conditions and radiochemical conversions of the optimized CMRF synthesis of [¹⁸F]pFBC.

Response surface optimization of the synthesis of 4-[¹⁸F]fluorobenzyl alcohol ([¹⁸F]pFBnOH)

The synthesis of [¹⁸F]pFBnOH ([¹⁸F]6), an important radiochemical building block, has also been of interest to a number of projects within our laboratory. [¹⁸F]6 has previously been synthesized in two steps via the nucleophilic aromatic substitution of 4-formyl-N,N,N-trimethylanilinium triflate and the subsequent reduction of the resulting 4-[¹⁸F]fluorobenzaldehyde to [¹⁸F]6 32, 33. In our hands, the reduction step using NaBH₄ resulted in a significant loss of the product [¹⁸F]6, and we thus chose to investigate the CMRF of 4-tributyltinbenzyl alcohol (5) as a possible single-step alternative route to [¹⁸F]6 (Fig. 7). [¹⁸F]6 could be reasonably purified via solid-phase extraction before use in a second synthesis step (these results will be published in due course). Using the information obtained from our previous DoE studies, an RSO experiment was constructed to optimize the synthesis of [¹⁸F]6 using a Box-Behnken design (BBD) (Fig. 7). The BBD requires slightly fewer runs than an equivalent CCD and also avoids experimental runs with combined extremes of the experimental factors. The three-factor Box-Behnken design featured a total of 15 runs (12 experimental points and 3 center points). Again, substrate loading (5–25 µmol), catalyst loading (1–4 eq) and pyridine loading (5–30 eq) were chosen as factors for investigation. The reaction volume was again kept constant across all runs at 700 µl, and the reactions were each performed according to the general procedure at 110 °C for 20 min.
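The 15-run layout follows directly from how a three-factor Box-Behnken design is built: a 2² factorial in each pair of factors with the third held at its centre level, plus centre points. The sketch below builds it in coded units and confirms that no run combines all three extremes. This all-pairs construction reproduces the standard three-factor design; designs for larger numbers of factors may use different block structures.

```python
from itertools import combinations, product


def box_behnken_3(n_centre: int = 3) -> list[tuple[int, int, int]]:
    """Three-factor Box-Behnken design in coded units (-1, 0, +1)."""
    runs = []
    for i, j in combinations(range(3), 2):          # factor pairs (0,1), (0,2), (1,2)
        for a, b in product((-1, 1), repeat=2):     # 2^2 factorial in that pair
            point = [0, 0, 0]                       # remaining factor at its centre level
            point[i], point[j] = a, b
            runs.append(tuple(point))
    runs += [(0, 0, 0)] * n_centre
    return runs


if __name__ == "__main__":
    design = box_behnken_3()
    print(len(design), "runs")
    # No run sets all three factors to an extreme: every point has at least one 0.
    print(all(0 in run for run in design))
```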

The investigated factors and their ranges in the Box-Behnken response surface optimization design for the synthesis of [18F]pFBnOH ([18F]6) from p-tributyltinbenzyl alcohol (5). The BBD places the experimental points at the midpoints of the edges of the reaction-space cube and can be thought of as a combination of three 2D full factorial designs (oriented at 90° to each other) with shared center points.
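The design geometry described in this caption can be generated in a few lines. The following is a minimal Python sketch, not the MODDE Go 12 algorithm: it builds the coded three-factor BBD (a 2 × 2 full factorial at ±1 for each pair of factors with the third factor held at its center level, plus three shared center points), converts the coded levels to the factor ranges used here, and shuffles the runs into a random execution order. The dictionary keys and the function names `box_behnken_coded`, `decode` and `worksheet` are hypothetical, and the run order MODDE produces will differ.

```python
import itertools
import random

# Factor ranges (low, high) used for the [18F]6 BBD; the key names are hypothetical.
FACTORS = {
    "substrate_umol": (5.0, 25.0),
    "cu_otf2_eq": (1.0, 4.0),
    "pyridine_eq": (5.0, 30.0),
}


def box_behnken_coded(n_center=3):
    """Coded (-1/0/+1) runs of the three-factor Box-Behnken design.

    For each pair of factors, a 2x2 full factorial is placed at +/-1 while the
    third factor stays at 0 (the edge midpoints of the cube); replicated
    center points are appended at the end.
    """
    n_factors = 3
    runs = []
    for i, j in itertools.combinations(range(n_factors), 2):
        for a, b in itertools.product((-1, 1), repeat=2):
            point = [0] * n_factors
            point[i], point[j] = a, b
            runs.append(point)
    runs.extend([0] * n_factors for _ in range(n_center))
    return runs


def decode(coded_run, ranges=FACTORS):
    """Convert coded levels to real units: center + level * half-range."""
    real = {}
    for level, (name, (low, high)) in zip(coded_run, ranges.items()):
        real[name] = (low + high) / 2 + level * (high - low) / 2
    return real


def worksheet(seed=None):
    """All runs in real units, shuffled into a random execution order."""
    runs = [decode(r) for r in box_behnken_coded()]
    random.Random(seed).shuffle(runs)
    return runs


for number, run in enumerate(worksheet(seed=1), start=1):
    print(number, run)
```

Because no run sets all three factors to an extreme at the same time, corner conditions of the cube (for example 25 µmol substrate with 4 eq Cu(OTf)2 and 30 eq pyridine) are never visited, which is the property of the BBD referred to in the text.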

Fitting the data using MLR in MODDE Go 12 gave summary-of-fit statistics indicating a valid model (R² = 0.97 and Q² = 0.86). Catalyst loading and pyridine loading were found to be significant factors, with pyridine showing quadratic behavior. In this case, precursor loading was not a significant factor over the investigated range. The response surface plot suggested that the optimum reaction conditions combine a high catalyst load and a low pyridine load, with a higher substrate load being slightly (though not significantly) beneficial (Fig. 8). Validation runs with larger 180 µl 18F aliquots were again performed as before, using a substrate loading of 25 µmol, 4 equivalents of Cu(OTf)2 and 5 equivalents of pyridine in 700 µl of DMA. These conditions afforded [18F]6 with a %RCC of 58 ± 5.3% (n = 4) in a single step. While these results were lower than those predicted by the response surface model, they again provided the product more efficiently than we had previously achieved using the general fluoride processing and reaction conditions published by Makaravage et al.
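The R² and Q² statistics quoted above can be illustrated with a generic least-squares sketch. The block below is not a reproduction of MODDE Go 12's calculations, whose implementation may differ in detail: it fits a full quadratic MLR model to a coded three-factor BBD, computes R² from the residual sum of squares, and computes Q² from the predicted residual sum of squares (PRESS), using leave-one-out residuals e_i/(1 − h_ii) obtained from the hat matrix. The response values are synthetic and hypothetical, not the published data, and the function names are illustrative.

```python
import itertools

import numpy as np


def quadratic_model_matrix(X):
    """Intercept, linear, two-factor interaction and squared terms of coded factors."""
    n, k = X.shape
    cols = [np.ones(n)]
    cols += [X[:, i] for i in range(k)]
    cols += [X[:, i] * X[:, j] for i, j in itertools.combinations(range(k), 2)]
    cols += [X[:, i] ** 2 for i in range(k)]
    return np.column_stack(cols)


def fit_r2_q2(X, y):
    """Least-squares MLR fit; returns (coefficients, R^2, Q^2).

    Q^2 = 1 - PRESS / SS_tot, where PRESS sums the squared leave-one-out
    residuals e_i / (1 - h_ii) and h_ii are the hat-matrix diagonal elements.
    """
    A = quadratic_model_matrix(X)
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    residuals = y - A @ beta
    ss_tot = np.sum((y - y.mean()) ** 2)
    leverage = np.diag(A @ np.linalg.pinv(A.T @ A) @ A.T)
    press = np.sum((residuals / (1.0 - leverage)) ** 2)
    r2 = 1.0 - np.sum(residuals ** 2) / ss_tot
    q2 = 1.0 - press / ss_tot
    return beta, r2, q2


# Coded three-factor Box-Behnken design: 12 edge midpoints + 3 center points.
edges = []
for i, j in itertools.combinations(range(3), 2):
    for a, b in itertools.product((-1.0, 1.0), repeat=2):
        point = [0.0, 0.0, 0.0]
        point[i], point[j] = a, b
        edges.append(point)
X = np.array(edges + [[0.0, 0.0, 0.0]] * 3)

# Hypothetical %RCC response (NOT the published data): catalyst (column 1)
# helps, pyridine (column 2) hurts with curvature, substrate has no effect.
rng = np.random.default_rng(0)
y = 40 + 8 * X[:, 1] - 6 * X[:, 2] - 5 * X[:, 2] ** 2 + rng.normal(0, 2, len(X))

beta, r2, q2 = fit_r2_q2(X, y)
print(f"R2 = {r2:.2f}, Q2 = {q2:.2f}")
```

Since every leave-one-out residual is at least as large in magnitude as the ordinary residual, Q² can never exceed R²; a large gap between the two suggests an overfitted model with poor predictive ability.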

The response surface output from the Box-Behnken response surface optimization of [18F]6.

In this case, deviations from the predicted model may be due to factors such as the amount of carbonate base present in the larger volumes of QMA eluent solution (as discussed above), effects of as-yet unidentified controllable or uncontrollable factors specific to this reaction, and/or model or data inaccuracies arising from random or systematic experimental error. Nonetheless, this easily automatable procedure mitigated the product losses sustained with the previously published two-step synthesis approach (ref. 32).
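The carbonate argument can be made concrete with simple proportional arithmetic. Assuming that the K2CO3 and KOTf in the QMA eluent described in the general procedure (50 µg K2CO3 and 10 mg KOTf in 550 µl H2O) are evenly distributed through the eluate, the salt load carried into a reactor scales linearly with the aliquot volume. The sketch below compares the 80 µl aliquots used for the DoE runs with the 180 µl validation aliquots; the molar mass of K2CO3 (about 138.21 g/mol) is a standard value added here, and the function name `aliquot_salts` is hypothetical.

```python
# QMA eluent composition from the general procedure.
ELUENT_UL = 550.0          # total eluent volume (ul)
K2CO3_UG = 50.0            # K2CO3 in the full 550 ul (ug)
KOTF_MG = 10.0             # KOTf in the full 550 ul (mg)
K2CO3_G_PER_MOL = 138.21   # standard molar mass of K2CO3


def aliquot_salts(aliquot_ul):
    """Salt load in one aliquot, assuming even distribution in the eluate.

    Returns (K2CO3 in ug, K2CO3 in nmol, KOTf in mg).
    """
    fraction = aliquot_ul / ELUENT_UL
    k2co3_ug = K2CO3_UG * fraction
    k2co3_nmol = k2co3_ug / K2CO3_G_PER_MOL * 1000.0  # ug / (g/mol) = umol -> nmol
    kotf_mg = KOTF_MG * fraction
    return k2co3_ug, k2co3_nmol, kotf_mg


# 80 ul: DoE runs; 180 ul: validation runs.
for volume in (80.0, 180.0):
    ug, nmol, kotf = aliquot_salts(volume)
    print(f"{volume:.0f} ul aliquot: K2CO3 {ug:.2f} ug ({nmol:.0f} nmol), KOTf {kotf:.2f} mg")
```

Under this assumption, each 180 µl validation aliquot carries 2.25 times as much carbonate and triflate as an 80 µl DoE aliquot, i.e. base and salt levels outside those present when the model data were collected.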

These data were used to successfully guide the automation of [18F]pFBC as well as [18F]pFBnOH (as part of a larger multistep radiosynthesis project) on an Elixys Flex/Chem synthesis module (Sofie Biosciences, USA). These automated syntheses will be reported in due course as part of a larger tracer development study. It must, however, be noted that the radiochemical yields of the automated syntheses were, as expected, significantly lower than those predicted by the response surface models. This is in all likelihood due to the difference between the single-batch 18F processing used in the automated syntheses and the aliquoted 18F processing used to carry out the DoE experiments. Although the large-scale radiosyntheses were nonetheless useful for imaging studies, this remains a significant limitation of the presented DoE studies. However, we suspect that the results of our reaction optimization can be viewed independently of the known issues associated with fluoride processing, and we are currently working to confirm this hypothesis. As such, we believe that improved fluoride processing techniques, such as the “minimalist” approach to fluoride processing, may drastically improve the large-scale performance of our optimized copper-mediated radiofluorination conditions, and we are currently working to implement these methods in our workflow.

Comparing our factor screening and response surface models with the results of the previous OVAT optimization studies by Makaravage et al. and Zarrad et al. reveals remarkably similar trends where the models are comparable (absolute %RCC values differ considerably owing to the different 18F processing methods used). For example, substrate load and copper triflate loading both show quadratic behavior, and their optima are reasonably well aligned with the analogous regions in our response surface models, despite the large differences in 18F processing method. This lends weight to our hypothesis that the experimental factors affecting the reaction can be modeled separately from the 18F processing conditions; i.e., there is no (or only a weak) interaction between the 18F processing conditions and the reaction parameters investigated in this study. This still requires further investigation and will be reported on in due course. The multiparametric response surfaces provided by the DoE studies presented here also highlight that much more information about a process can be obtained from fewer experiments when the DoE approach is appropriately applied.

Comparison of the two response surface models for [18F]pFBC and [18F]pFBnOH shows that the latter requires a lower quantity of pyridine and a higher substrate concentration for optimal radiolabeling, whereas the synthesis of [18F]pFBC benefits from a lower substrate concentration and a higher pyridine load. This suggests that the nature of the substrate is a major factor when developing optimal CMRF reaction conditions. Some heterocycles have previously been noted to have marked deleterious effects on %RCC, likely due to the formation of unreactive substrate/catalyst species. Taylor et al. examined the effects of various substrates on the analogous CMRF of boronic acid esters by performing reactions with a model substrate while holding the reaction conditions constant and doping the reactions with various heterocycles and other moieties commonly found in drug-like molecules (ref. 34). From their results, they were able to construct a database of heterocyclic moieties compatible with their radiofluorination conditions, which could be used to plan and “de-risk” future radiosyntheses. Our data suggest that, in certain cases, a detailed understanding of the process and careful optimization of the important experimental factors could (to some degree) offset these deleterious effects, saving time by reducing the need to design complex multistep synthesis routes around problematic moieties in the candidate precursor. In combination with a database of problematic moieties (such as that published by Taylor et al.), well-designed DoE studies could aid in establishing usable radiofluorination protocols early in a tracer’s development and thus expedite its passage from conception to its first preclinical studies. Scientists can then quickly decide whether the tracer is biologically interesting and whether further optimization, or the development of an improved synthetic strategy for GMP production, is indeed warranted.

The work presented here highlights the benefit of using the DoE approach to aid the development of new radiochemical methodologies as well as PET tracer development and production. The systematic use of DoE streamlines the optimization process, saving time and resources while providing multiparametric information that can guide decision-making early in a tracer’s development. While we have specifically investigated the use of DoE for optimizing the copper-mediated radiofluorination of arylstannanes as a proof of principle, it is important to note that DoE can be applied to any complex optimization problem. The availability of a number of easy-to-use DoE software packages (such as MODDE Go 12 and JMP) has allowed us to apply DoE to the synthesis optimization of a number of novel tracers under development, and we are currently applying the presented DoE data and the general DoE approach to expedite the delivery of a number of biologically interesting tracers to imaging scientists within our group. We have also begun to explore the use of DoE as a research tool to guide reaction development and aid in the establishment of new radiochemical methodologies within our laboratory. We hope that DoE will become a more widely used tool that helps bring new radiochemical methods into clinical and preclinical relevance and, in turn, expands the chemical diversity of new 18F-labelled tracers.

The synthesis procedures and characterization data of all precursor and non-radioactive standard compounds can be found in the supplementary information attached to this paper, along with the DoE design worksheets and regression model statistics.

General radiochemistry

As a general procedure for all radiochemical experiments, [18F]fluoride in water was obtained from a cyclotron (GE PETtrace 800) target wash and trapped on a QMA cartridge (QMA Light Carb, Waters; preconditioned sequentially with 1 M NaHCO3 (10 ml), air (10 ml), water (10 ml) and air (10 ml)), then eluted with the QMA eluent solution published by Makaravage et al. (K2CO3 50 µg and KOTf 10 mg in 550 µl H2O). To ensure consistency in the potassium [18F]fluoride and potassium triflate content introduced from the QMA eluent, the eluted radiofluoride was aliquoted (80 µl) into six oven-dried 5 ml Wheaton V-vial reactors (200–300 MBq), and each reactor was separately azeotropically dried at 110 °C with acetonitrile (3 × 1 ml) under a stream of argon gas (as opposed to drying a single batch and aliquoting the poorly soluble [18F]KF thereafter). The reaction mixtures required by the DoE worksheet were formulated from stock solutions of the required reagents in DMA (1 mg/10 µl) and diluted with DMA to the required reaction volume. Reactions run under argon were purged with a stream of argon gas for 20 s; reactions run under air were purged with air in a similar fashion. The reactions were run at the required temperature for the specified time, after which they were quenched with 1 ml of water to solubilize the remaining fluoride. Samples of each reaction were taken for analysis.
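For readers setting up a similar worksheet, the conversion from DoE factor levels to stock-solution volumes can be sketched as follows. This sketch assumes that catalyst and pyridine equivalents are expressed relative to the substrate, that every reagent is dispensed from a DMA stock at 1 mg/10 µl (0.1 mg/µl) as stated above, and uses approximate molar masses for 4-(tributylstannyl)benzyl alcohol (5), Cu(OTf)2 and pyridine. All of these, and the names `stock_volume_ul` and `formulate_run`, are illustrative assumptions rather than the published protocol. The function raises an error if the stock volumes alone exceed the fixed reaction volume, since such a run could not be made up at this stock concentration.

```python
# Hypothetical helper: formulate one run from DMA stocks at 1 mg per 10 ul.
STOCK_MG_PER_UL = 0.1

# Approximate molar masses in g/mol (assumed values for illustration).
MOLAR_MASS = {
    "substrate_5": 397.19,  # 4-(tributylstannyl)benzyl alcohol, C19H34OSn
    "cu_otf2": 361.68,      # copper(II) triflate
    "pyridine": 79.10,
}


def stock_volume_ul(amount_umol, molar_mass):
    """Volume of a 0.1 mg/ul stock that delivers amount_umol of a reagent."""
    mass_mg = amount_umol * molar_mass / 1000.0  # umol * g/mol = ug -> mg
    return mass_mg / STOCK_MG_PER_UL


def formulate_run(substrate_umol, cu_eq, pyridine_eq, total_ul=700.0):
    """Stock and DMA top-up volumes (ul) for one run.

    Assumes equivalents are relative to the substrate. Raises ValueError if
    the stock solutions alone would exceed the reaction volume.
    """
    volumes = {
        "substrate_5": stock_volume_ul(substrate_umol, MOLAR_MASS["substrate_5"]),
        "cu_otf2": stock_volume_ul(cu_eq * substrate_umol, MOLAR_MASS["cu_otf2"]),
        "pyridine": stock_volume_ul(pyridine_eq * substrate_umol, MOLAR_MASS["pyridine"]),
    }
    dma_ul = total_ul - sum(volumes.values())
    if dma_ul < 0:
        raise ValueError("stock volumes exceed the reaction volume")
    volumes["dma_top_up"] = dma_ul
    return volumes


# [18F]6 validation conditions: 25 umol substrate, 4 eq Cu(OTf)2, 5 eq pyridine.
for name, vol in formulate_run(25.0, 4.0, 5.0).items():
    print(f"{name}: {vol:.1f} ul")
```

The example uses the [18F]6 validation conditions (25 µmol substrate, 4 eq Cu(OTf)2 and 5 eq pyridine in 700 µl total); other worksheet rows would be handled in the same way.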

Reaction analysis

Radio-TLC was used to determine the relative incorporation of radiofluoride into the substrate; both product and by-product signals were quantified in order to determine %RCC. HPLC analysis was performed on representative samples over the course of the DoE studies to confirm compound identity.
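The %RCC determination described above amounts to a ratio of integrated radio-TLC signals. A minimal sketch, assuming the integrated counts for the product, unreacted [18F]fluoride and each radioactive by-product are already available from the TLC scanner software (the function names and example counts are hypothetical):

```python
from statistics import mean, stdev


def percent_rcc(product_counts, other_counts):
    """Radiochemical conversion (%) from integrated radio-TLC signals.

    product_counts: integrated signal of the labelled product.
    other_counts: signals of every other radioactive species on the plate,
    e.g. unreacted [18F]fluoride and any radioactive by-products.
    """
    total = product_counts + sum(other_counts)
    if total <= 0:
        raise ValueError("no radioactive signal was integrated")
    return 100.0 * product_counts / total


def summarize(rcc_values):
    """Return (mean, sample standard deviation, n) for replicate runs."""
    return mean(rcc_values), stdev(rcc_values), len(rcc_values)


# Hypothetical integrated counts: product, then fluoride and one by-product.
print(percent_rcc(600, [350, 50]))  # prints 60.0
# Hypothetical replicate %RCC values.
m, sd, n = summarize([55.0, 60.0, 65.0])
print(f"{m:.1f} ± {sd:.1f}% (n = {n})")
```

Replicate runs can then be reported in the same mean ± SD (n) form as the validation results above; whether the published values use the sample or the population standard deviation is not stated, and the sample form is assumed here.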

Experimental design and analysis

All DoE studies were designed using the DoE software package MODDE Go 12 (Umetrics). After the factors and responses of interest were defined, an appropriate design type was selected and a DoE experimental worksheet was generated. All experiments were performed in randomized order. After the %RCC data were collected, they were modelled using MLR and checked for outliers and model quality, after which the model could be used for factor screening or response surface optimization.

van der Born, D. et al. Fluorine-18 labelled building blocks for PET tracer synthesis. Chem. Soc. Rev. 46, 4709–4773 (2017).

Brooks, A. F., Topczewski, J. J., Ichiishi, N., Sanford, M. S. & Scott, P. J. H. Late-stage [18F]fluorination: New solutions to old problems. Chem. Sci. 5, 4545–4553 (2014).

Campbell, M. G. et al. Bridging the gaps in 18F PET tracer development. Nat. Chem. 9, 1–3 (2016).

Miller, P. W., Long, N. J., Vilar, R. & Gee, A. D. Synthesis of 11C, 18F, 15O, and 13N Radiolabels for Positron Emission Tomography. Angew. Chem. Int. Ed. 47, 8998–9033 (2008).

Campbell, M. G. & Ritter, T. Modern carbon-fluorine bond forming reactions for aryl fluoride synthesis. Chem. Rev. 115, 612–633 (2015).

Beyzavi, M. H. et al. 18F-Deoxyfluorination of Phenols via Ru π-Complexes. ACS Cent. Sci. 3, 944–948 (2017).

Lee, E. et al. A Fluoride-Derived Electrophilic Late-Stage Fluorination Reagent for PET Imaging. Science 334, 639–642 (2011).

Preshlock, S., Tredwell, M. & Gouverneur, V. 18F-Labeling of Arenes and Heteroarenes for Applications in Positron Emission Tomography. Chem. Rev. 116, 719–766 (2016).

Tredwell, M. et al. A General Copper-Mediated Nucleophilic 18F Fluorination of Arenes. Angew. Chem. Int. Ed. 53, 7751–7755 (2014).

Mossine, A. V. et al. Synthesis of [18F]Arenes via the Copper-Mediated [18F]Fluorination of Boronic Acids. Org. Lett. 17, 5780–5783 (2015).

Makaravage, K. J., Brooks, A. F., Mossine, A. V., Sanford, M. S. & Scott, P. J. H. Copper-Mediated Radiofluorination of Arylstannanes with [18F]KF. Org. Lett. 18, 5440–5443 (2016).

Ichiishi, N. et al. Copper-Catalyzed [18F]Fluorination of (Mesityl)(aryl)iodonium Salts. Org. Lett. 16, 3224–3227 (2014).

McCammant, M. S. et al. Cu-Mediated C-H 18F-Fluorination of Electron-Rich (Hetero)arenes. Org. Lett. 19, 3939–3942 (2017).

Preshlock, S. et al. Enhanced copper-mediated 18F-fluorination of aryl boronic esters provides eight radiotracers for PET applications. Chem. Commun. 52, 8361–8364 (2016).

Zlatopolskiy, B. D. et al. Copper-mediated aromatic radiofluorination revisited: Efficient production of PET tracers on a preparative scale. Chem. Eur. J. 21, 5972–5979 (2015).

Zarrad, F., Zlatopolskiy, B. D., Krapf, P., Zischler, J. & Neumaier, B. A practical method for the preparation of 18F-labeled aromatic amino acids from nucleophilic [18F]fluoride and stannyl precursors for electrophilic radiohalogenation. Molecules 22 (2017).

Antuganov, D. et al. Copper-Mediated Radiofluorination of Aryl Pinacolboronate Esters: A Straightforward Protocol by Using Pyridinium Sulfonates. Eur. J. Org. Chem. 2019, 918–922 (2019).

Richarz, R. et al. Neither azeotropic drying, nor base nor other additives: A minimalist approach to 18F-labeling. Org. Biomol. Chem. 12, 8094–8099 (2014).

Zischler, J., Kolks, N., Modemann, D., Neumaier, B. & Zlatopolskiy, B. D. Alcohol-Enhanced Cu-Mediated Radiofluorination. Chem. Eur. J. 23, 3251–3256 (2017).

Mossine, A. V. et al. Development of Customized [18F]Fluoride Elution Techniques for the Enhancement of Copper-Mediated Late-Stage Radiofluorination. Sci. Rep. 7, 233 (2017).

Murray, P. M. et al. The application of design of experiments (DoE) reaction optimisation and solvent selection in the development of new synthetic chemistry. Org. Biomol. Chem. 14, 2373–2384 (2016).