
2.2 Psychologists Use Descriptive, Correlational, and Experimental Research Designs to Understand Behavior

Learning Objectives

- Differentiate the goals of descriptive, correlational, and experimental research designs and explain the advantages and disadvantages of each.

- Explain the goals of descriptive research and the statistical techniques used to interpret it.

- Summarize the uses of correlational research and describe why correlational research cannot be used to infer causality.

- Review the procedures of experimental research and explain how it can be used to draw causal inferences.

Psychologists agree that if their ideas and theories about human behavior are to be taken seriously, they must be backed up by data. However, the research of different psychologists is designed with different goals in mind, and the different goals require different approaches. These varying approaches, summarized in Table 2.2 “Characteristics of the Three Research Designs”, are known as research designs. A research design is the specific method a researcher uses to collect, analyze, and interpret data. Psychologists use three major types of research designs in their research, and each provides an essential avenue for scientific investigation. Descriptive research is research designed to provide a snapshot of the current state of affairs. Correlational research is research designed to discover relationships among variables and to allow the prediction of future events from present knowledge. Experimental research is research in which initial equivalence among research participants in more than one group is created, followed by a manipulation of a given experience for these groups and a measurement of the influence of the manipulation. The three research designs differ in their strengths and limitations, and it is important to understand how each differs.

Table 2.2 Characteristics of the Three Research Designs

| Research design | Goal | Advantages | Disadvantages |
|---|---|---|---|
| Descriptive | To create a snapshot of the current state of affairs | Provides a relatively complete picture of what is occurring at a given time. Allows the development of questions for further study. | Does not assess relationships among variables. May be unethical if participants do not know they are being observed. |
| Correlational | To assess the relationships between and among two or more variables | Allows testing of expected relationships between and among variables and the making of predictions. Can assess these relationships in everyday life events. | Cannot be used to draw inferences about the causal relationships between and among the variables. |
| Experimental | To assess the causal impact of one or more experimental manipulations on a dependent variable | Allows drawing of conclusions about the causal relationships among variables. | Cannot experimentally manipulate many important variables. May be expensive and time consuming. |

There are three major research designs used by psychologists, and each has its own advantages and disadvantages.

Stangor, C. (2011). Research methods for the behavioral sciences (4th ed.). Mountain View, CA: Cengage.

Descriptive Research: Assessing the Current State of Affairs

Descriptive research is designed to create a snapshot of the current thoughts, feelings, or behavior of individuals. This section reviews three types of descriptive research: case studies, surveys, and naturalistic observation.

Sometimes the data in a descriptive research project are based on only a small set of individuals, often only one person or a single small group. These research designs are known as case studies: descriptive records of one or more individuals’ experiences and behavior. Sometimes case studies involve ordinary individuals, as when developmental psychologist Jean Piaget used his observation of his own children to develop his stage theory of cognitive development. More frequently, case studies are conducted on individuals who have unusual or abnormal experiences or characteristics or who find themselves in particularly difficult or stressful situations. The assumption is that by carefully studying individuals who are socially marginal, who are experiencing unusual situations, or who are going through a difficult phase in their lives, we can learn something about human nature.

Sigmund Freud was a master of using the psychological difficulties of individuals to draw conclusions about basic psychological processes. Freud wrote case studies of some of his most interesting patients and used these careful examinations to develop his important theories of personality. One classic example is Freud’s description of “Little Hans,” a child whose fear of horses the psychoanalyst interpreted in terms of repressed sexual impulses and the Oedipus complex (Freud, 1909/1964).

Political polls reported in newspapers and on the Internet are descriptive research designs that provide snapshots of the likely voting behavior of a population.

Another well-known case study is that of Phineas Gage, a man whose thoughts and emotions were extensively studied by cognitive psychologists after an iron tamping rod was blasted through his skull in a railroad construction accident. Although there is question about the interpretation of this case study (Kotowicz, 2007), it did provide early evidence that the brain’s frontal lobe is involved in emotion and morality (Damasio et al., 2005). An interesting example of a case study in clinical psychology is described by Rokeach (1964), who investigated in detail the beliefs and interactions among three patients with schizophrenia, all of whom were convinced they were Jesus Christ.

In other cases the data from descriptive research projects come in the form of a survey: a measure administered through either an interview or a written questionnaire to get a picture of the beliefs or behaviors of a sample of people of interest. The people chosen to participate in the research (known as the sample) are selected to be representative of all the people that the researcher wishes to know about (the population). In election polls, for instance, a sample is taken from the population of all “likely voters” in the upcoming elections.

The results of surveys may sometimes be rather mundane, such as “Nine out of ten doctors prefer Tymenocin,” or “The median income in Montgomery County is $36,712.” Yet other times (particularly in discussions of social behavior), the results can be shocking: “More than 40,000 people are killed by gunfire in the United States every year,” or “More than 60% of women between the ages of 50 and 60 suffer from depression.” Descriptive research is frequently used by psychologists to get an estimate of the prevalence (or incidence) of psychological disorders.

A final type of descriptive research, known as naturalistic observation, is research based on the observation of everyday events. For instance, a developmental psychologist who watches children on a playground and describes what they say to each other while they play is conducting descriptive research, as is a biopsychologist who observes animals in their natural habitats. One example of observational research involves a systematic procedure known as the strange situation, used to get a picture of how adults and young children interact. The data that are collected in the strange situation are systematically coded in a coding sheet such as that shown in Table 2.3 “Sample Coding Form Used to Assess Child’s and Mother’s Behavior in the Strange Situation”.

Table 2.3 Sample Coding Form Used to Assess Child’s and Mother’s Behavior in the Strange Situation

| Coder name: | | | | |
|---|---|---|---|---|
| Mother and baby play alone | | | | |
| Mother puts baby down | | | | |
| Stranger enters room | | | | |
| Mother leaves room; stranger plays with baby | | | | |
| Mother reenters, greets and may comfort baby, then leaves again | | | | |
| Stranger tries to play with baby | | | | |
| Mother reenters and picks up baby | | | | |
| The baby moves toward, grasps, or climbs on the adult. | | | | |
| The baby resists being put down by the adult by crying or trying to climb back up. | | | | |
| The baby pushes, hits, or squirms to be put down from the adult’s arms. | | | | |
| The baby turns away or moves away from the adult. | | | | |

This table represents a sample coding sheet from an episode of the “strange situation,” in which an infant (usually about 1 year old) is observed playing in a room with two adults: the child’s mother and a stranger. Each of the four coding categories is scored by the coder from 1 (the baby makes no effort to engage in the behavior) to 7 (the baby makes a significant effort to engage in the behavior). More information about the meaning of the coding can be found in Ainsworth, Blehar, Waters, and Wall (1978).

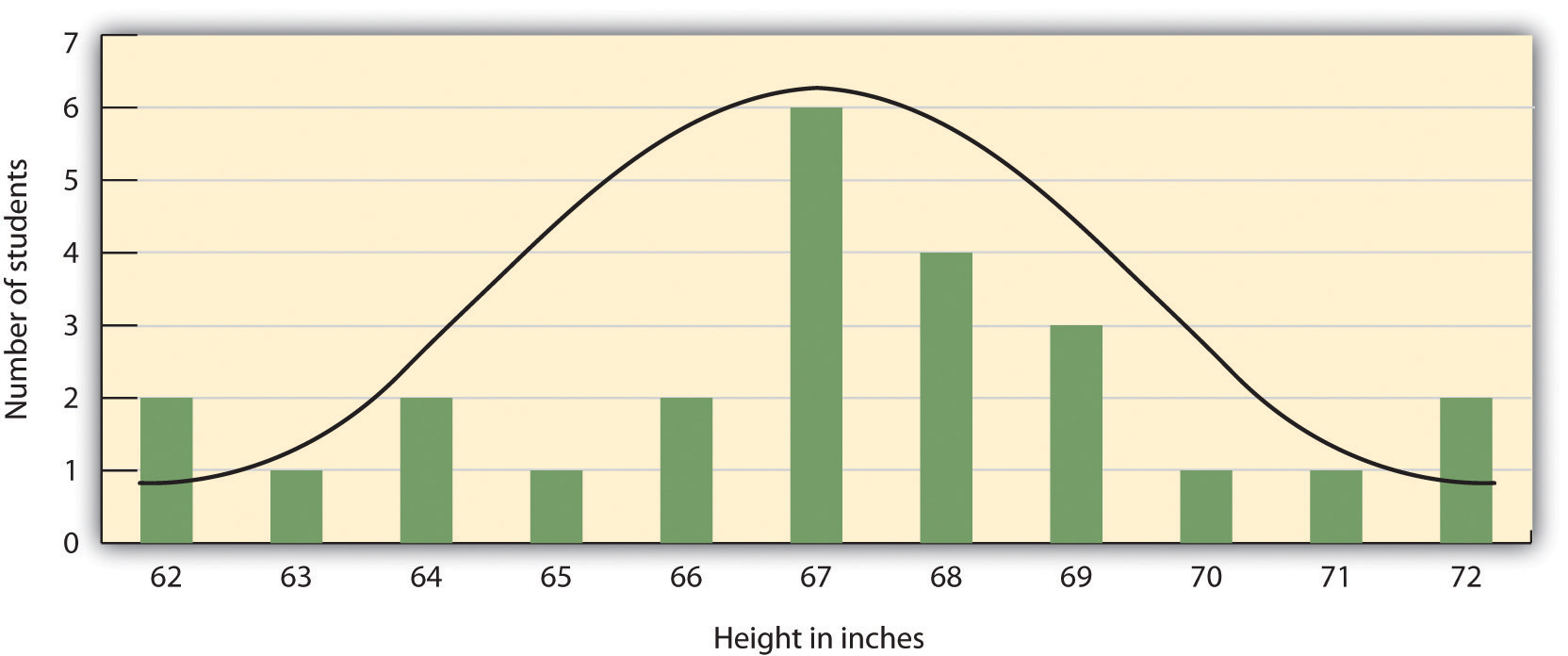

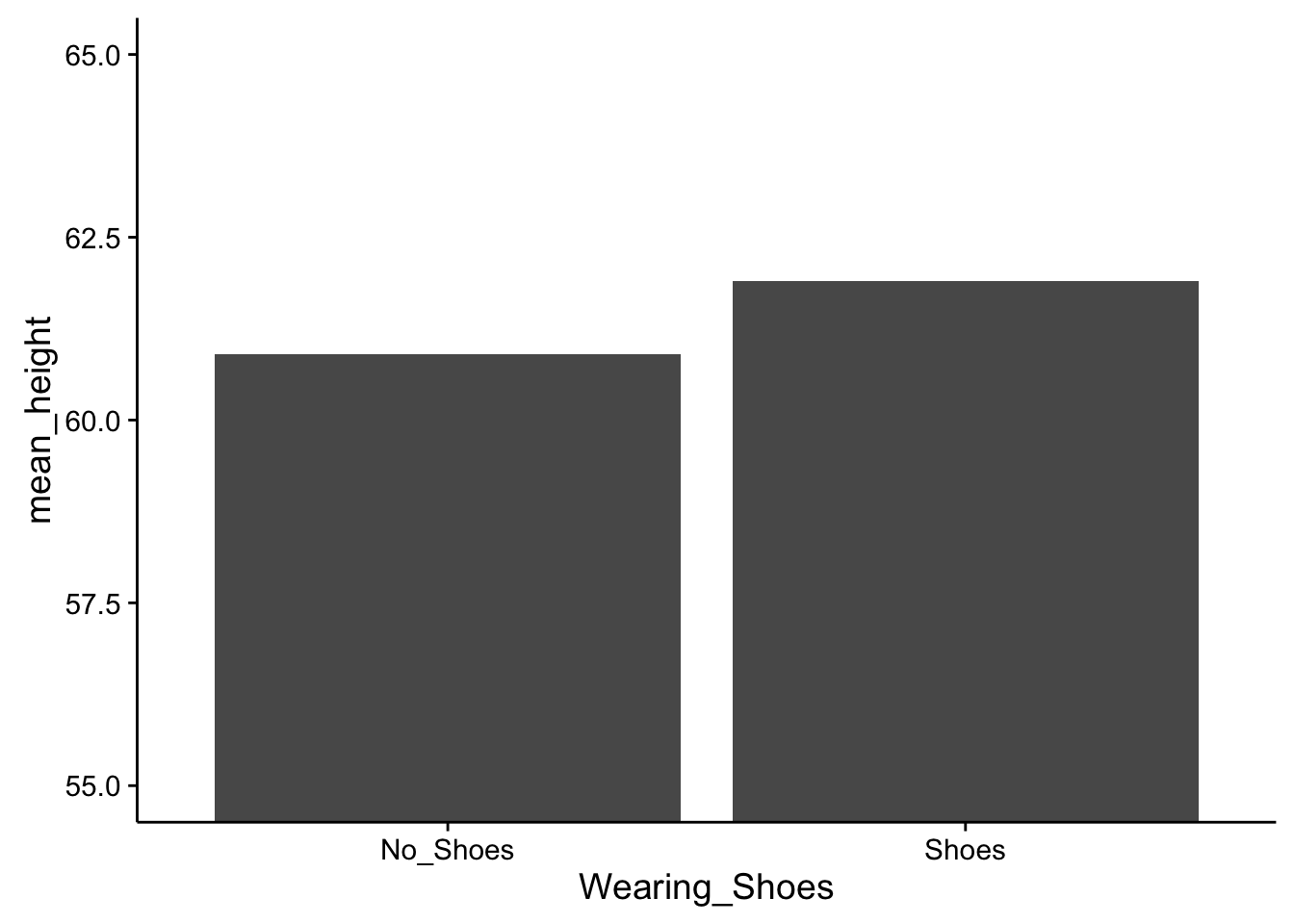

The results of descriptive research projects are analyzed using descriptive statistics: numbers that summarize the distribution of scores on a measured variable. Many variables have distributions similar to that shown in Figure 2.5 “Height Distribution”, where most of the scores are located near the center of the distribution, and the distribution is symmetrical and bell-shaped. A data distribution that is shaped like a bell is known as a normal distribution.

Table 2.4 Height and Family Income for 25 Students

| Student name | Height in inches | Family income in dollars |
|---|---|---|
| Lauren | 62 | 48,000 |
| Courtnie | 62 | 57,000 |
| Leslie | 63 | 93,000 |
| Renee | 64 | 107,000 |
| Katherine | 64 | 110,000 |
| Jordan | 65 | 93,000 |
| Rabiah | 66 | 46,000 |
| Alina | 66 | 84,000 |
| Young Su | 67 | 68,000 |
| Martin | 67 | 49,000 |
| Hanzhu | 67 | 73,000 |
| Caitlin | 67 | 3,800,000 |
| Steven | 67 | 107,000 |
| Emily | 67 | 64,000 |
| Amy | 68 | 67,000 |
| Jonathan | 68 | 51,000 |
| Julian | 68 | 48,000 |
| Alissa | 68 | 93,000 |
| Christine | 69 | 93,000 |
| Candace | 69 | 111,000 |
| Xiaohua | 69 | 56,000 |
| Charlie | 70 | 94,000 |
| Timothy | 71 | 73,000 |
| Ariane | 72 | 70,000 |
| Logan | 72 | 44,000 |

Figure 2.5 Height Distribution

The distribution of the heights of the students in a class will approximate a normal distribution. In this sample the mean (M) = 67.12 and the standard deviation (s) = 2.74.

A distribution can be described in terms of its central tendency (that is, the point in the distribution around which the data are centered) and its dispersion, or spread. The arithmetic average, or arithmetic mean, is the most commonly used measure of central tendency. It is computed by calculating the sum of all the scores of the variable and dividing this sum by the number of participants in the distribution (denoted by the letter N). In the data presented in Figure 2.5 “Height Distribution”, the mean height of the students is 67.12 inches. The sample mean is usually indicated by the letter M.

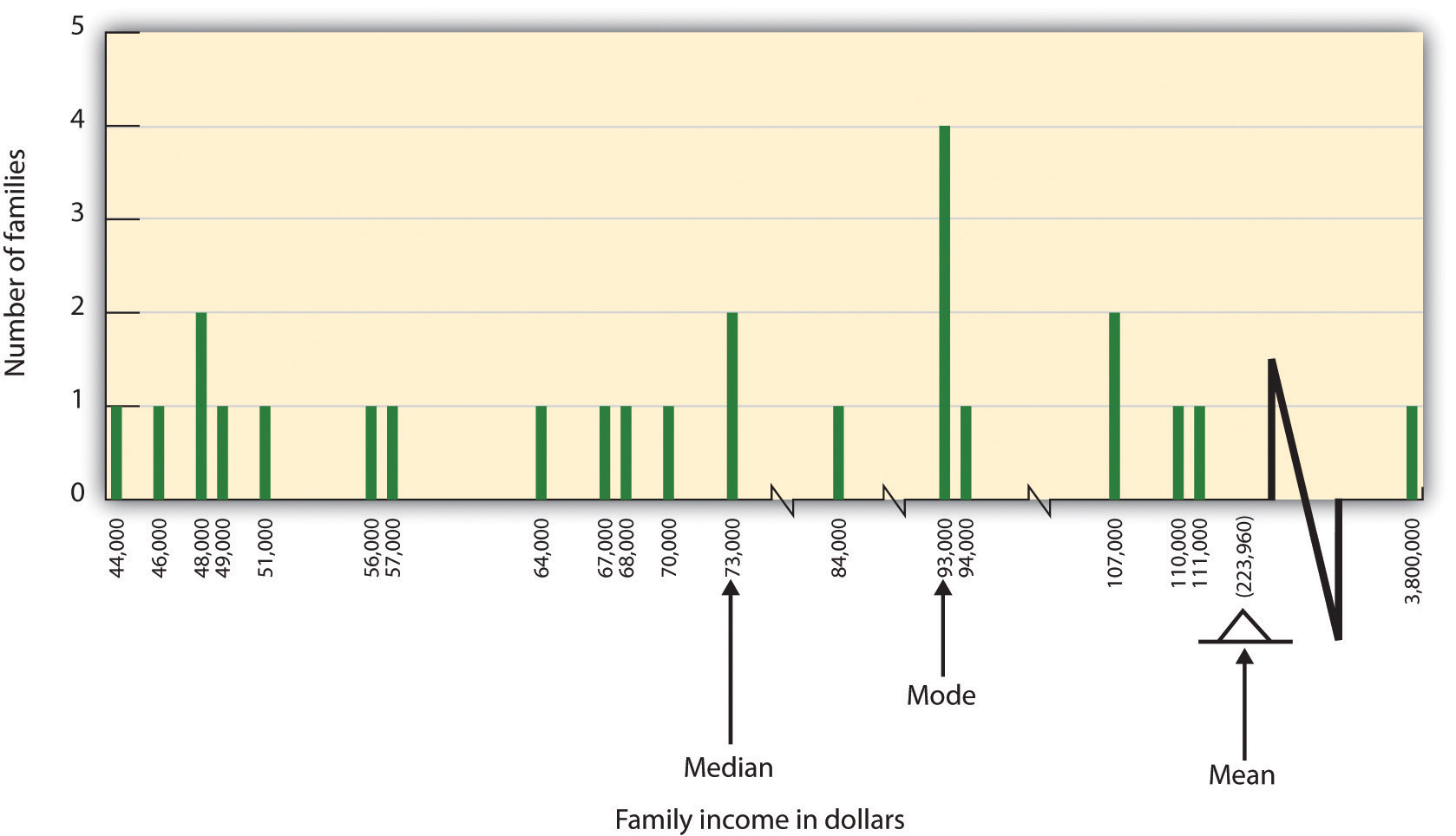

In some cases, however, the data distribution is not symmetrical. This occurs when there are one or more extreme scores (known as outliers) at one end of the distribution. Consider, for instance, the variable of family income (see Figure 2.6 “Family Income Distribution”), which includes an outlier (a value of $3,800,000). In this case the mean is not a good measure of central tendency. Although it appears from Figure 2.6 “Family Income Distribution” that the central tendency of the family income variable should be around $70,000, the mean family income is actually $223,960. The single very extreme income has a disproportionate impact on the mean, resulting in a value that does not represent the central tendency well.

The median is used as an alternative measure of central tendency when distributions are not symmetrical. The median is the score in the center of the distribution, meaning that 50% of the scores are greater than the median and 50% of the scores are less than the median. In our case, the median family income ($73,000) is a much better indication of central tendency than is the mean family income ($223,960).

Figure 2.6 Family Income Distribution

The distribution of family incomes is likely to be nonsymmetrical because some incomes can be very large in comparison to most incomes. In this case the median or the mode is a better indicator of central tendency than is the mean.

A final measure of central tendency, known as the mode, represents the value that occurs most frequently in the distribution. You can see from Figure 2.6 “Family Income Distribution” that the mode for the family income variable is $93,000 (it occurs four times).
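The three measures of central tendency can be checked directly against the data in Table 2.4. The following is a minimal sketch using Python's standard `statistics` module; the variable names are chosen here for illustration and are not part of the original text.

```python
from statistics import mean, median, mode

# Heights (inches) and family incomes (dollars) of the 25 students in Table 2.4,
# listed in the same order as the table rows.
heights = [62, 62, 63, 64, 64, 65, 66, 66, 67, 67, 67, 67, 67,
           67, 68, 68, 68, 68, 69, 69, 69, 70, 71, 72, 72]
incomes = [48_000, 57_000, 93_000, 107_000, 110_000, 93_000, 46_000,
           84_000, 68_000, 49_000, 73_000, 3_800_000, 107_000, 64_000,
           67_000, 51_000, 48_000, 93_000, 93_000, 111_000, 56_000,
           94_000, 73_000, 70_000, 44_000]

mean_height = mean(heights)      # sum of the scores divided by N
mean_income = mean(incomes)      # pulled upward by the single outlier
median_income = median(incomes)  # middle score once the incomes are sorted
mode_income = mode(incomes)      # value that occurs most often

print(f"Mean height:   {mean_height:.2f} inches")
print(f"Mean income:   ${mean_income:,.0f}")
print(f"Median income: ${median_income:,.0f}")
print(f"Modal income:  ${mode_income:,.0f}")
```

Removing the $3,800,000 entry from `incomes` and rerunning the sketch is a quick way to see how strongly one outlier moves the mean while leaving the median nearly unchanged.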

In addition to summarizing the central tendency of a distribution, descriptive statistics convey information about how the scores of the variable are spread around the central tendency. Dispersion refers to the extent to which the scores are tightly clustered around the central tendency or are more spread out away from it.

One simple measure of dispersion is to find the largest (the maximum) and the smallest (the minimum) observed values of the variable and to compute the range of the variable as the maximum observed score minus the minimum observed score. You can check that the range of the height variable in Figure 2.5 “Height Distribution” is 72 – 62 = 10. The standard deviation, symbolized as s, is the most commonly used measure of dispersion. Distributions with a larger standard deviation have more spread. The standard deviation of the height variable is s = 2.74, and the standard deviation of the family income variable is s = $745,337.
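The range and standard deviation reported above can be reproduced from Table 2.4. The sketch below assumes the sample formula for the standard deviation, which divides the sum of squared deviations by N − 1; that choice reproduces the reported values of 2.74 and $745,337. The helper name `sample_sd` is illustrative.

```python
from math import sqrt

# Heights (inches) and family incomes (dollars) from Table 2.4.
heights = [62, 62, 63, 64, 64, 65, 66, 66, 67, 67, 67, 67, 67,
           67, 68, 68, 68, 68, 69, 69, 69, 70, 71, 72, 72]
incomes = [48_000, 57_000, 93_000, 107_000, 110_000, 93_000, 46_000,
           84_000, 68_000, 49_000, 73_000, 3_800_000, 107_000, 64_000,
           67_000, 51_000, 48_000, 93_000, 93_000, 111_000, 56_000,
           94_000, 73_000, 70_000, 44_000]


def sample_sd(scores):
    """Standard deviation using N - 1 in the denominator (an assumption here)."""
    n = len(scores)
    m = sum(scores) / n
    sum_of_squares = sum((x - m) ** 2 for x in scores)
    return sqrt(sum_of_squares / (n - 1))


height_range = max(heights) - min(heights)  # maximum minus minimum
s_height = sample_sd(heights)
s_income = sample_sd(incomes)

print(f"Range of heights: {height_range} inches")
print(f"s (height): {s_height:.2f}")
print(f"s (income): ${s_income:,.0f}")
```

The income standard deviation is larger than almost every income in the table, which is another sign that the single outlier dominates the spread.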

An advantage of descriptive research is that it attempts to capture the complexity of everyday behavior. Case studies provide detailed information about a single person or a small group of people, surveys capture the thoughts or reported behaviors of a large population of people, and naturalistic observation objectively records the behavior of people or animals as it occurs naturally. Thus descriptive research is used to provide a relatively complete understanding of what is currently happening.

Despite these advantages, descriptive research has a distinct disadvantage in that, although it allows us to get an idea of what is currently happening, it is usually limited to static pictures. Although descriptions of particular experiences may be interesting, they are not always transferable to other individuals in other situations, nor do they tell us exactly why specific behaviors or events occurred. For instance, descriptions of individuals who have suffered a stressful event, such as a war or an earthquake, can be used to understand the individuals’ reactions to the event but cannot tell us anything about the long-term effects of the stress. And because there is no comparison group that did not experience the stressful situation, we cannot know what these individuals would be like if they hadn’t had the stressful experience.

Correlational Research: Seeking Relationships Among Variables

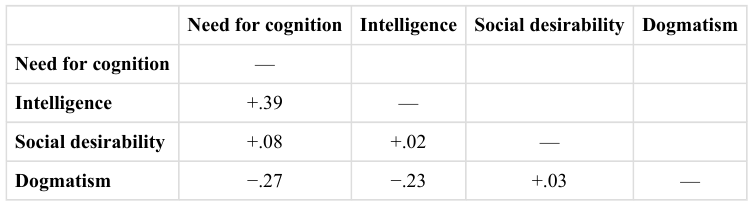

In contrast to descriptive research, which is designed primarily to provide static pictures, correlational research involves the measurement of two or more relevant variables and an assessment of the relationship between or among those variables. For instance, the variables of height and weight are systematically related (correlated) because taller people generally weigh more than shorter people. In the same way, study time and memory errors are also related, because the more time a person is given to study a list of words, the fewer errors he or she will make. When there are two variables in the research design, one of them is called the predictor variable and the other the outcome variable. The research design can be visualized like this, where the curved arrow represents the expected correlation between the two variables:

Figure 2.2.2

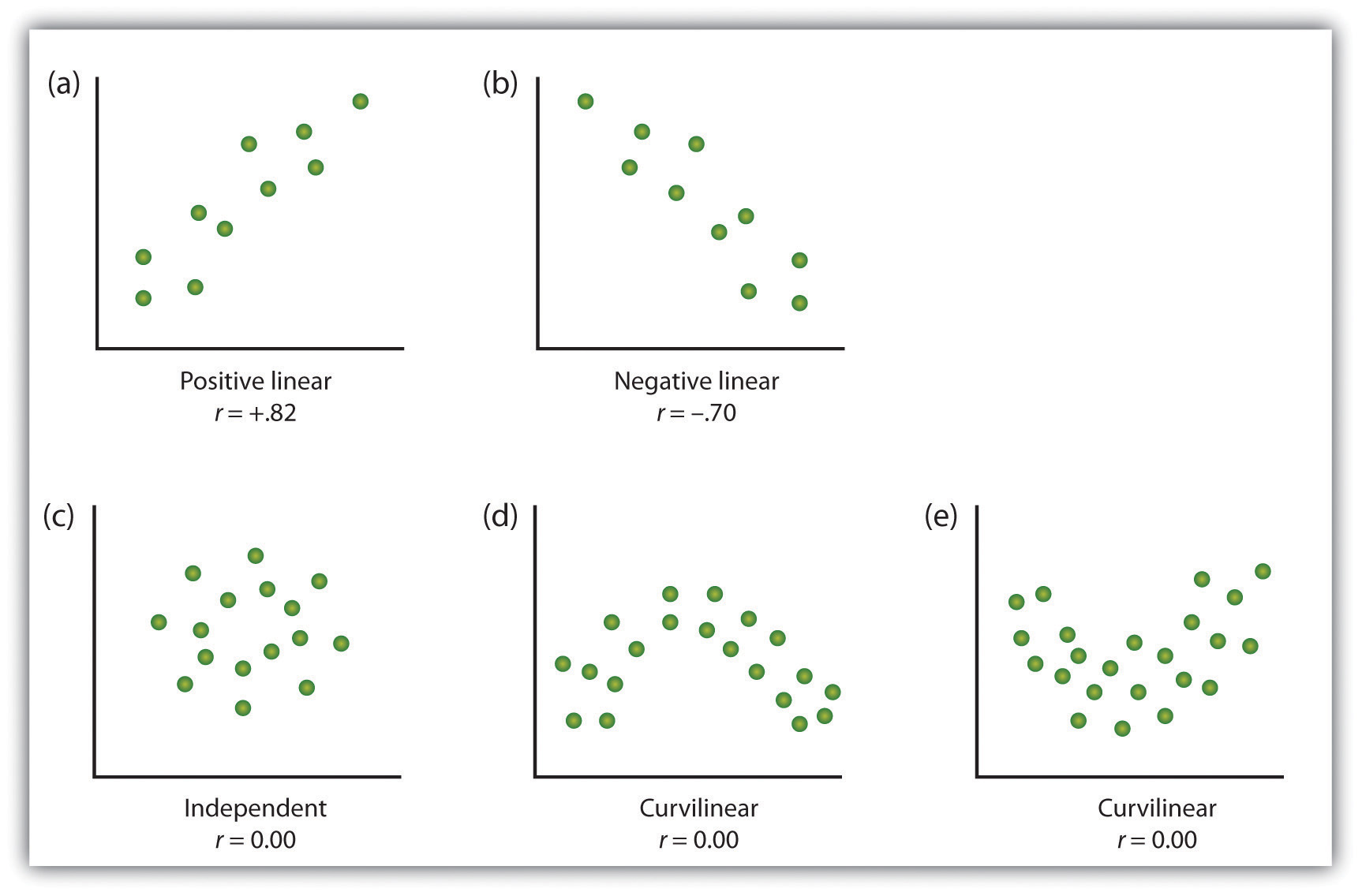

One way of organizing the data from a correlational study with two variables is to graph the values of each of the measured variables using a scatter plot. As you can see in Figure 2.10 “Examples of Scatter Plots”, a scatter plot is a visual image of the relationship between two variables. A point is plotted for each individual at the intersection of his or her scores for the two variables. When the association between the variables on the scatter plot can be easily approximated with a straight line, as in parts (a) and (b) of Figure 2.10 “Examples of Scatter Plots”, the variables are said to have a linear relationship.

When the straight line indicates that individuals who have above-average values for one variable also tend to have above-average values for the other variable, as in part (a), the relationship is said to be positive linear. Examples of positive linear relationships include those between height and weight, between education and income, and between age and mathematical abilities in children. In each case people who score higher on one of the variables also tend to score higher on the other variable. Negative linear relationships, in contrast, as shown in part (b), occur when above-average values for one variable tend to be associated with below-average values for the other variable. Examples of negative linear relationships include those between the age of a child and the number of diapers the child uses, and between practice on and errors made on a learning task. In these cases people who score higher on one of the variables tend to score lower on the other variable.

Relationships between variables that cannot be described with a straight line are known as nonlinear relationships. Part (c) of Figure 2.10 “Examples of Scatter Plots” shows a common pattern in which the distribution of the points is essentially random. In this case there is no relationship at all between the two variables, and they are said to be independent. Parts (d) and (e) of Figure 2.10 “Examples of Scatter Plots” show patterns of association in which, although there is an association, the points are not well described by a single straight line. For instance, part (d) shows the type of relationship that frequently occurs between anxiety and performance. Increases in anxiety from low to moderate levels are associated with performance increases, whereas increases in anxiety from moderate to high levels are associated with decreases in performance. Relationships that change in direction and thus are not described by a single straight line are called curvilinear relationships.

Figure 2.10 Examples of Scatter Plots

Some examples of relationships between two variables as shown in scatter plots. Note that the Pearson correlation coefficient (r) between variables that have curvilinear relationships will likely be close to zero.

Adapted from Stangor, C. (2011). Research methods for the behavioral sciences (4th ed.). Mountain View, CA: Cengage.

The most common statistical measure of the strength of linear relationships among variables is the Pearson correlation coefficient, which is symbolized by the letter r. The value of the correlation coefficient ranges from r = –1.00 to r = +1.00. The direction of the linear relationship is indicated by the sign of the correlation coefficient. Positive values of r (such as r = .54 or r = .67) indicate that the relationship is positive linear (i.e., the pattern of the dots on the scatter plot runs from the lower left to the upper right), whereas negative values of r (such as r = –.30 or r = –.72) indicate negative linear relationships (i.e., the dots run from the upper left to the lower right). The strength of the linear relationship is indexed by the distance of the correlation coefficient from zero (its absolute value). For instance, r = –.54 is a stronger relationship than r = .30, and r = .72 is a stronger relationship than r = –.57. Because the Pearson correlation coefficient only measures linear relationships, variables that have curvilinear relationships are not well described by r, and the observed correlation may be close to zero even when the variables are strongly related.
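To make the behavior of r concrete, the following sketch computes the Pearson correlation coefficient from its definition (the summed cross-products of deviations divided by the square root of the product of the summed squared deviations). All scores are hypothetical and were made up for illustration; the inverted-U scores are deliberately symmetric so that the linear correlation comes out at zero even though the variables are clearly related.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cross / sqrt(ss_x * ss_y)


# Hypothetical scores for seven individuals (not taken from the text).
predictor = [1, 2, 3, 4, 5, 6, 7]
positive_outcome = [2, 4, 5, 4, 6, 7, 9]    # tends to rise with the predictor
negative_outcome = [9, 7, 6, 4, 5, 4, 2]    # tends to fall as the predictor rises

# Curvilinear (inverted-U) pattern, like anxiety and performance:
# the outcome peaks at the middle predictor value and drops off on both sides.
curvilinear_outcome = [-(v - 4) ** 2 for v in predictor]

r_positive = pearson_r(predictor, positive_outcome)
r_negative = pearson_r(predictor, negative_outcome)
r_curvilinear = pearson_r(predictor, curvilinear_outcome)

print(f"positive linear: r = {r_positive:+.2f}")
print(f"negative linear: r = {r_negative:+.2f}")
print(f"curvilinear:     r = {r_curvilinear:+.2f}")
```

The first two results differ only in sign, which illustrates that the sign gives the direction of the relationship and the absolute value gives its strength.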

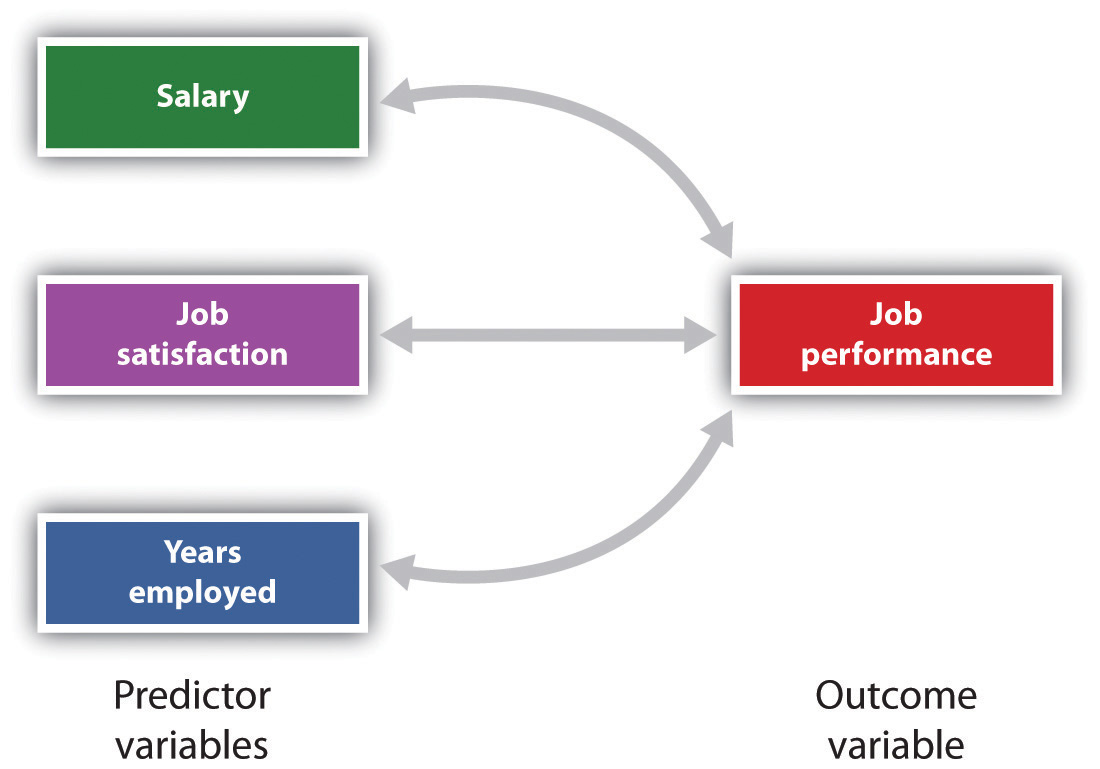

It is also possible to study relationships among more than two measures at the same time. A research design in which more than one predictor variable is used to predict a single outcome variable is analyzed through multiple regression (Aiken & West, 1991). Multiple regression is a statistical technique, based on correlation coefficients among variables, that allows predicting a single outcome variable from more than one predictor variable. For instance, Figure 2.11 “Prediction of Job Performance From Three Predictor Variables” shows a multiple regression analysis in which three predictor variables are used to predict a single outcome. The use of multiple regression analysis shows an important advantage of correlational research designs: they can be used to make predictions about a person’s likely score on an outcome variable (e.g., job performance) based on knowledge of other variables.

Figure 2.11 Prediction of Job Performance From Three Predictor Variables

Multiple regression allows scientists to predict the scores on a single outcome variable using more than one predictor variable.
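As a rough illustration of how several predictors can be combined into one prediction, the sketch below fits regression weights by ordinary least squares, solving the normal equations with plain Python. This is one standard way to carry out the computation, not necessarily the procedure behind Figure 2.11. The three predictors are unnamed stand-ins, and the scores are hypothetical: the outcome is constructed from a known rule (10 + 2·x1 + 0.5·x2 + 1·x3) so that the recovered weights can be checked.

```python
def solve(a, b):
    """Solve the linear system a * x = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        # Partial pivoting: bring the largest remaining entry into the pivot row.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit(predictors, outcome):
    """Least-squares weights (intercept first) from the normal equations."""
    rows = [[1.0] + list(p) for p in predictors]  # leading 1 for the intercept
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, outcome)) for i in range(k)]
    return solve(xtx, xty)


def predict(weights, scores):
    """Predicted outcome for one person's predictor scores."""
    return weights[0] + sum(w * s for w, s in zip(weights[1:], scores))


# Hypothetical scores on three predictors for six people (illustration only).
predictors = [(3, 10, 1), (5, 12, 4), (2, 15, 2), (7, 9, 5), (4, 14, 3), (6, 11, 1)]
# Outcome built from a known rule so the fitted weights can be verified.
outcome = [10 + 2 * x1 + 0.5 * x2 + 1 * x3 for x1, x2, x3 in predictors]

weights = fit(predictors, outcome)
new_person = (4, 13, 2)
predicted = predict(weights, new_person)
print("weights (intercept, x1, x2, x3):", [round(w, 3) for w in weights])
print("predicted outcome for new person:", round(predicted, 2))
```

With real data the outcome would not follow the predictors exactly, so the fitted weights would only approximate the relationship and predictions would carry error.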

An important limitation of correlational research designs is that they cannot be used to draw conclusions about the causal relationships among the measured variables. Consider, for instance, a researcher who has hypothesized that viewing violent behavior will cause increased aggressive play in children. He has collected, from a sample of fourth-grade children, a measure of how many violent television shows each child views during the week, as well as a measure of how aggressively each child plays on the school playground. From his collected data, the researcher discovers a positive correlation between the two measured variables.

Although this positive correlation appears to support the researcher’s hypothesis, it cannot be taken to indicate that viewing violent television causes aggressive behavior. Although the researcher is tempted to assume that viewing violent television causes aggressive play,

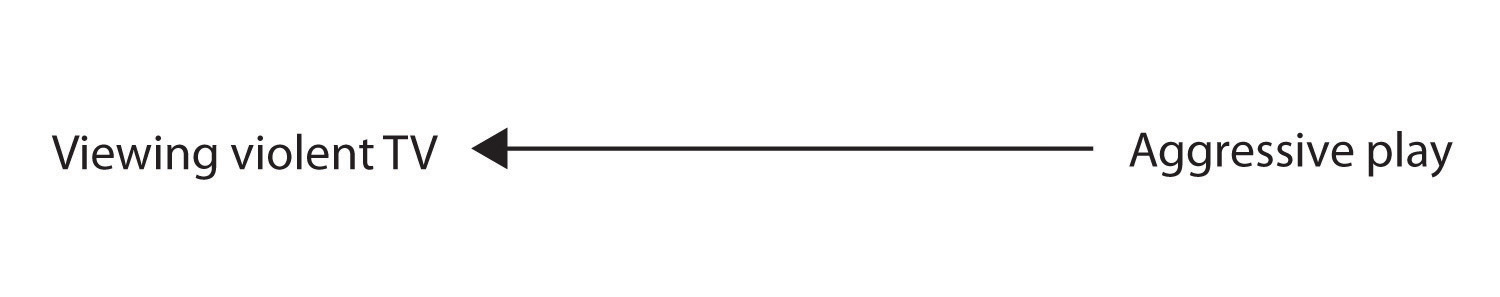

there are other possibilities. One alternate possibility is that the causal direction is exactly opposite from what has been hypothesized. Perhaps children who have behaved aggressively at school develop residual excitement that leads them to want to watch violent television shows at home:

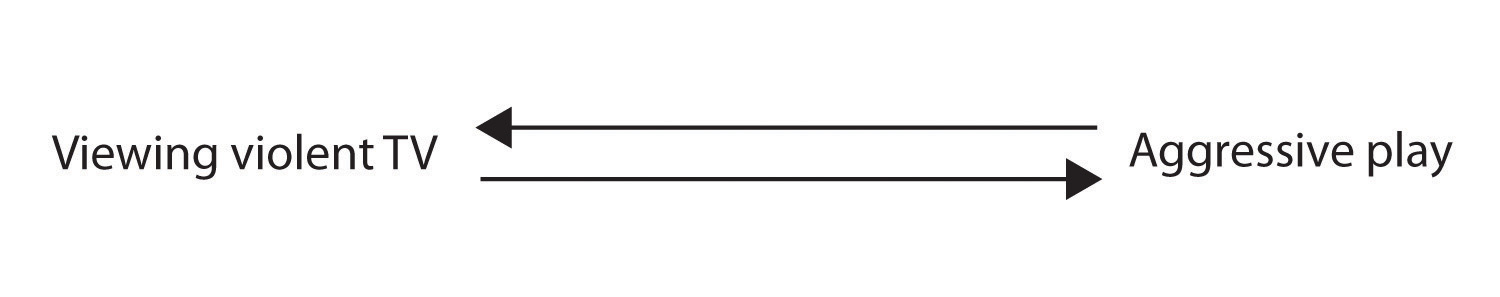

Although this possibility may seem less likely, there is no way to rule out the possibility of such reverse causation on the basis of this observed correlation. It is also possible that both causal directions are operating and that the two variables cause each other:

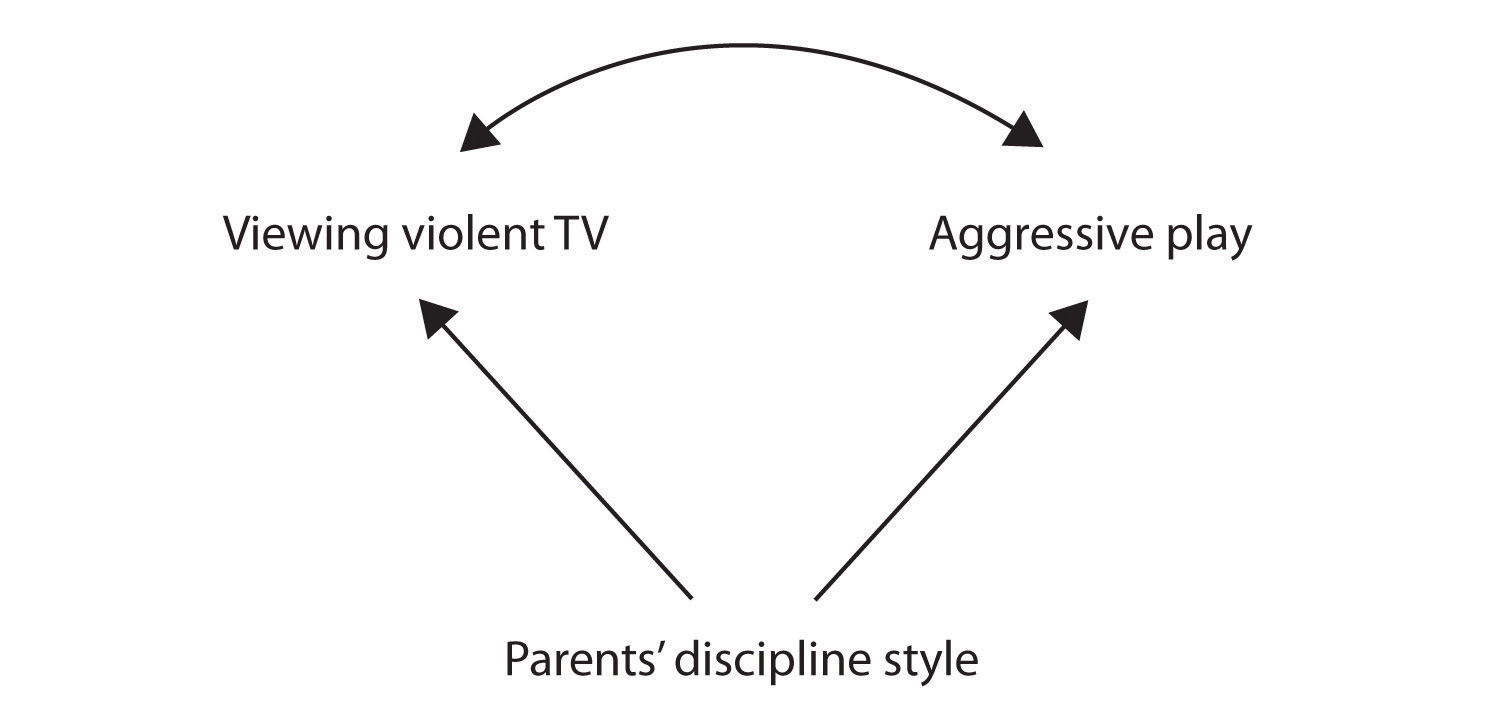

Still another possible explanation for the observed correlation is that it has been produced by the presence of a common-causal variable (also known as a third variable). A common-causal variable is a variable that is not part of the research hypothesis but that causes both the predictor and the outcome variable and thus produces the observed correlation between them. In our example a potential common-causal variable is the discipline style of the children’s parents. Parents who use a harsh and punitive discipline style may produce children who both like to watch violent television and who behave aggressively in comparison to children whose parents use less harsh discipline.

In this case, television viewing and aggressive play would be positively correlated even though neither one caused the other; both were caused by the discipline style of the parents. When the predictor and outcome variables are both caused by a common-causal variable, the observed relationship between them is said to be spurious. A spurious relationship is a relationship between two variables in which a common-causal variable produces and “explains away” the relationship. If effects of the common-causal variable were taken away, or controlled for, the relationship between the predictor and outcome variables would disappear. In the example the relationship between aggression and television viewing might be spurious because by controlling for the effect of the parents’ disciplining style, the relationship between television viewing and aggressive behavior might go away.
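A small simulation can show how a common-causal variable produces a spurious correlation. In this sketch, which uses entirely simulated (not real) data, a hypothetical "discipline" score influences both television viewing and aggression, while neither of those two variables influences the other. Controlling for discipline is done here by correlating the residuals left over after predicting each variable from discipline, which is one common way to compute a partial correlation. The sample size, seed, and noise levels are arbitrary assumptions.

```python
import random
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cross = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cross / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def residuals(y, z):
    """What is left of y after a straight-line prediction from z."""
    n = len(y)
    mz, my = sum(z) / n, sum(y) / n
    slope = (sum((a - mz) * (b - my) for a, b in zip(z, y))
             / sum((a - mz) ** 2 for a in z))
    return [b - (my + slope * (a - mz)) for a, b in zip(z, y)]


rng = random.Random(42)  # fixed seed so the run is repeatable
n = 5000                 # number of simulated children (arbitrary)

# The common-causal variable drives both measured variables;
# tv and aggression never influence each other in this simulation.
discipline = [rng.gauss(0, 1) for _ in range(n)]
tv = [d + rng.gauss(0, 1) for d in discipline]
aggression = [d + rng.gauss(0, 1) for d in discipline]

r_observed = pearson_r(tv, aggression)
r_controlled = pearson_r(residuals(tv, discipline),
                         residuals(aggression, discipline))

print(f"observed correlation:       r = {r_observed:.2f}")
print(f"controlling for discipline: r = {r_controlled:.2f}")
```

The observed correlation is clearly positive, yet it shrinks toward zero once the common-causal variable is controlled. In real research the difficulty is that the common-causal variable is often unmeasured, so this correction is not available.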

Common-causal variables in correlational research designs can be thought of as “mystery” variables because, as they have not been measured, their presence and identity are usually unknown to the researcher. Since it is not possible to measure every variable that could cause both the predictor and outcome variables, the existence of an unknown common-causal variable is always a possibility. For this reason, we are left with the basic limitation of correlational research: Correlation does not demonstrate causation. It is important that when you read about correlational research projects, you keep in mind the possibility of spurious relationships, and be sure to interpret the findings appropriately. Although correlational research is sometimes reported as demonstrating causality without any mention being made of the possibility of reverse causation or common-causal variables, informed consumers of research, like you, are aware of these interpretational problems.

In sum, correlational research designs have both strengths and limitations. One strength is that they can be used when experimental research is not possible because the predictor variables cannot be manipulated. Correlational designs also have the advantage of allowing the researcher to study behavior as it occurs in everyday life. And we can also use correlational designs to make predictions—for instance, to predict from the scores on their battery of tests the success of job trainees during a training session. But we cannot use such correlational information to determine whether the training caused better job performance. For that, researchers rely on experiments.

Experimental Research: Understanding the Causes of Behavior

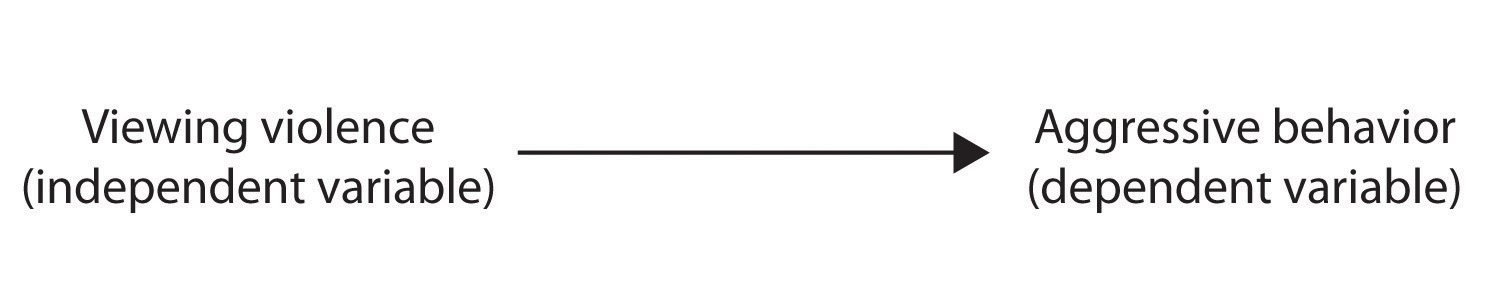

The goal of experimental research design is to provide more definitive conclusions about the causal relationships among the variables in the research hypothesis than are available from correlational designs. In an experimental research design, the variables of interest are called the independent variable (or variables) and the dependent variable. The independent variable in an experiment is the causing variable that is created (manipulated) by the experimenter. The dependent variable in an experiment is a measured variable that is expected to be influenced by the experimental manipulation. The research hypothesis suggests that the manipulated independent variable or variables will cause changes in the measured dependent variables. We can diagram the research hypothesis by using an arrow that points in one direction. This demonstrates the expected direction of causality:

Figure 2.2.3

Research Focus: Video Games and Aggression

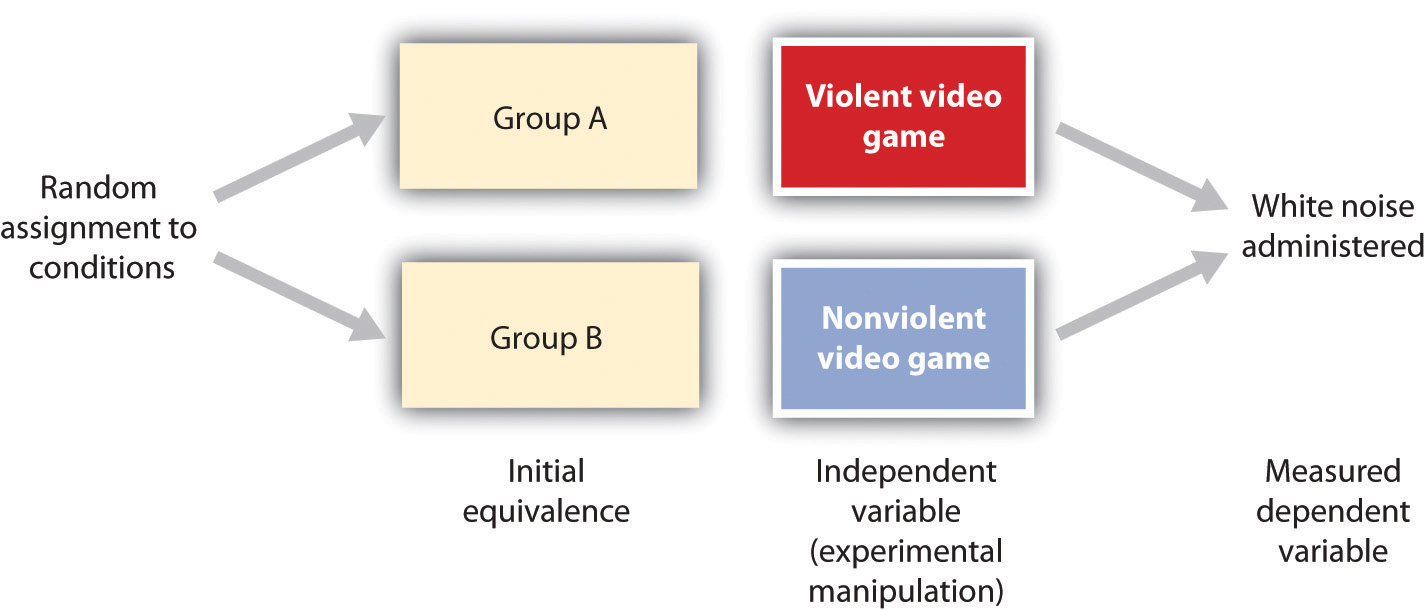

Consider an experiment conducted by Anderson and Dill (2000). The study was designed to test the hypothesis that playing violent video games would increase aggressive behavior. In this research, male and female undergraduates from Iowa State University were given a chance to play either a violent video game (Wolfenstein 3D) or a nonviolent video game (Myst). During the experimental session, the participants played their assigned video games for 15 minutes. Then, after the play, each participant played a competitive game with an opponent in which the participant could deliver blasts of white noise through the earphones of the opponent. The operational definition of the dependent variable (aggressive behavior) was the level and duration of noise delivered to the opponent. The design of the experiment is shown in Figure 2.17 “An Experimental Research Design”.

Figure 2.17 An Experimental Research Design

Two advantages of the experimental research design are (1) the assurance that the independent variable (also known as the experimental manipulation) occurs prior to the measured dependent variable, and (2) the creation of initial equivalence between the conditions of the experiment (in this case by using random assignment to conditions).

Experimental designs have two very nice features. For one, they guarantee that the independent variable occurs prior to the measurement of the dependent variable. This eliminates the possibility of reverse causation. Second, the influence of common-causal variables is controlled, and thus eliminated, by creating initial equivalence among the participants in each of the experimental conditions before the manipulation occurs.

The most common method of creating equivalence among the experimental conditions is through random assignment to conditions, a procedure in which the condition that each participant is assigned to is determined through a random process, such as drawing numbers out of an envelope or using a random number table. Anderson and Dill first randomly assigned about 100 participants to each of their two groups (Group A and Group B). Because they used random assignment to conditions, they could be confident that, before the experimental manipulation occurred, the students in Group A were, on average, equivalent to the students in Group B on every possible variable, including variables that are likely to be related to aggression, such as parental discipline style, peer relationships, hormone levels, diet, and in fact everything else.
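Random assignment to conditions is simple to carry out in software. The sketch below shuffles a list of participants and deals them alternately into the conditions, which yields groups of equal size. The participant IDs, condition labels, and the `randomly_assign` helper are hypothetical and are not taken from Anderson and Dill's procedure.

```python
import random


def randomly_assign(participants, conditions, seed=None):
    """Shuffle participants, then deal them into conditions in turn."""
    rng = random.Random(seed)      # a seed makes the assignment reproducible
    shuffled = list(participants)  # copy so the caller's list is untouched
    rng.shuffle(shuffled)
    groups = {condition: [] for condition in conditions}
    for index, person in enumerate(shuffled):
        groups[conditions[index % len(conditions)]].append(person)
    return groups


# 200 hypothetical participant IDs, P001 through P200.
participants = [f"P{i:03d}" for i in range(1, 201)]
groups = randomly_assign(participants, ["violent game", "nonviolent game"], seed=1)

for condition, members in groups.items():
    print(f"{condition}: {len(members)} participants, first five: {members[:5]}")
```

Because chance alone decides who lands in which group, any preexisting characteristic is expected to be balanced across the groups on average, and that balance becomes more dependable as the groups get larger.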

Then, after they had created initial equivalence, Anderson and Dill created the experimental manipulation: they had the participants in Group A play the violent game and the participants in Group B play the nonviolent game. Then they compared the dependent variable (the white noise blasts) between the two groups, finding that the students who had played the violent video game gave significantly longer noise blasts than did the students who had played the nonviolent game.

Anderson and Dill had from the outset created initial equivalence between the groups. This initial equivalence allowed them to observe differences in the white noise levels between the two groups after the experimental manipulation, leading to the conclusion that it was the independent variable (and not some other variable) that caused these differences. The idea is that the only thing that was different between the students in the two groups was the video game they had played.

Despite the advantage of determining causation, experiments do have limitations. One is that they are often conducted in laboratory situations rather than in the everyday lives of people. Therefore, we do not know whether results that we find in a laboratory setting will necessarily hold up in everyday life. Second, and more important, is that some of the most interesting and key social variables cannot be experimentally manipulated. If we want to study the influence of the size of a mob on the destructiveness of its behavior, or to compare the personality characteristics of people who join suicide cults with those of people who do not join such cults, these relationships must be assessed using correlational designs, because it is simply not possible to experimentally manipulate these variables.

Key Takeaways

- Descriptive, correlational, and experimental research designs are used to collect and analyze data.

- Descriptive designs include case studies, surveys, and naturalistic observation. The goal of these designs is to get a picture of the current thoughts, feelings, or behaviors in a given group of people. Descriptive research is summarized using descriptive statistics.

- Correlational research designs measure two or more relevant variables and assess a relationship between or among them. The variables may be presented on a scatter plot to visually show the relationships. The Pearson correlation coefficient (r) is a measure of the strength of the linear relationship between two variables.

- Common-causal variables may cause both the predictor and outcome variable in a correlational design, producing a spurious relationship. The possibility of common-causal variables makes it impossible to draw causal conclusions from correlational research designs.

- Experimental research involves the manipulation of an independent variable and the measurement of a dependent variable. Random assignment to conditions is normally used to create initial equivalence between the groups, allowing researchers to draw causal conclusions.
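To make the Pearson correlation coefficient mentioned above concrete, here is a small sketch that computes r from its definition (the covariation of the two variables divided by the product of their spreads). The variable names and the five data points are hypothetical and are used only for illustration.

```python
import math


def pearson_r(x, y):
    """Pearson correlation: shared variation divided by the product of the spreads."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    covariation = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sum((a - mean_x) ** 2 for a in x)
    spread_y = sum((b - mean_y) ** 2 for b in y)
    return covariation / math.sqrt(spread_x * spread_y)


# Hypothetical data, invented for this example.
hours_studied = [1, 2, 3, 4, 5]
exam_score = [52, 60, 61, 70, 77]

r = pearson_r(hours_studied, exam_score)
print(round(r, 2))
```

A value of r near +1 or -1 indicates a strong linear relationship, but, as the takeaways note, even a strong r says nothing about whether a common-causal variable is producing it.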

Exercises and Critical Thinking

- There is a negative correlation between the row that a student sits in in a large class (when the rows are numbered from front to back) and his or her final grade in the class. Do you think this represents a causal relationship or a spurious relationship, and why?

- Think of two variables (other than those mentioned in this book) that are likely to be correlated, but in which the correlation is probably spurious. What is the likely common-causal variable that is producing the relationship?

- Imagine a researcher wants to test the hypothesis that participating in psychotherapy will cause a decrease in reported anxiety. Describe the type of research design the investigator might use to draw this conclusion. What would be the independent and dependent variables in the research?

Aiken, L., & West, S. (1991). Multiple regression: Testing and interpreting interactions. Newbury Park, CA: Sage.

Ainsworth, M. S., Blehar, M. C., Waters, E., & Wall, S. (1978). Patterns of attachment: A psychological study of the strange situation. Hillsdale, NJ: Lawrence Erlbaum Associates.

Anderson, C. A., & Dill, K. E. (2000). Video games and aggressive thoughts, feelings, and behavior in the laboratory and in life. Journal of Personality and Social Psychology, 78(4), 772–790.

Damasio, H., Grabowski, T., Frank, R., Galaburda, A. M., & Damasio, A. R. (2005). The return of Phineas Gage: Clues about the brain from the skull of a famous patient. In J. T. Cacioppo & G. G. Berntson (Eds.), Social neuroscience: Key readings (pp. 21–28). New York, NY: Psychology Press.

Freud, S. (1964). Analysis of phobia in a five-year-old boy. In E. A. Southwell & M. Merbaum (Eds.), Personality: Readings in theory and research (pp. 3–32). Belmont, CA: Wadsworth. (Original work published 1909)

Kotowicz, Z. (2007). The strange case of Phineas Gage. History of the Human Sciences, 20(1), 115–131.

Rokeach, M. (1964). The three Christs of Ypsilanti: A psychological study. New York, NY: Knopf.

Introduction to Psychology Copyright © 2015 by University of Minnesota is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.

5.1 Experiment Basics

Learning objectives.

- Explain what an experiment is and recognize examples of studies that are experiments and studies that are not experiments.

- Distinguish between the manipulation of the independent variable and control of extraneous variables and explain the importance of each.

- Recognize examples of confounding variables and explain how they affect the internal validity of a study.

What Is an Experiment?

As we saw earlier in the book, an experiment is a type of study designed specifically to answer the question of whether there is a causal relationship between two variables, in other words, whether changes in an independent variable cause a change in a dependent variable. Experiments have two fundamental features. The first is that the researchers manipulate, or systematically vary, the level of the independent variable. The different levels of the independent variable are called conditions. For example, in Darley and Latané’s experiment, the independent variable was the number of witnesses that participants believed to be present. The researchers manipulated this independent variable by telling participants that there were either one, two, or five other students involved in the discussion, thereby creating three conditions. A new researcher can easily confuse these terms and conclude that there are three independent variables in this situation (one, two, or five students involved in the discussion), but there is actually only one independent variable (number of witnesses) with three different levels or conditions (one, two, or five students). The second fundamental feature of an experiment is that the researcher controls, or minimizes the variability in, variables other than the independent and dependent variables. These other variables are called extraneous variables. Darley and Latané tested all their participants in the same room, exposed them to the same emergency situation, and so on. They also randomly assigned their participants to conditions so that the three groups would be similar to each other to begin with. Notice that although the words manipulation and control have similar meanings in everyday language, researchers make a clear distinction between them. They manipulate the independent variable by systematically changing its levels and control other variables by holding them constant.

Manipulation of the Independent Variable

Again, to manipulate an independent variable means to change its level systematically so that different groups of participants are exposed to different levels of that variable, or the same group of participants is exposed to different levels at different times. For example, to see whether expressive writing affects people’s health, a researcher might instruct some participants to write about traumatic experiences and others to write about neutral experiences. As discussed earlier in this chapter, the different levels of the independent variable are referred to as conditions, and researchers often give the conditions short descriptive names to make it easy to talk and write about them. In this case, the conditions might be called the “traumatic condition” and the “neutral condition.”

Notice that the manipulation of an independent variable must involve the active intervention of the researcher. Comparing groups of people who differ on the independent variable before the study begins is not the same as manipulating that variable. For example, a researcher who compares the health of people who already keep a journal with the health of people who do not keep a journal has not manipulated this variable and therefore has not conducted an experiment. This distinction is important because groups that already differ in one way at the beginning of a study are likely to differ in other ways too. For example, people who choose to keep journals might also be more conscientious, more introverted, or less stressed than people who do not. Therefore, any observed difference between the two groups in terms of their health might have been caused by whether or not they keep a journal, or it might have been caused by any of the other differences between people who do and do not keep journals. Thus the active manipulation of the independent variable is crucial for eliminating potential alternative explanations for the results.

Of course, there are many situations in which the independent variable cannot be manipulated for practical or ethical reasons and therefore an experiment is not possible. For example, whether or not people have a significant early illness experience cannot be manipulated, making it impossible to conduct an experiment on the effect of early illness experiences on the development of hypochondriasis. This caveat does not mean it is impossible to study the relationship between early illness experiences and hypochondriasis—only that it must be done using nonexperimental approaches. We will discuss this type of methodology in detail later in the book.

Independent variables can be manipulated to create two conditions, and an experiment involving a single independent variable with two conditions is often referred to as a single-factor two-level design. However, sometimes greater insights can be gained by adding more conditions to an experiment. When an experiment has one independent variable that is manipulated to produce more than two conditions, it is referred to as a single-factor multi-level design. So rather than comparing a condition in which there was one witness to a condition in which there were five witnesses (which would represent a single-factor two-level design), Darley and Latané used a single-factor multi-level design, manipulating the independent variable to produce three conditions (a one-witness, a two-witness, and a five-witness condition).

Control of Extraneous Variables

As we have seen previously in the chapter, an extraneous variable is anything that varies in the context of a study other than the independent and dependent variables. In an experiment on the effect of expressive writing on health, for example, extraneous variables would include participant variables (individual differences) such as their writing ability, their diet, and their gender. They would also include situational or task variables such as the time of day when participants write, whether they write by hand or on a computer, and the weather. Extraneous variables pose a problem because many of them are likely to have some effect on the dependent variable. For example, participants’ health will be affected by many things other than whether or not they engage in expressive writing. This influencing factor can make it difficult to separate the effect of the independent variable from the effects of the extraneous variables, which is why it is important to control extraneous variables by holding them constant.

Extraneous Variables as “Noise”

Extraneous variables make it difficult to detect the effect of the independent variable in two ways. One is by adding variability or “noise” to the data. Imagine a simple experiment on the effect of mood (happy vs. sad) on the number of happy childhood events people are able to recall. Participants are put into a happy or sad mood (by showing them a happy or sad video clip) and then asked to recall as many happy childhood events as they can. The two leftmost columns of Table 5.1 show what the data might look like if there were no extraneous variables and the number of happy childhood events participants recalled was affected only by their moods. Every participant in the happy mood condition recalled exactly four happy childhood events, and every participant in the sad mood condition recalled exactly three. The effect of mood here is quite obvious. In reality, however, the data would probably look more like those in the two rightmost columns of Table 5.1. Even in the happy mood condition, some participants would recall fewer happy memories because they have fewer to draw on, use less effective recall strategies, or are less motivated. And even in the sad mood condition, some participants would recall more happy childhood memories because they have more happy memories to draw on, they use more effective recall strategies, or they are more motivated. Although the mean difference between the two groups is the same as in the idealized data, this difference is much less obvious in the context of the greater variability in the data. Thus one reason researchers try to control extraneous variables is so their data look more like the idealized data in Table 5.1, which makes the effect of the independent variable easier to detect (although real data never look quite that good).

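Table 5.1 Hypothetical idealized data and realistic noisy data (number of happy childhood events recalled)

| Idealized: happy mood | Idealized: sad mood | Realistic: happy mood | Realistic: sad mood |
|---|---|---|---|
| 4 | 3 | 3 | 1 |
| 4 | 3 | 6 | 3 |
| 4 | 3 | 2 | 4 |
| 4 | 3 | 4 | 0 |
| 4 | 3 | 5 | 5 |
| 4 | 3 | 2 | 7 |
| 4 | 3 | 3 | 2 |
| 4 | 3 | 1 | 5 |
| 4 | 3 | 6 | 1 |
| 4 | 3 | 8 | 2 |
| M = 4 | M = 3 | M = 4 | M = 3 |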
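The point about noise can be checked directly from the numbers in Table 5.1. The sketch below recomputes the mean difference and the standard deviation for each pair of columns; the variable names are our own, and the sample standard deviation is used here as one reasonable measure of variability.

```python
from statistics import mean, stdev

# Values copied from Table 5.1 (number of happy childhood events recalled).
ideal_happy = [4] * 10
ideal_sad = [3] * 10
noisy_happy = [3, 6, 2, 4, 5, 2, 3, 1, 6, 8]
noisy_sad = [1, 3, 4, 0, 5, 7, 2, 5, 1, 2]

for label, happy, sad in [
    ("idealized", ideal_happy, ideal_sad),
    ("realistic", noisy_happy, noisy_sad),
]:
    difference = mean(happy) - mean(sad)  # effect of mood on recall
    print(label, "difference:", difference,
          "SD happy:", round(stdev(happy), 2),
          "SD sad:", round(stdev(sad), 2))
```

Both data sets show the same one-event difference between the means, but in the realistic columns that difference sits inside a spread of more than two events in each group, which is why it is so much harder to see.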

One way to control extraneous variables is to hold them constant. This technique can mean holding situation or task variables constant by testing all participants in the same location, giving them identical instructions, treating them in the same way, and so on. It can also mean holding participant variables constant. For example, many studies of language limit participants to right-handed people, who generally have their language areas isolated in their left cerebral hemispheres. Left-handed people are more likely to have their language areas isolated in their right cerebral hemispheres or distributed across both hemispheres, which can change the way they process language and thereby add noise to the data.

In principle, researchers can control extraneous variables by limiting participants to one very specific category of person, such as 20-year-old, heterosexual, female, right-handed psychology majors. The obvious downside to this approach is that it would lower the external validity of the study—in particular, the extent to which the results can be generalized beyond the people actually studied. For example, it might be unclear whether results obtained with a sample of younger heterosexual women would apply to older homosexual men. In many situations, the advantages of a diverse sample (increased external validity) outweigh the reduction in noise achieved by a homogeneous one.

Extraneous Variables as Confounding Variables

The second way that extraneous variables can make it difficult to detect the effect of the independent variable is by becoming confounding variables. A confounding variable is an extraneous variable that differs on average across levels of the independent variable (i.e., it is an extraneous variable that varies systematically with the independent variable). For example, in almost all experiments, participants’ intelligence quotients (IQs) will be an extraneous variable. But as long as there are participants with lower and higher IQs in each condition so that the average IQ is roughly equal across the conditions, then this variation is probably acceptable (and may even be desirable). What would be bad, however, would be for participants in one condition to have substantially lower IQs on average and participants in another condition to have substantially higher IQs on average. In this case, IQ would be a confounding variable.
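One simple way to look for a possible confound is to compare the average of an extraneous variable across conditions. The sketch below does this for two sets of IQ scores; all of the scores, the condition labels, and the helper name `mean_gap` are invented for illustration.

```python
from statistics import mean

# Hypothetical IQ scores, invented for illustration only.
balanced = {
    "condition A": [95, 100, 105, 110, 90],
    "condition B": [92, 101, 104, 108, 95],
}
confounded = {
    "condition A": [110, 115, 120, 108, 112],
    "condition B": [88, 92, 95, 90, 85],
}


def mean_gap(groups):
    """Absolute difference between the two condition means of an extraneous variable."""
    first, second = (mean(scores) for scores in groups.values())
    return abs(first - second)


print("balanced gap:", mean_gap(balanced))
print("confounded gap:", mean_gap(confounded))
```

In the first data set IQ varies within each condition but has the same average in both, so it only adds noise. In the second, IQ differs systematically between the conditions, so it offers an alternative explanation for any difference in the dependent variable.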

To confound means to confuse, and this effect is exactly why confounding variables are undesirable. Because they differ systematically across conditions, just like the independent variable, they provide an alternative explanation for any observed difference in the dependent variable. Figure 5.1 shows the results of a hypothetical study, in which participants in a positive mood condition scored higher on a memory task than participants in a negative mood condition. But if IQ is a confounding variable (with participants in the positive mood condition having higher IQs on average than participants in the negative mood condition), then it is unclear whether it was the positive moods or the higher IQs that caused participants in the first condition to score higher. One way to avoid confounding variables is by holding extraneous variables constant. For example, one could prevent IQ from becoming a confounding variable by limiting participants only to those with IQs of exactly 100. But this approach is not always desirable for reasons we have already discussed. A second and much more general approach, random assignment to conditions, will be discussed in detail shortly.

Figure 5.1 Hypothetical Results From a Study on the Effect of Mood on Memory. Because IQ also differs across conditions, it is a confounding variable.

Key Takeaways

- An experiment is a type of empirical study that features the manipulation of an independent variable, the measurement of a dependent variable, and control of extraneous variables.

- An extraneous variable is any variable other than the independent and dependent variables. A confound is an extraneous variable that varies systematically with the independent variable.

- Practice: List five variables that can be manipulated by the researcher in an experiment. List five variables that cannot be manipulated by the researcher in an experiment.

- Effect of parietal lobe damage on people’s ability to do basic arithmetic.

- Effect of being clinically depressed on the number of close friendships people have.

- Effect of group training on the social skills of teenagers with Asperger’s syndrome.

- Effect of paying people to take an IQ test on their performance on that test.

Research Methods in Psychology

5. Factorial Designs

We have usually no knowledge that any one factor will exert its effects independently of all others that can be varied, or that its effects are particularly simply related to variations in these other factors. —Ronald Fisher

In Chapter 1 we briefly described a study conducted by Simone Schnall and her colleagues, in which they found that washing one’s hands leads people to view moral transgressions as less wrong [SBH08]. In a different but related study, Schnall and her colleagues investigated whether feeling physically disgusted causes people to make harsher moral judgments [SHCJ08]. In this experiment, they manipulated participants’ feelings of disgust by testing them in either a clean room or a messy room that contained dirty dishes, an overflowing wastebasket, and a chewed-up pen. They also used a self-report questionnaire to measure the amount of attention that people pay to their own bodily sensations. They called this “private body consciousness.” They measured their primary dependent variable, the harshness of people’s moral judgments, by describing different behaviors (e.g., eating one’s dead dog, failing to return a found wallet) and having participants rate the moral acceptability of each one on a scale of 1 to 7. They also measured some other dependent variables, including participants’ willingness to eat at a new restaurant. Finally, the researchers asked participants to rate their current level of disgust and other emotions. The primary results of this study were that participants in the messy room were in fact more disgusted and made harsher moral judgments than participants in the clean room, but only if they scored relatively high in private body consciousness.

The research designs we have considered so far have been simple—focusing on a question about one variable or about a statistical relationship between two variables. But in many ways, the complex design of this experiment undertaken by Schnall and her colleagues is more typical of research in psychology. Fortunately, we have already covered the basic elements of such designs in previous chapters. In this chapter, we look closely at how and why researchers combine these basic elements into more complex designs. We start with complex experiments—considering first the inclusion of multiple dependent variables and then the inclusion of multiple independent variables. Finally, we look at complex correlational designs.

5.1. Multiple Dependent Variables

5.1.1. Learning Objectives

Explain why researchers often include multiple dependent variables in their studies.

Explain what a manipulation check is and when it would be included in an experiment.

Imagine that you have made the effort to find a research topic, review the research literature, formulate a question, design an experiment, obtain approval from the relevant institutional review board (IRB), recruit research participants, and manipulate an independent variable. It would seem almost wasteful to measure a single dependent variable. Even if you are primarily interested in the relationship between an independent variable and one primary dependent variable, there are usually several more questions that you can answer easily by including multiple dependent variables.

5.1.2. Measures of Different Constructs

Often a researcher wants to know how an independent variable affects several distinct dependent variables. For example, Schnall and her colleagues were interested in how feeling disgusted affects the harshness of people’s moral judgments, but they were also curious about how disgust affects other variables, such as people’s willingness to eat in a restaurant. As another example, researcher Susan Knasko was interested in how different odors affect people’s behavior [Kna92]. She conducted an experiment in which the independent variable was whether participants were tested in a room with no odor or in one scented with lemon, lavender, or dimethyl sulfide (which has a cabbage-like smell). Although she was primarily interested in how the odors affected people’s creativity, she was also curious about how they affected people’s moods and perceived health, and it was a simple enough matter to measure these dependent variables too. Although she found that creativity was unaffected by the ambient odor, she found that people’s moods were lower in the dimethyl sulfide condition, and that their perceived health was greater in the lemon condition.

When an experiment includes multiple dependent variables, there is again a possibility of carryover effects. For example, it is possible that measuring participants’ moods before measuring their perceived health could affect their perceived health or that measuring their perceived health before their moods could affect their moods. So the order in which multiple dependent variables are measured becomes an issue. One approach is to measure them in the same order for all participants—usually with the most important one first so that it cannot be affected by measuring the others. Another approach is to counterbalance, or systematically vary, the order in which the dependent variables are measured.
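Counterbalancing the order of measurement can be sketched in a few lines. The example below lists every possible order of three dependent variables and cycles participants through those orders; the three measure names and the helper `order_for` are assumptions chosen for illustration, and full counterbalancing like this is only practical when the number of measures is small.

```python
from itertools import permutations

# Hypothetical dependent variables to be measured in each session.
measures = ["mood", "perceived health", "creativity"]

# Every possible measurement order: 3! = 6 orders for three measures.
orders = list(permutations(measures))


def order_for(participant_index):
    """Cycle through the orders so each is used equally often."""
    return orders[participant_index % len(orders)]


for participant_index in range(6):
    print(participant_index + 1, order_for(participant_index))
```

With a multiple of six participants, each order is used equally often, so any effect of measuring one variable before another is spread evenly across the measures rather than favoring one of them.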

5.1.3. Manipulation Checks

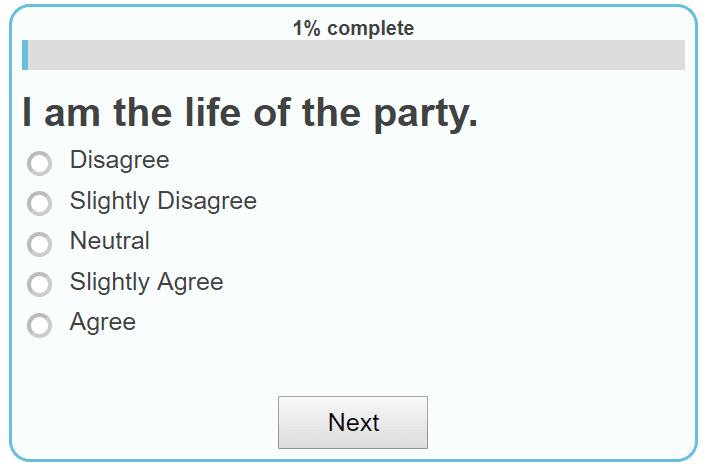

When the independent variable is a construct that can only be manipulated indirectly—such as emotions and other internal states—an additional measure of that independent variable is often included as a manipulation check. This is done to confirm that the independent variable was, in fact, successfully manipulated. For example, Schnall and her colleagues had their participants rate their level of disgust to be sure that those in the messy room actually felt more disgusted than those in the clean room.

Manipulation checks are usually done at the end of the procedure to be sure that the effect of the manipulation lasted throughout the entire procedure and to avoid calling unnecessary attention to the manipulation. Manipulation checks become especially important when the manipulation of the independent variable turns out to have no effect on the dependent variable. Imagine, for example, that you exposed participants to happy or sad movie music—intending to put them in happy or sad moods—but you found that this had no effect on the number of happy or sad childhood events they recalled. This could be because being in a happy or sad mood has no effect on memories for childhood events. But it could also be that the music was ineffective at putting participants in happy or sad moods. A manipulation check, in this case, a measure of participants’ moods, would help resolve this uncertainty. If it showed that you had successfully manipulated participants’ moods, then it would appear that there is indeed no effect of mood on memory for childhood events. But if it showed that you did not successfully manipulate participants’ moods, then it would appear that you need a more effective manipulation to answer your research question.

5.1.4. Measures of the Same Construct

Another common approach to including multiple dependent variables is to operationalize and measure the same construct, or closely related ones, in different ways. Imagine, for example, that a researcher conducts an experiment on the effect of daily exercise on stress. The dependent variable, stress, is a construct that can be operationalized in different ways. For this reason, the researcher might have participants complete the paper-and-pencil Perceived Stress Scale and also measure their levels of the stress hormone cortisol. This is an example of the use of converging operations. If the researcher finds that the different measures are affected by exercise in the same way, then he or she can be confident in the conclusion that exercise affects the more general construct of stress.

When multiple dependent variables are different measures of the same construct, especially if they are measured on the same scale, researchers have the option of combining them into a single measure of that construct. Recall that Schnall and her colleagues were interested in the harshness of people’s moral judgments. To measure this construct, they presented their participants with seven different scenarios describing morally questionable behaviors and asked them to rate the moral acceptability of each one. Although the researchers could have treated each of the seven ratings as a separate dependent variable, they combined them into a single dependent variable by computing their mean.

When researchers combine dependent variables in this way, they are treating them collectively as a multiple-response measure of a single construct. The advantage of this is that multiple-response measures are generally more reliable than single-response measures. However, it is important to make sure the individual dependent variables are correlated with each other by computing an internal consistency measure such as Cronbach’s \(\alpha\). If they are not correlated with each other, that is, if they have poor internal consistency, then it does not make sense to combine them into a measure of a single construct, and they should be treated as separate dependent variables.
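As a rough illustration of both steps, the sketch below computes Cronbach’s alpha with the standard formula, alpha = k / (k - 1) × (1 - sum of item variances / variance of total scores), and then forms a composite score as the mean of each participant’s ratings. The ratings are hypothetical (five participants, four items), and the function name is our own.

```python
from statistics import mean, variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a table with one row per participant, one column per item."""
    k = len(rows[0])                      # number of items
    items = list(zip(*rows))              # regroup the ratings by item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Hypothetical ratings, invented for this example.
ratings = [
    [2, 3, 2, 3],
    [5, 5, 4, 5],
    [3, 3, 3, 4],
    [6, 6, 5, 6],
    [1, 2, 1, 2],
]

alpha = cronbach_alpha(ratings)
composite = [mean(row) for row in ratings]  # one combined score per participant

print("alpha:", round(alpha, 2))
print("composite scores:", composite)
```

When the items rise and fall together across participants, as they do in this made-up table, alpha is high and averaging them is defensible. If alpha were low, the items would be better analyzed separately.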

5.1.5. Key Takeaways

Researchers in psychology often include multiple dependent variables in their studies. The primary reason is that this easily allows them to answer more research questions with minimal additional effort.

When an independent variable is a construct that is manipulated indirectly, it is a good idea to include a manipulation check. This is a measure of the independent variable typically given at the end of the procedure to confirm that it was successfully manipulated.

Multiple measures of the same construct can be analyzed separately or combined to produce a single multiple-item measure of that construct. The latter approach requires that the measures taken together have good internal consistency.

5.1.6. Exercises

Practice: List three independent variables for which it would be good to include a manipulation check. List three others for which a manipulation check would be unnecessary. Hint: Consider whether there is any ambiguity concerning whether the manipulation will have its intended effect.

Practice: Imagine a study in which the independent variable is whether the room where participants are tested is warm (30°) or cool (12°). List three dependent variables that you might treat as measures of separate variables. List three more that you might combine and treat as measures of the same underlying construct.

5.2. Multiple Independent Variables

5.2.1. Learning Objectives

Explain why researchers often include multiple independent variables in their studies.

Define factorial design, and use a factorial design table to represent and interpret simple factorial designs.

Distinguish between main effects and interactions, and recognize and give examples of each.

Sketch and interpret bar graphs and line graphs showing the results of studies with simple factorial designs.

Just as it is common for studies in psychology to include multiple dependent variables, it is also common for them to include multiple independent variables. Schnall and her colleagues studied the effect of both disgust and private body consciousness in the same study. The tendency to include multiple independent variables in one experiment is further illustrated by the following titles of actual research articles published in professional journals:

The Effects of Temporal Delay and Orientation on Haptic Object Recognition

Opening Closed Minds: The Combined Effects of Intergroup Contact and Need for Closure on Prejudice

Effects of Expectancies and Coping on Pain-Induced Intentions to Smoke

The Effect of Age and Divided Attention on Spontaneous Recognition

The Effects of Reduced Food Size and Package Size on the Consumption Behavior of Restrained and Unrestrained Eaters

Just as including multiple dependent variables in the same experiment allows one to answer more research questions, so too does including multiple independent variables in the same experiment. For example, instead of conducting one study on the effect of disgust on moral judgment and another on the effect of private body consciousness on moral judgment, Schnall and colleagues were able to conduct one study that addressed both variables. But including multiple independent variables also allows the researcher to answer questions about whether the effect of one independent variable depends on the level of another. This is referred to as an interaction between the independent variables. Schnall and her colleagues, for example, observed an interaction between disgust and private body consciousness because the effect of disgust depended on whether participants were high or low in private body consciousness. As we will see, interactions are often among the most interesting results in psychological research.
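An interaction can be expressed numerically as a difference between differences. The sketch below uses invented cell means for a disgust (clean vs. messy room) by private body consciousness (low vs. high) design; these numbers are hypothetical and are not the results reported by Schnall and her colleagues.

```python
# Hypothetical cell means for harshness of moral judgment (invented for illustration).
# Keys are (room, private body consciousness).
cell_means = {
    ("clean", "low"): 4.0,
    ("messy", "low"): 4.0,
    ("clean", "high"): 4.0,
    ("messy", "high"): 5.5,
}


def simple_effect(consciousness_level):
    """Effect of the room (messy minus clean) at one level of the other variable."""
    return cell_means[("messy", consciousness_level)] - cell_means[("clean", consciousness_level)]


effect_low = simple_effect("low")
effect_high = simple_effect("high")
interaction = effect_high - effect_low  # nonzero means the effect depends on the level

print("effect of room (low):", effect_low)
print("effect of room (high):", effect_high)
print("interaction contrast:", interaction)
```

If the two simple effects were equal, the contrast would be zero and there would be no interaction in these means; whether a nonzero contrast in real data is reliable is a question for statistical testing.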

5.2.2. Factorial Designs

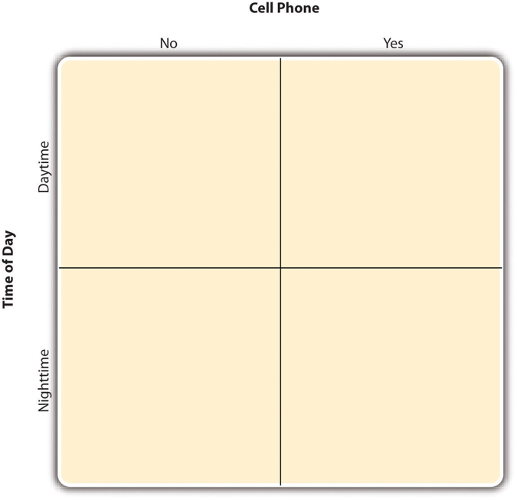

By far the most common approach to including multiple independent variables in an experiment is the factorial design. In a factorial design, each level of one independent variable (which can also be called a factor) is combined with each level of the others to produce all possible combinations. Each combination, then, becomes a condition in the experiment. Imagine, for example, an experiment on the effect of cell phone use (yes vs. no) and time of day (day vs. night) on driving ability. This is shown in the factorial design table in Figure 5.1. The columns of the table represent cell phone use, and the rows represent time of day. The four cells of the table represent the four possible combinations or conditions: using a cell phone during the day, not using a cell phone during the day, using a cell phone at night, and not using a cell phone at night. This particular design is referred to as a 2 x 2 (read “two-by-two”) factorial design because it combines two variables, each of which has two levels. If one of the independent variables had a third level (e.g., using a hand-held cell phone, using a hands-free cell phone, and not using a cell phone), then it would be a 3 x 2 factorial design, and there would be six distinct conditions. Notice that the number of possible conditions is the product of the numbers of levels. A 2 x 2 factorial design has four conditions, a 3 x 2 factorial design has six conditions, a 4 x 5 factorial design would have 20 conditions, and so on.
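Crossing the levels of each factor is exactly what a Cartesian product does, so the conditions of a factorial design can be listed mechanically. The following sketch enumerates the 2 x 2 and 3 x 2 designs described above; the variable names and level labels are our own.

```python
from itertools import product

# Levels of each factor in the 2 x 2 driving example.
cell_phone_use = ["cell phone", "no cell phone"]
time_of_day = ["day", "night"]

# Every level of one factor combined with every level of the other.
conditions_2x2 = list(product(cell_phone_use, time_of_day))
for condition in conditions_2x2:
    print(condition)

# Adding a third level to one factor gives a 3 x 2 design.
phone_type = ["hand-held", "hands-free", "no cell phone"]
conditions_3x2 = list(product(phone_type, time_of_day))
print(len(conditions_2x2), len(conditions_3x2))
```

The lengths of the two lists match the rule stated above: the number of conditions is the product of the numbers of levels.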

Fig. 5.1 Factorial Design Table Representing a 2 x 2 Factorial Design

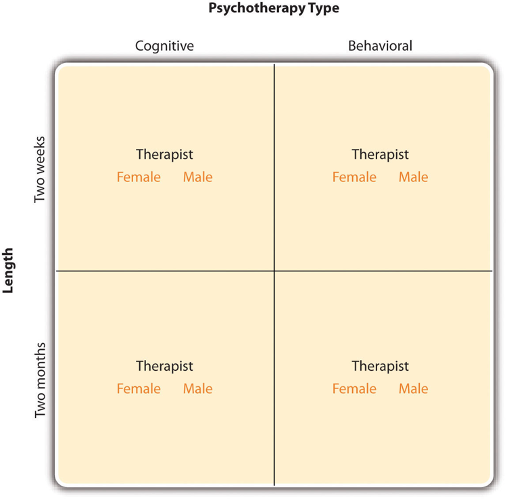

In principle, factorial designs can include any number of independent variables with any number of levels. For example, an experiment could include the type of psychotherapy (cognitive vs. behavioral), the length of the psychotherapy (2 weeks vs. 2 months), and the sex of the psychotherapist (female vs. male). This would be a 2 x 2 x 2 factorial design and would have eight conditions. Figure 5.2 shows one way to represent this design. In practice, it is unusual for there to be more than three independent variables with more than two or three levels each.

This is for at least two reasons. First, the number of conditions can quickly become unmanageable. For example, adding a fourth independent variable with three levels (e.g., therapist experience: low vs. medium vs. high) to the current example would make it a 2 x 2 x 2 x 3 factorial design with 24 distinct conditions. Second, the number of participants required to populate all of these conditions (while maintaining a reasonable ability to detect a real underlying effect) can render the design unfeasible (for more information, see the discussion about the importance of adequate statistical power in Chapter 13). As a result, in the remainder of this section we will focus on designs with two independent variables. The general principles discussed here extend in a straightforward way to more complex factorial designs.
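The arithmetic behind both problems is easy to sketch. The example below counts the conditions in the 2 x 2 x 2 x 3 design and the total sample it would need, assuming a fully between-subjects design with 20 participants per condition; that per-condition figure is an arbitrary assumption for illustration, not a recommendation.

```python
from math import prod

# Number of levels of each factor in the psychotherapy example.
levels = {
    "type of psychotherapy": 2,
    "length of psychotherapy": 2,
    "sex of psychotherapist": 2,
    "therapist experience": 3,
}

n_conditions = prod(levels.values())  # product of the numbers of levels

# Assumption for illustration: fully between-subjects, 20 participants per condition.
participants_per_condition = 20
total_participants = n_conditions * participants_per_condition

print("conditions:", n_conditions)
print("participants needed:", total_participants)
```

Each additional factor multiplies both numbers, which is why designs with many factors become impractical so quickly.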

Fig. 5.2 Factorial Design Table Representing a 2 x 2 x 2 Factorial Design

5.2.3. Assigning Participants to Conditions

Recall that in a simple between-subjects design, each participant is tested in only one condition. In a simple within-subjects design, each participant is tested in all conditions. In a factorial experiment, the decision to take the between-subjects or within-subjects approach must be made separately for each independent variable. In a between-subjects factorial design, all of the independent variables are manipulated between subjects. For example, all participants could be tested either while using a cell phone or while not using a cell phone and either during the day or during the night. This would mean that each participant was tested in one and only one condition. In a within-subjects factorial design, all of the independent variables are manipulated within subjects. All participants could be tested both while using a cell phone and while not using a cell phone and both during the day and during the night. This would mean that each participant was tested in all conditions. The advantages and disadvantages of these two approaches are the same as those discussed in Chapter 4. The between-subjects design is conceptually simpler, avoids carryover effects, and minimizes the time and effort of each participant. The within-subjects design is more efficient for the researcher and helps to control extraneous variables.

It is also possible to manipulate one independent variable between subjects and another within subjects. This is called a mixed factorial design. For example, a researcher might choose to treat cell phone use as a within-subjects factor by testing the same participants both while using a cell phone and while not using a cell phone (while counterbalancing the order of these two conditions). But he or she might choose to treat time of day as a between-subjects factor by testing each participant either during the day or during the night (perhaps because this only requires them to come in for testing once). Thus each participant in this mixed design would be tested in two of the four conditions.

Regardless of whether the design is between subjects, within subjects, or mixed, the actual assignment of participants to conditions or orders of conditions is typically done randomly.
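One way random assignment might be carried out is sketched below for the between-subjects and mixed versions of the driving example. The participant labels, the sample of 16, the fixed seed, and the helper `deal_out` are all hypothetical; shuffling and then dealing participants out in rotation is only one of several reasonable randomization schemes.

```python
import random
from collections import Counter
from itertools import permutations, product


def deal_out(participants, slots, rng):
    """Shuffle participants, then deal them to slots in rotation.

    Group sizes therefore differ by at most one.
    """
    shuffled = list(participants)
    rng.shuffle(shuffled)
    return {p: slots[i % len(slots)] for i, p in enumerate(shuffled)}


rng = random.Random(2024)  # fixed seed only so the sketch is repeatable
participants = [f"P{i:02d}" for i in range(1, 17)]  # 16 hypothetical people

# Between-subjects: each person is tested in exactly one of four conditions.
conditions = list(product(["phone", "no phone"], ["day", "night"]))
between = deal_out(participants, conditions, rng)

# Mixed: time of day is between subjects; phone use is within subjects,
# so each person gets one time of day plus a counterbalanced order.
orders = list(permutations(["phone", "no phone"]))
mixed = deal_out(participants, list(product(["day", "night"], orders)), rng)

print(Counter(between.values()))
print(Counter(mixed.values()))
```

In the mixed version, every participant experiences both phone conditions, and each order of those conditions occurs equally often within each time of day.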

5.2.4. Non-manipulated Independent Variables

In many factorial designs, one of the independent variables is a non-manipulated independent variable. The researcher measures it but does not manipulate it. The study by Schnall and colleagues is a good example. One independent variable was disgust, which the researchers manipulated by testing participants in a clean room or a messy room. The other was private body consciousness, a variable which the researchers simply measured. Another example is a study by Halle Brown and colleagues in which participants were exposed to several words that they were later asked to recall [BKD+99] . The manipulated independent variable was the type of word. Some were negative, health-related words (e.g., tumor, coronary), and others were not health related (e.g., election, geometry). The non-manipulated independent variable was whether participants were high or low in hypochondriasis (excessive concern with ordinary bodily symptoms). Results from this study suggested that participants high in hypochondriasis were better than those low in hypochondriasis at recalling the health-related words, but that they were no better at recalling the non-health-related words.

Such studies are extremely common, and there are several points worth making about them. First, non-manipulated independent variables are usually participant characteristics (private body consciousness, hypochondriasis, self-esteem, and so on), and as such they are, by definition, between-subjects factors. For example, people are either low in hypochondriasis or high in hypochondriasis; they cannot be in both of these conditions. Second, such studies are generally considered to be experiments as long as at least one independent variable is manipulated, regardless of how many non-manipulated independent variables are included. Third, it is important to remember that causal conclusions can only be drawn about the manipulated independent variable. For example, Schnall and her colleagues were justified in concluding that disgust affected the harshness of their participants’ moral judgments because they manipulated that variable and randomly assigned participants to the clean or messy room. But they would not have been justified in concluding that private body consciousness affected the harshness of those judgments because they did not manipulate that variable. It could be, for example, that having a strict moral code and a heightened awareness of one’s body are both caused by some third variable (e.g., neuroticism). Thus it is important to be aware of which variables in a study are manipulated and which are not.

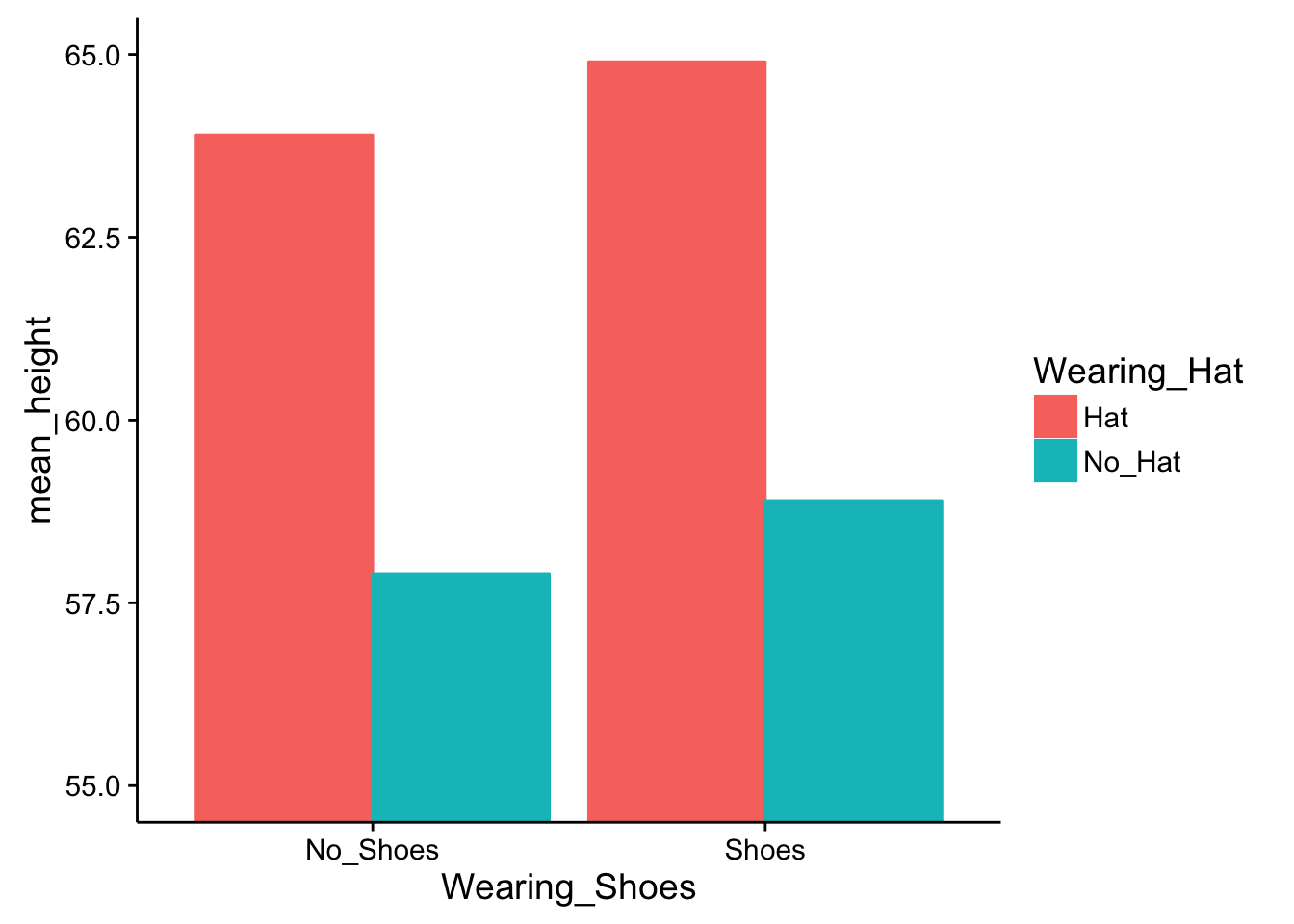

5.2.5. Graphing the Results of Factorial Experiments

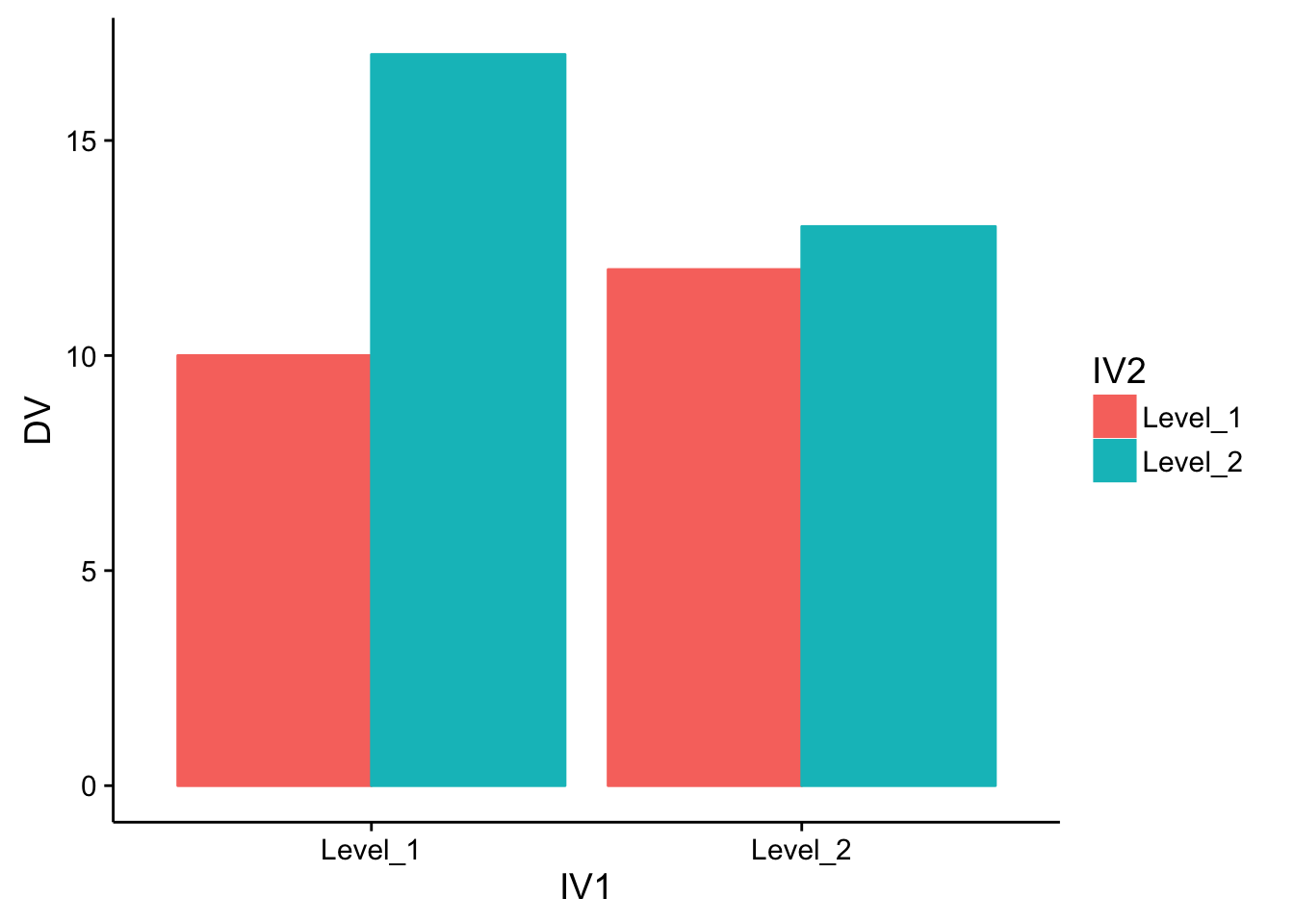

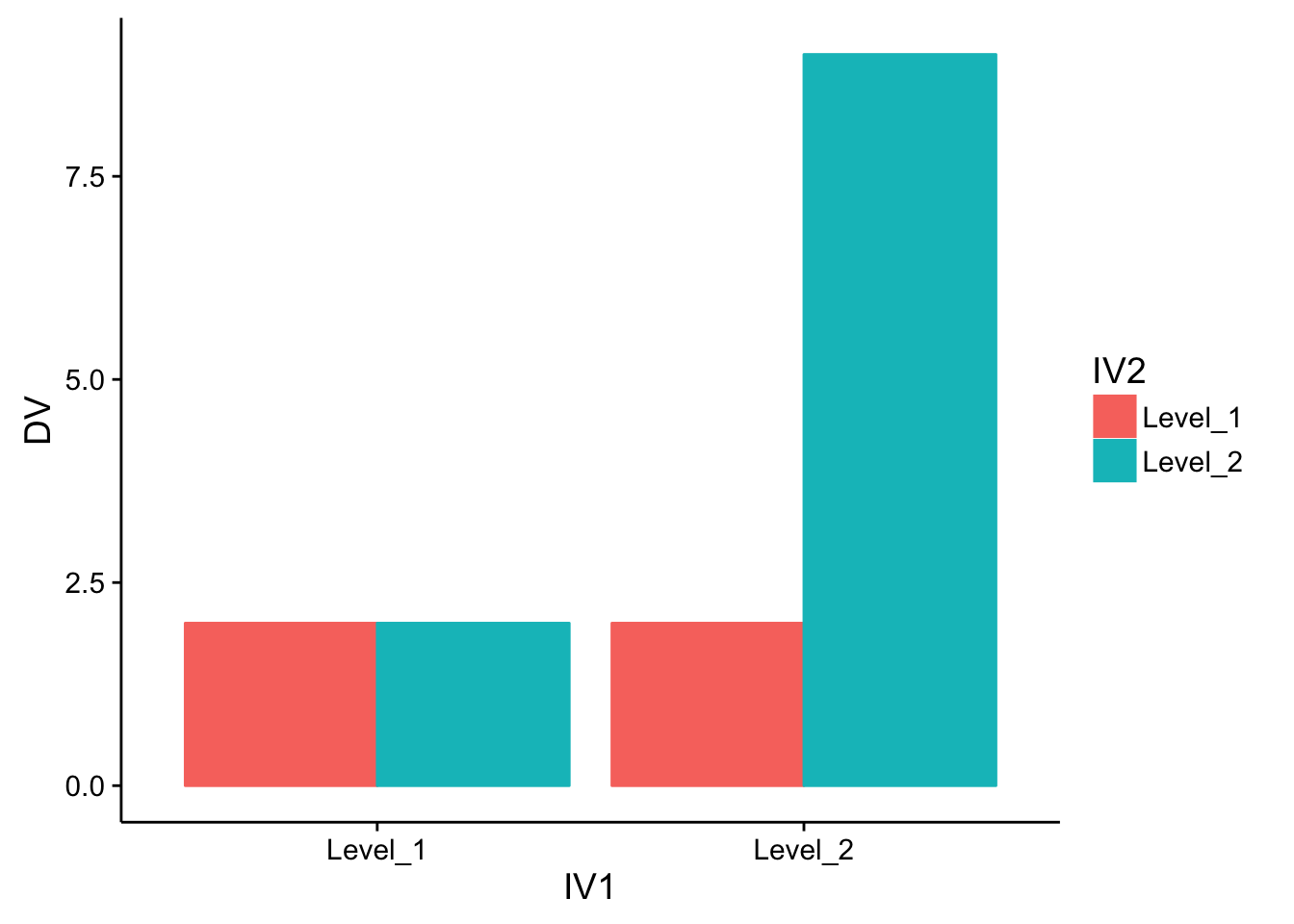

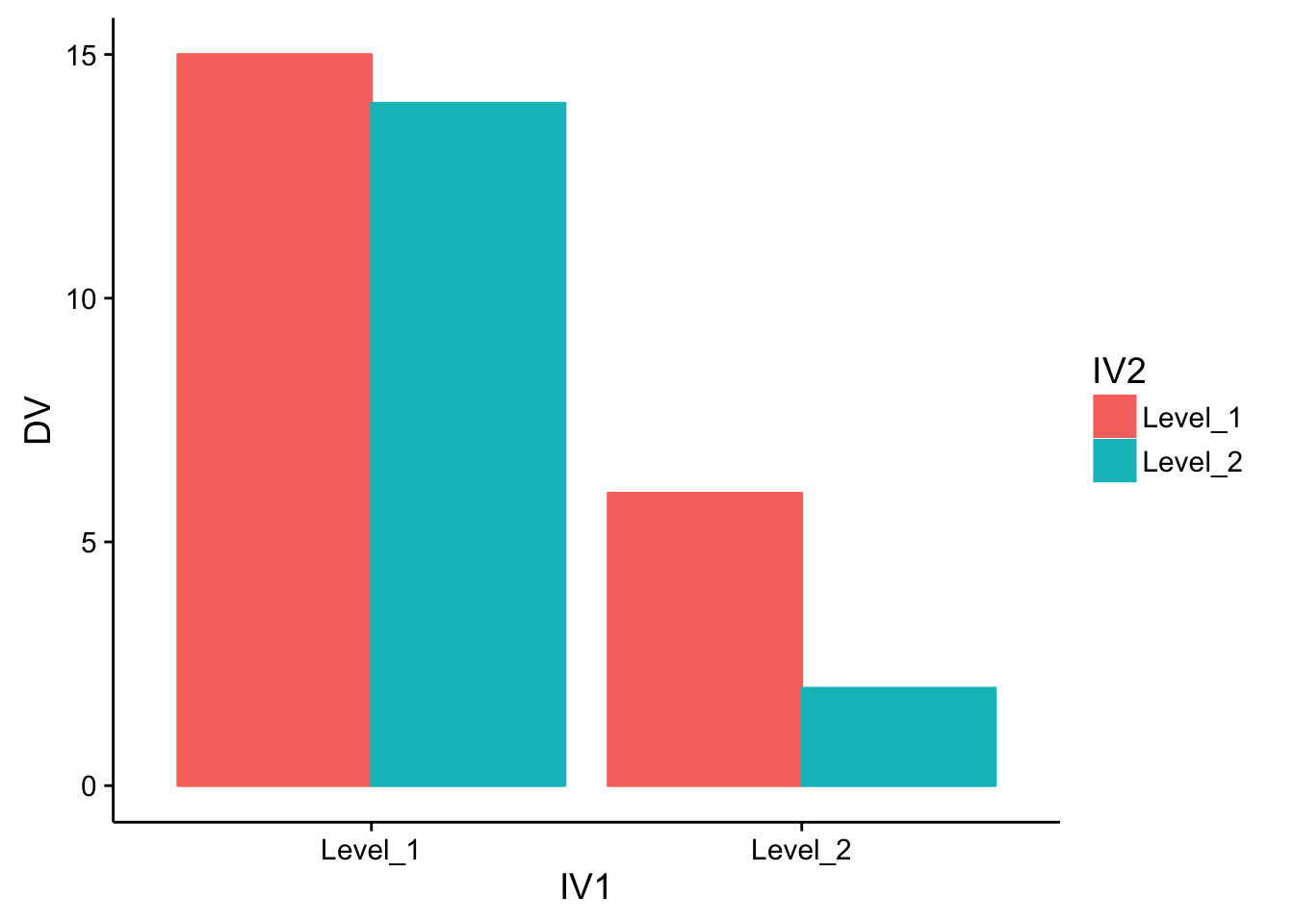

The results of factorial experiments with two independent variables can be graphed by representing one independent variable on the x-axis and representing the other by using different kinds of bars or lines. (The y-axis is always reserved for the dependent variable.)

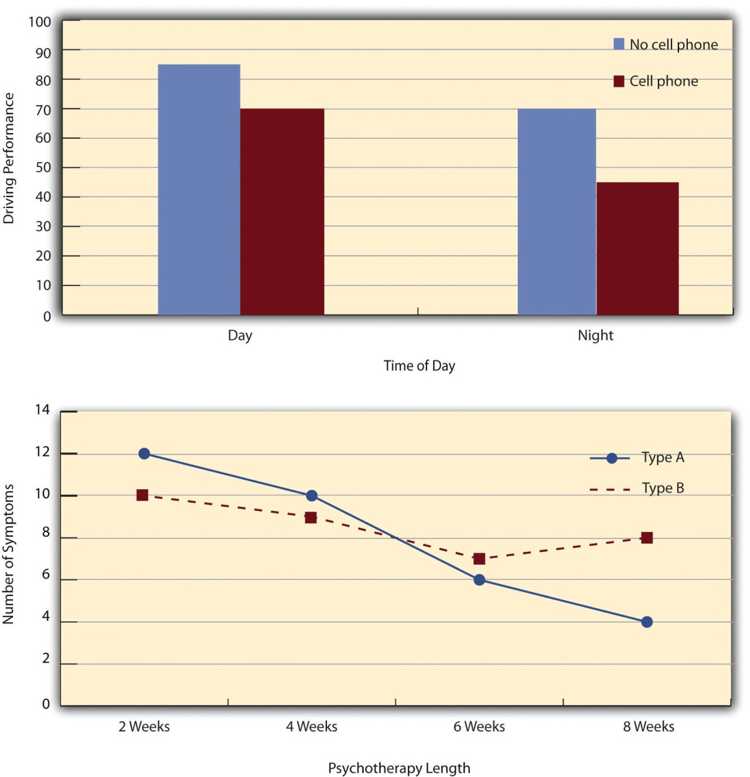

Fig. 5.3 Two ways to plot the results of a factorial experiment with two independent variables

Figure 5.3 shows results for two hypothetical factorial experiments. The top panel shows the results of a 2 x 2 design. Time of day (day vs. night) is represented by different locations on the x-axis, and cell phone use (no vs. yes) is represented by different-colored bars. It would also be possible to represent cell phone use on the x-axis and time of day as different-colored bars. The choice comes down to which way seems to communicate the results most clearly. The bottom panel of Figure 5.3 shows the results of a 4 x 2 design in which one of the variables is quantitative. This variable, psychotherapy length, is represented along the x-axis, and the other variable (psychotherapy type) is represented by differently formatted lines. This is a line graph rather than a bar graph because the variable on the x-axis is quantitative with a small number of distinct levels. Line graphs are also appropriate when representing measurements made over a time interval (also referred to as time series information) on the x-axis.

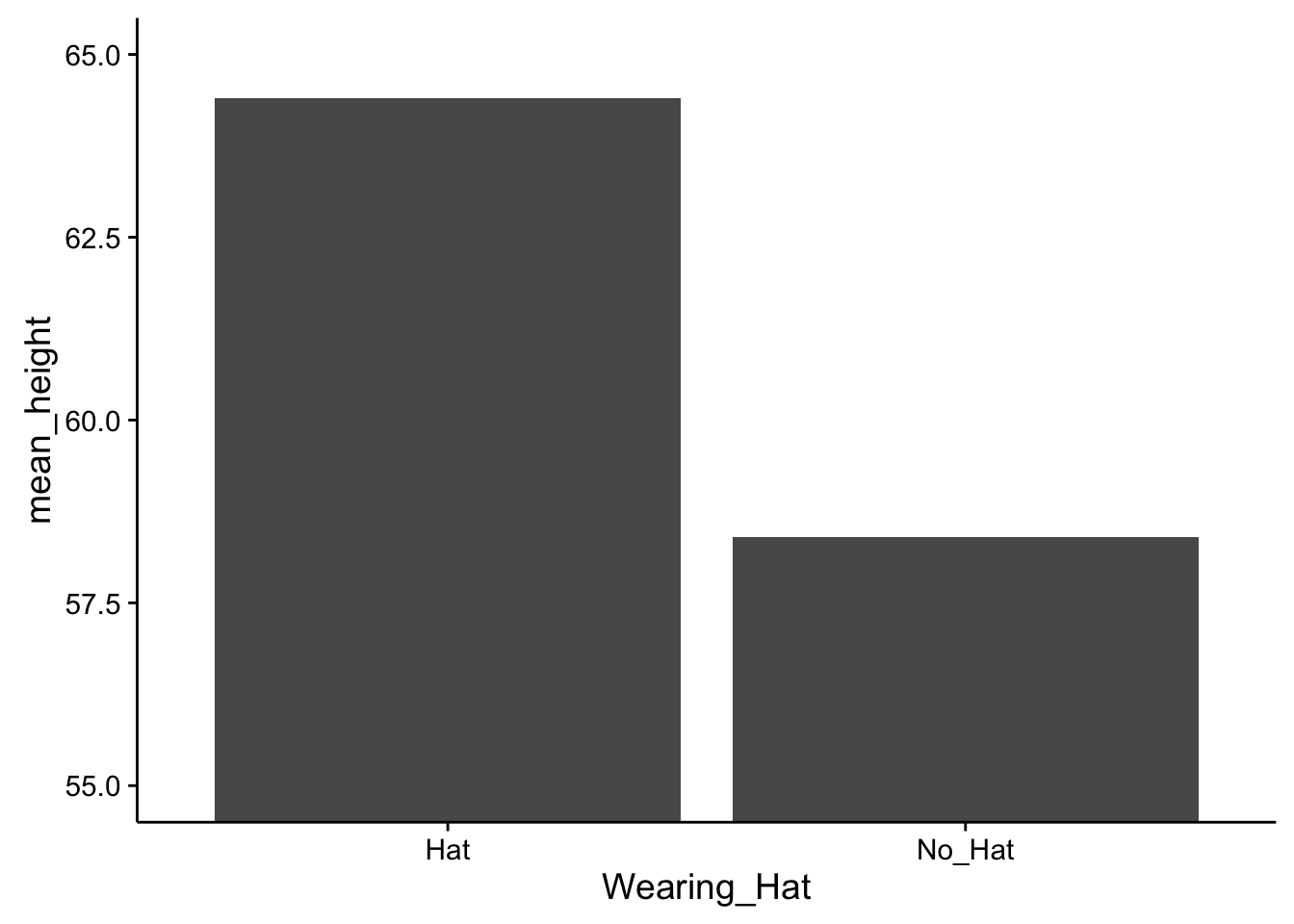

5.2.6. Main Effects and Interactions

In factorial designs, there are two kinds of results that are of interest: main effects and interactions. A main effect is the statistical relationship between one independent variable and a dependent variable, averaging across the levels of the other independent variable(s). Thus there is one main effect to consider for each independent variable in the study. The top panel of Figure 5.3 shows a main effect of cell phone use because driving performance was better, on average, when participants were not using cell phones than when they were. The blue bars are, on average, higher than the red bars. It also shows a main effect of time of day because driving performance was better during the day than during the night, both when participants were using cell phones and when they were not. Main effects are independent of each other in the sense that whether or not there is a main effect of one independent variable says nothing about whether or not there is a main effect of the other. The bottom panel of Figure 5.3, for example, shows a clear main effect of psychotherapy length. The longer the psychotherapy, the better it worked.
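A main effect can be read off the marginal means, that is, the mean for each level of one factor averaged across the levels of the other. The sketch below uses hypothetical cell means for the driving example; the numbers are invented for illustration and are not read from any figure. It also assumes equal numbers of participants per cell, so that a simple unweighted average is appropriate.

```python
from statistics import mean

# Hypothetical mean driving performance for each (phone, time of day) cell.
cell_means = {
    ("no phone", "day"): 80,
    ("phone", "day"): 60,
    ("no phone", "night"): 70,
    ("phone", "night"): 40,
}


def marginal_means(cells, factor_index):
    """Average the cell means over the other factor.

    factor_index 0 selects phone use; 1 selects time of day.
    """
    by_level = {}
    for key, value in cells.items():
        by_level.setdefault(key[factor_index], []).append(value)
    return {level: mean(values) for level, values in by_level.items()}


phone = marginal_means(cell_means, 0)
time_of_day = marginal_means(cell_means, 1)
print(phone)        # compare "no phone" with "phone"
print(time_of_day)  # compare "day" with "night"
```

A difference between the marginal means for a factor suggests a main effect of that factor, although whether the difference is statistically reliable requires an inferential test.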

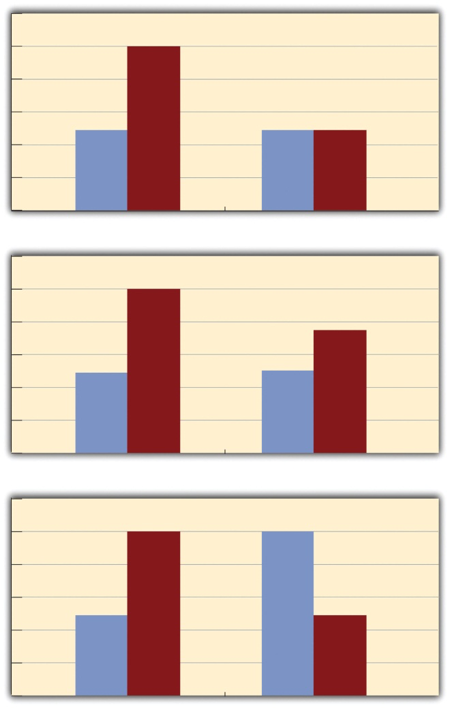

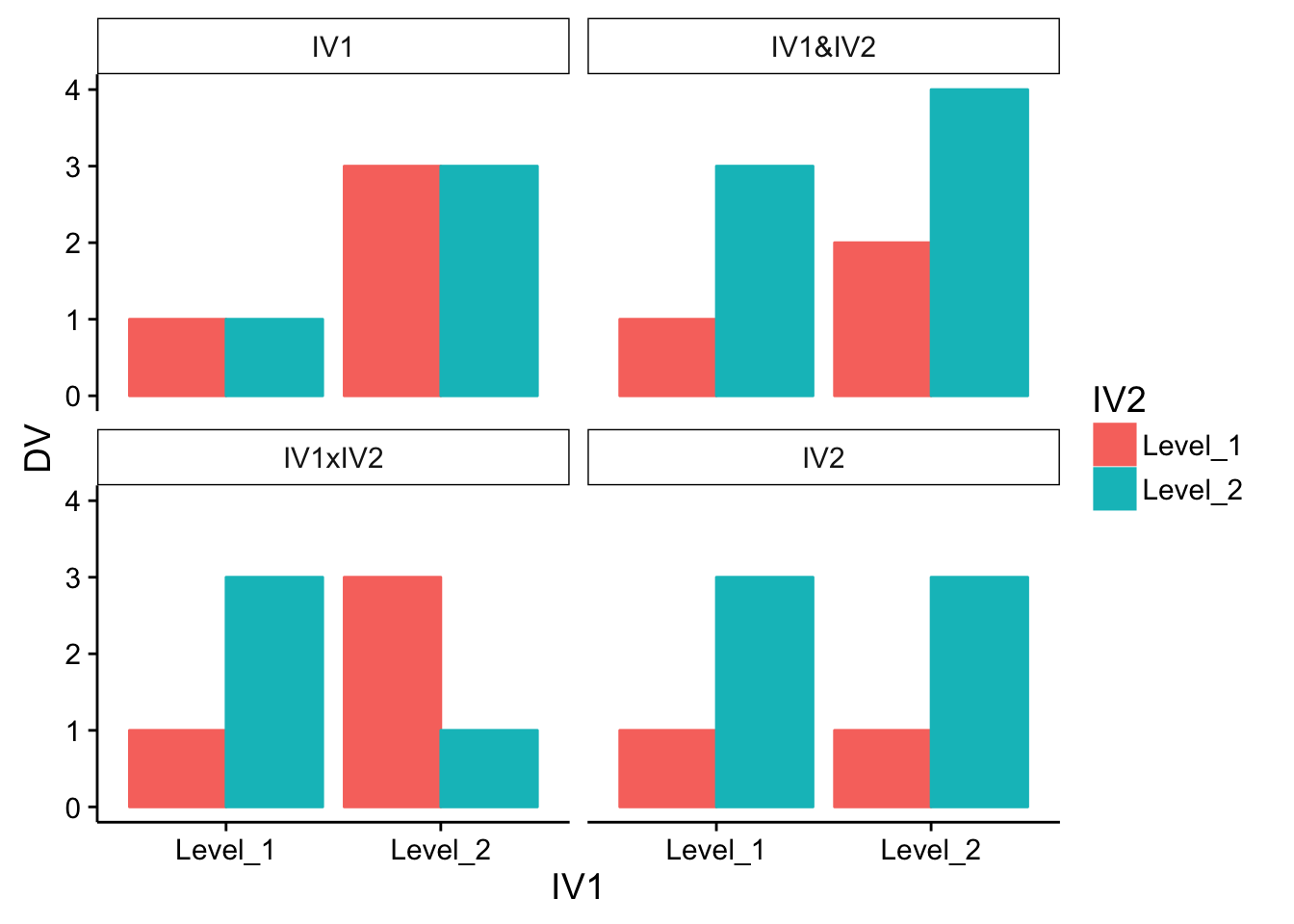

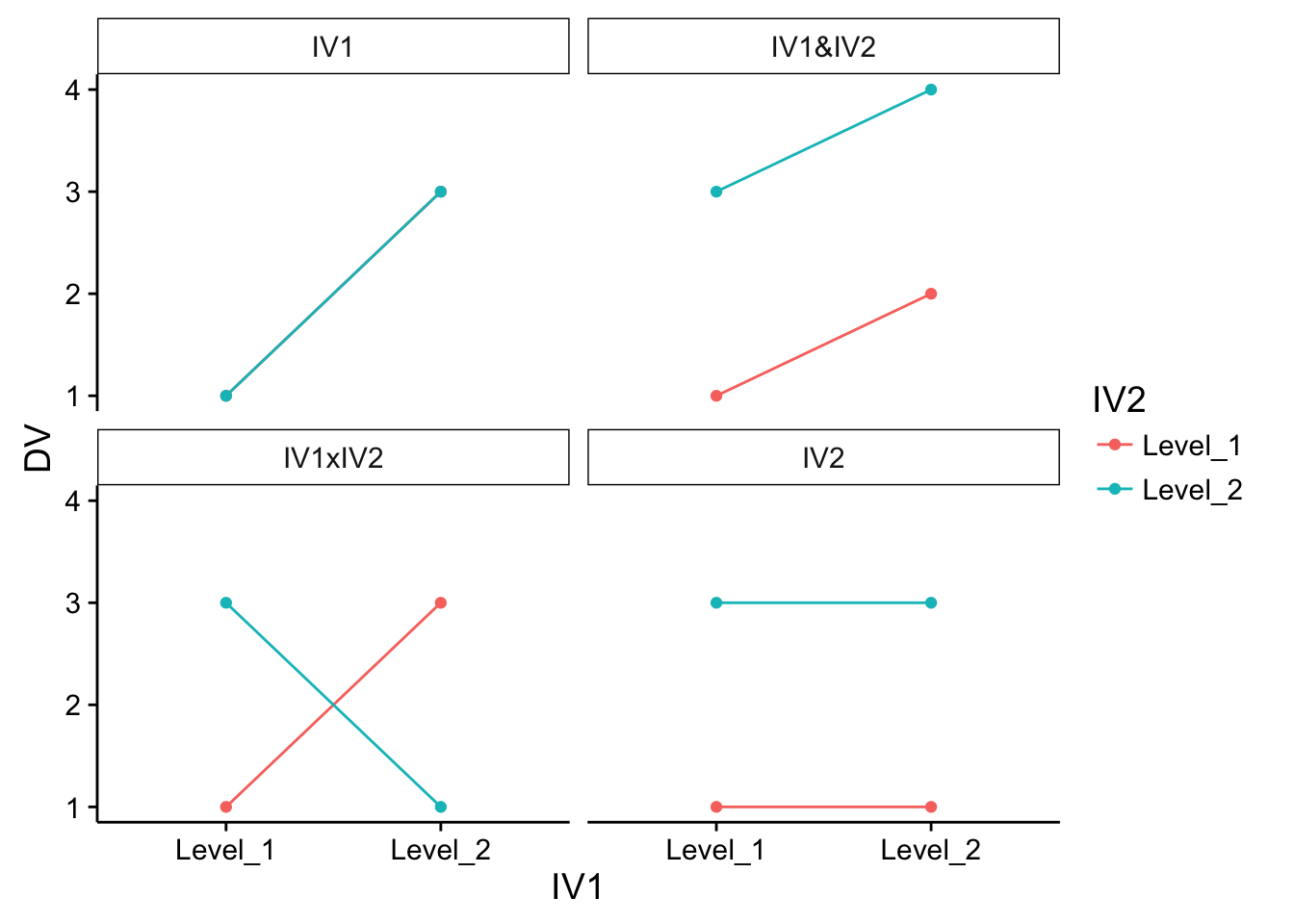

Fig. 5.4 Bar graphs showing three types of interactions. In the top panel, one independent variable has an effect at one level of the second independent variable but not at the other. In the middle panel, one independent variable has a stronger effect at one level of the second independent variable than at the other. In the bottom panel, one independent variable has the opposite effect at one level of the second independent variable than at the other.

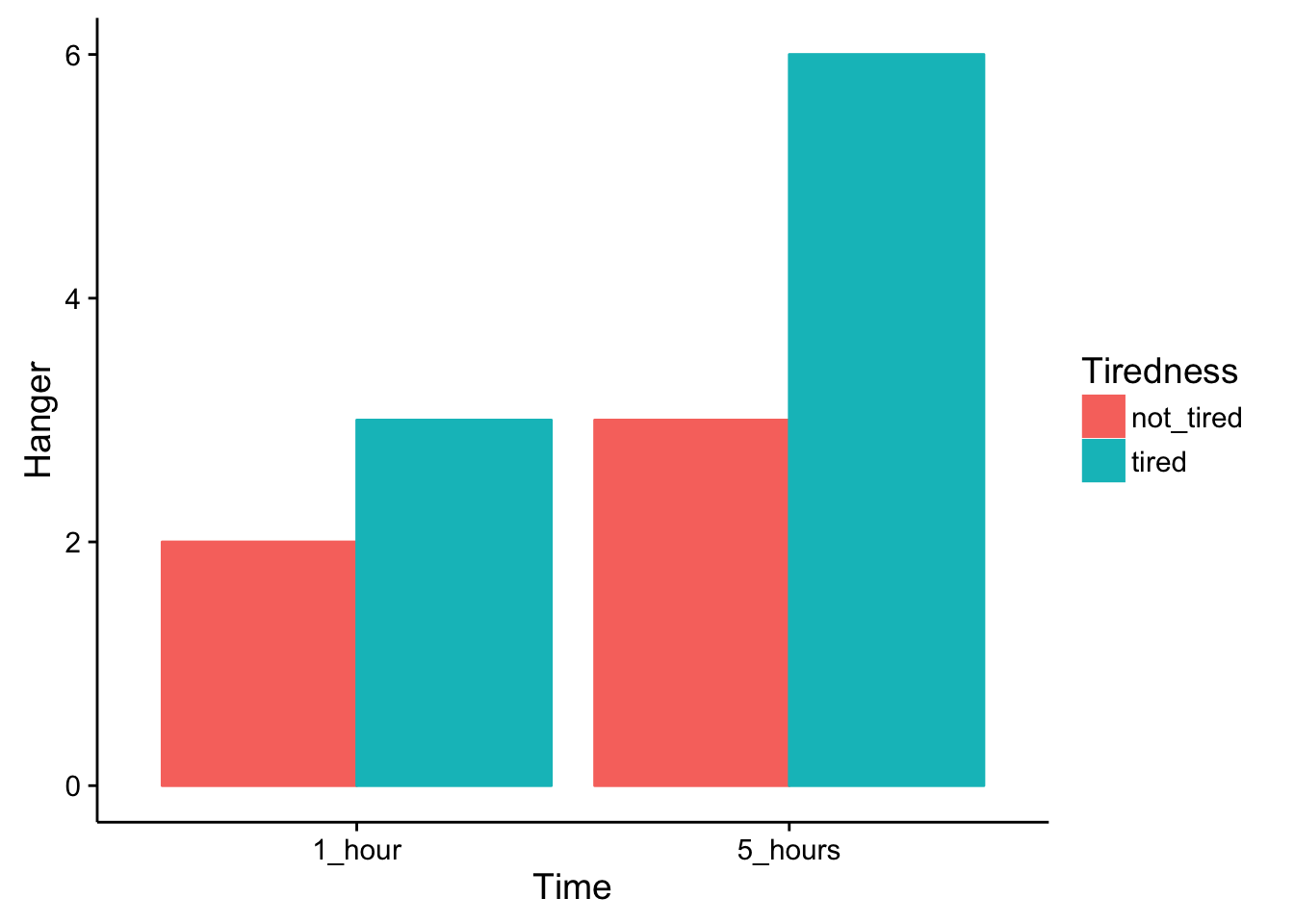

There is an interaction effect (or just “interaction”) when the effect of one independent variable depends on the level of another. Although this might seem complicated, you already have an intuitive understanding of interactions. It probably would not surprise you, for example, to hear that the effect of receiving psychotherapy is stronger among people who are highly motivated to change than among people who are not motivated to change. This is an interaction because the effect of one independent variable (whether or not one receives psychotherapy) depends on the level of another (motivation to change). Schnall and her colleagues also demonstrated an interaction because the effect of whether the room was clean or messy on participants’ moral judgments depended on whether the participants were low or high in private body consciousness. If they were high in private body consciousness, then those in the messy room made harsher judgments. If they were low in private body consciousness, then whether the room was clean or messy did not matter.

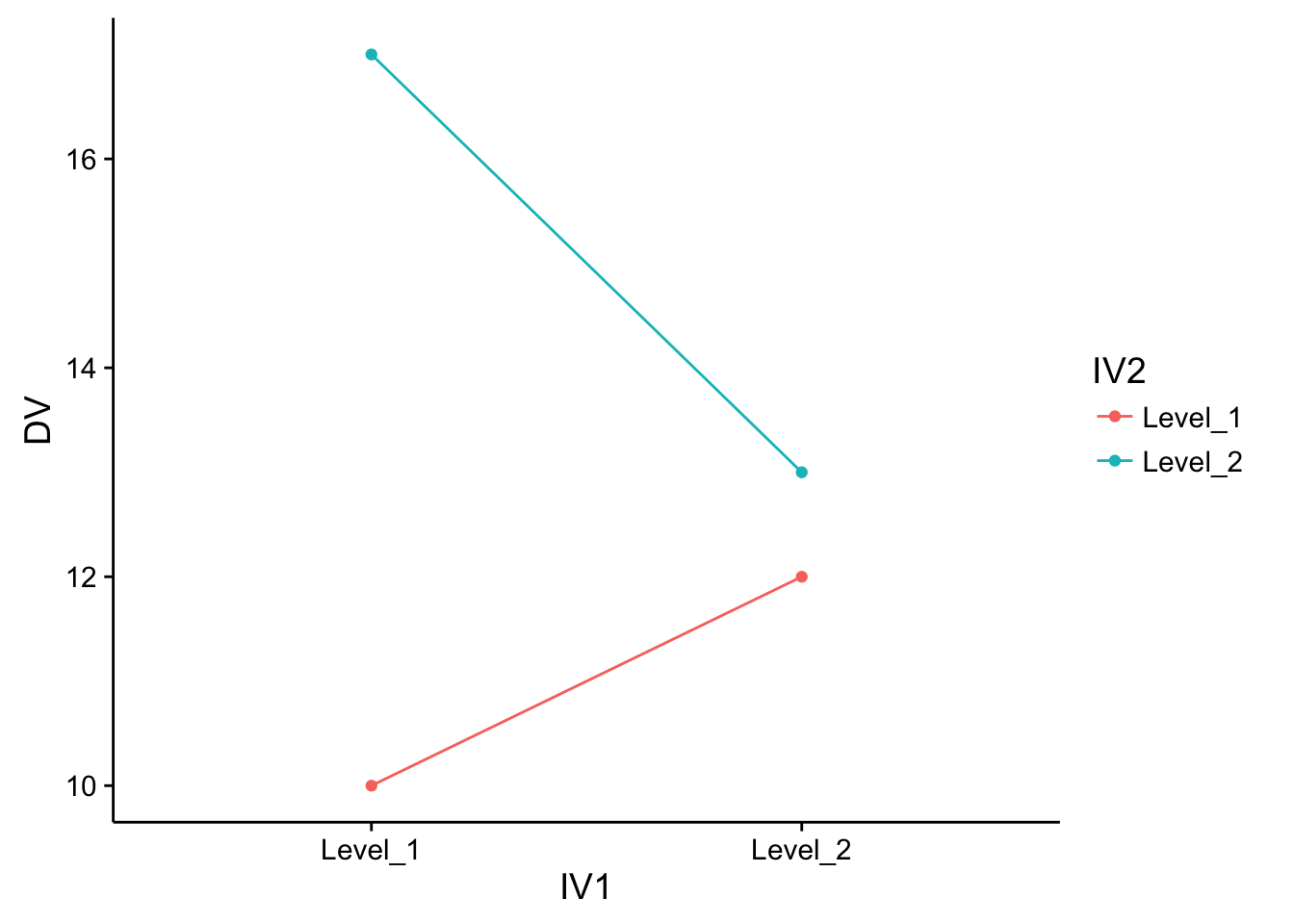

The effect of one independent variable can depend on the level of the other in several different ways. This is shown in Figure 5.5 .

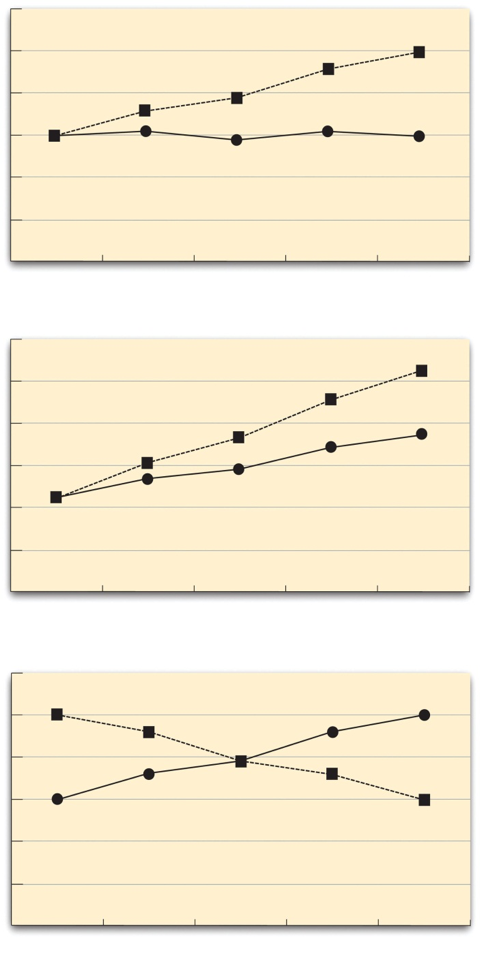

Fig. 5.5 Line Graphs Showing Three Types of Interactions. In the top panel, one independent variable has an effect at one level of the second independent variable but not at the other. In the middle panel, one independent variable has a stronger effect at one level of the second independent variable than at the other. In the bottom panel, one independent variable has the opposite effect at one level of the second independent variable than at the other.

In the top panel, independent variable “B” has an effect at level 1 of independent variable “A” but no effect at level 2 of independent variable “A” (much like the study of Schnall in which there was an effect of disgust for those high in private body consciousness but not for those low in private body consciousness). In the middle panel, independent variable “B” has a stronger effect at level 1 of independent variable “A” than at level 2. This is like the hypothetical driving example where there was a stronger effect of using a cell phone at night than during the day. In the bottom panel, independent variable “B” again has an effect at both levels of independent variable “A”, but the effects are in opposite directions. This is called a crossover interaction. One example of a crossover interaction comes from a study by Kathy Gilliland on the effect of caffeine on the verbal test scores of introverts and extraverts [Gil80]. Introverts perform better than extraverts when they have not ingested any caffeine. But extraverts perform better than introverts when they have ingested 4 mg of caffeine per kilogram of body weight.
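The three patterns can be told apart by comparing the simple effect of “B” at each level of “A”. The following is a rough sketch using hypothetical cell means chosen only to mimic each panel; the labels `A1`, `A2`, `B1`, and `B2` and the function names are illustrative. With real data, sample means are never exactly equal, so an inferential test, not an equality check, would decide whether an interaction is present.

```python
def simple_effects(cells):
    """Effect of B (level 2 minus level 1) at each level of A."""
    return {a: cells[(a, "B2")] - cells[(a, "B1")] for a in ("A1", "A2")}


def describe(cells):
    """Label the pattern of the two simple effects."""
    effects = simple_effects(cells)
    e1, e2 = effects["A1"], effects["A2"]
    if e1 == e2:
        return "no interaction"
    if e1 * e2 < 0:
        return "crossover interaction"
    if e1 == 0 or e2 == 0:
        return "effect at one level only"
    return "stronger effect at one level"


# Hypothetical cell means, one dictionary per panel.
top = {("A1", "B1"): 10, ("A1", "B2"): 30, ("A2", "B1"): 10, ("A2", "B2"): 10}
middle = {("A1", "B1"): 10, ("A1", "B2"): 40, ("A2", "B1"): 10, ("A2", "B2"): 20}
bottom = {("A1", "B1"): 10, ("A1", "B2"): 30, ("A2", "B1"): 30, ("A2", "B2"): 10}

for name, cells in [("top", top), ("middle", middle), ("bottom", bottom)]:
    print(name, simple_effects(cells), describe(cells))
```

The difference between the two simple effects is sometimes called the interaction contrast; it is zero when the lines in a line graph are parallel.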

In many studies, the primary research question is about an interaction. The study by Brown and her colleagues was inspired by the idea that people with hypochondriasis are especially attentive to any negative health-related information. This led to the hypothesis that people high in hypochondriasis would recall negative health-related words more accurately than people low in hypochondriasis but recall non-health-related words about the same as people low in hypochondriasis. And this is exactly what happened in this study.

5.2.7. Key Takeaways

Researchers often include multiple independent variables in their experiments. The most common approach is the factorial design, in which each level of one independent variable is combined with each level of the others to create all possible conditions.

In a factorial design, the main effect of an independent variable is its overall effect averaged across all other independent variables. There is one main effect for each independent variable.

There is an interaction between two independent variables when the effect of one depends on the level of the other. Some of the most interesting research questions and results in psychology are specifically about interactions.

5.2.8. Exercises

Practice: Return to the five article titles presented at the beginning of this section. For each one, identify the independent variables and the dependent variable.

Practice: Create a factorial design table for an experiment on the effects of room temperature and noise level on performance on the MCAT. Be sure to indicate whether each independent variable will be manipulated between-subjects or within-subjects and explain why.

Practice: Sketch 8 different bar graphs to depict each of the following possible results in a 2 x 2 factorial experiment:

No main effect of A; no main effect of B; no interaction

Main effect of A; no main effect of B; no interaction

No main effect of A; main effect of B; no interaction

Main effect of A; main effect of B; no interaction

Main effect of A; main effect of B; interaction

Main effect of A; no main effect of B; interaction

No main effect of A; main effect of B; interaction

No main effect of A; no main effect of B; interaction

5.3. Factorial designs: Round 2

Factorial designs require the experimenter to manipulate at least two independent variables. Consider the light-switch example from earlier. Imagine you are trying to figure out which of two light switches turns on a light. The dependent variable is the light (we measure whether it is on or off). The first independent variable is light switch #1, and it has two levels, up or down. The second independent variable is light switch #2, and it also has two levels, up or down. When there are two independent variables, each with two levels, there are four total conditions that can be tested. We can describe these four conditions in a 2x2 table.

| | Switch 1 Up | Switch 1 Down |
|---|---|---|
| Switch 2 Up | Light ? | Light ? |
| Switch 2 Down | Light ? | Light ? |

This kind of design has a special property that makes it a factorial design. That is, the levels of each independent variable are each manipulated across the levels of the other independent variable. In other words, we manipulate whether switch #1 is up or down when switch #2 is up, and when switch #2 is down. Another term for this property of factorial designs is “fully-crossed”.
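The fully-crossed property can be stated as a simple check: the set of conditions actually tested must equal the set of all combinations of levels. The sketch below applies this to the light-switch example; the function name `is_fully_crossed` and the `partial` design are hypothetical illustrations.

```python
from itertools import product

switch_1 = ["up", "down"]
switch_2 = ["up", "down"]


def is_fully_crossed(tested, *factors):
    """True if the tested conditions cover every combination of levels."""
    return set(tested) == set(product(*factors))


# All four (switch 1, switch 2) combinations: a 2x2 factorial design.
full = list(product(switch_1, switch_2))

# Only two combinations: the switches always move together, so their
# effects on the light cannot be separated.
partial = [("up", "up"), ("down", "down")]

print(is_fully_crossed(full, switch_1, switch_2))
print(is_fully_crossed(partial, switch_1, switch_2))
```

In the `partial` design, any change in the light could be due to either switch, which is why testing every combination matters.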